In the not-so-distant past, conversations about AI were primarily confined to the realms of science fiction and academic papers.

Fast forward to today, and AI is deeply integrated into the fabric of daily life, from powering smart homes to diagnosing diseases.

But as AI technology races ahead, regulatory frameworks are playing catch-up, often lagging behind the curve of innovation.

This presents humanity with a multifaceted puzzle – how do we craft laws and regulations that are agile enough to adapt to rapid technological change yet robust enough to protect societal interests?

Across the globe, regulatory efforts are far from uniform. The European Union (EU) is setting the stage with its comprehensive AI Act, which aims to categorize and control AI applications based on their risk level.

Meanwhile, the US has favored voluntary commitments over immediate legislation.

China, on the other hand, wields a tight grip on AI, aligning regulation with broader political and social governance goals.

And let’s not forget other jurisdictions – AI is a global phenomenon that will require near-universal legislative coverage.

So, where is regulation now, and how is it expected to progress?

The EU approach

The EU has adopted a stringent framework with its groundbreaking AI Act, which is expected to be fully approved by the end of the year.

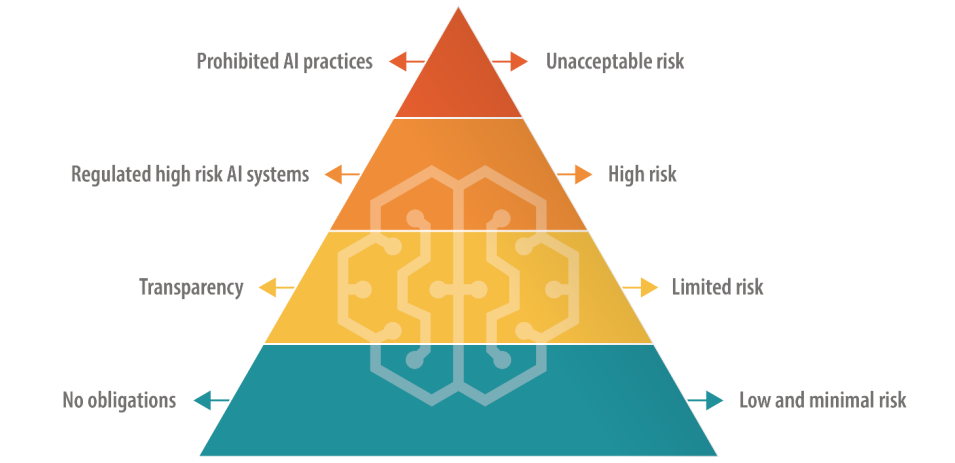

The AI Act specifies four tiers: “unacceptable risk AIs,” “high-risk AIs,” “limited-risk AIs,” and “low-risk AIs,” each with a specific set of guidelines and prohibitions.

Unacceptable risk AIs pose immediate or considerable dangers, such as causing physical or mental harm or infringing on human rights – and these will be outright banned.

High-risk AIs are permitted but subject to strict regulations covering everything from risk management to technical documentation and cybersecurity.

Limited and low-risk AIs, on the other hand, remain predominantly unregulated but are required to maintain transparency with users.

There is one reprieve in the short term, as the legislation includes a grace period of about two years for companies to align with the new regulations.

The EU proposes that the original creators of AI models should be liable for how their technology is used, even when embedded into a different system by another company or developer. There are also newly added rules for “foundation models,” which include generative AI models.

Reception to the AI Act varies – some criticize its clumsy definitions and potential to sap innovation. For instance, there is considerable uncertainty over how different AI models sort into risk categories.

Additionally, smaller AI companies are worried they lack the resources to dedicate to compliance.

OpenAI CEO Sam Altman briefly threatened to pull his company’s products from the EU market if the AI Act specified requirements that can’t be realistically adhered to.

In June, 150 large companies highlighted the Act’s heavy-handed approach, stating the current rules risk destroying European competitiveness.

Divergence from the US

A Stanford analysis of the AI Act discussed fundamental differences between the EU and US approaches to AI regulation.

Alex Engler, a fellow in Governance Studies at The Brookings Institution, said, “There’s a growing disparity between the U.S. and the EU approach [to regulating AI].”

He highlights that the EU has pushed for data privacy and transparency laws, which remain mostly lacking in the US. This disparity complicates compliance for AI companies, and the costs associated with cross-jurisdictional compliance could be prohibitive.

“Corporate interests will fight tooth and nail if you have two dramatically different standards for online platforms,” said Engler.

Overall, when push comes to shove, it’s hard to envisage tech firms not finding a way to navigate the AI Act as they have done with GDPR and will have to with the recent Digital Services Act.

Speaking to the journal Nature, Daniel Leufer, a Senior Policy Analyst at Access Now, dismisses the AI industry’s objections to the Act as just “the usual showboating.”

The UK approach

Post-Brexit, the UK aims to capitalize on its independence from the EU to formulate a more flexible and sector-specific regulatory regime.

In contrast to the AI Act, the UK government intends to regulate the applications of AI rather than the underlying software itself.

A “pro-innovation” stance

The UK government released a white paper in March outlining a “pro-innovation” framework, inviting stakeholders to share their views. However, the government has yet to issue guidelines for implementing this framework.

A statement says the “government will help the UK harness the opportunities and benefits that AI technologies present” – an approach criticized for paying insufficient attention to AI-related harm.

The government had even planned to offer AI firms an extremely controversial ‘copyright exemption’ but U-turned on that plan in August.

Britain has had a tough time attracting tech innovation lately, notably losing Cambridge-based chip designer Arm, which opted to publicly list shares in the US rather than domestically. This is perhaps fueling the UK’s anxiety to re-ignite its dwindling tech sector.

To help position the UK as forward-thinking on AI, Prime Minister Rishi Sunak announced a two-day AI Safety Summit taking place in November.

Notably, China has been invited to the event – though there is speculation it will be excluded over espionage fears.

The US approach

In the US, the focus is on allowing the industry to self-regulate, at least for now.

The US Congress is signaling a ‘wait and see’ approach, embarking on a review of AI through closed-door meetings and Chuck Schumer’s AI Insight Forums to determine where precisely AI requires new regulation.

Bipartisan agreements might revolve around narrowly tailored legislation addressing privacy, platform transparency, or protecting children online.

Additionally, some have highlighted that existing laws and regulations remain relevant to AI.

In June, Federal Trade Commission (FTC) Chair Lina Khan said, “There is no AI exemption from laws prohibiting discrimination… As this stuff becomes more embedded in how daily decisions are being made, I think they invite and merit a lot of scrutiny… I think enforcers, be it at the state level or the national level, are going to be acting.”

Voluntary frameworks and stop-gaps

Prominent tech companies like Microsoft, OpenAI, Google, Amazon, and Meta recently signed voluntary commitments for safe AI development, including conducting internal and external AI model testing before public release.

Many tech industry leaders have come forward with their own pledges, but in the absence of robust legislative oversight, the sincerity of these promises is yet to be tested.

All in all, critics claim that the US’s approach is essentially symbolic, lacking the depth and commitment of the EU’s AI Act. The first AI Insight Forum was a largely closed-door event that some criticized as restricting open debate.

Senator Josh Hawley said the event was a “giant cocktail party.”

As Ryan Calo, Professor at the University of Washington School of Law, aptly summarized, “There’s the appearance of activity, but nothing substantive and binding.”

The Chinese approach

In keeping with its appetite for state control, China is imposing some of the most stringent regulations on AI.

The emphasis is on controlling AI’s dissemination of information, reflecting China’s broader political and social governance frameworks.

For instance, generative AI providers whose products can “impact public opinion” are required to submit to security reviews.

The first wave of approved chatbots, including Baidu’s ERNIE Bot, was recently released to the public and found to censor politically-themed questions.

The surge of ‘deep fakes’ and AI-generated content poses challenges worldwide, and China is no exception. Having recognized the potential pitfalls of AI relatively early in the year, the Cyberspace Administration of China (CAC) requires providers of AI-driven content generators to verify the identities of their users.

Furthermore, watermarking provisions have been introduced to distinguish AI-generated content from human-created content, a measure that Western powers also seek to implement.

US and China divergence

AI has accelerated friction between the US and China, sparking what many have termed a “Digital Cold War” – or perhaps more appropriately, an “AI Cold War.”

US restrictions on collaboration and trade with China are nothing new, but the decoupling of the two countries sprang forward a step when Xi Jinping announced his new, reformed Made in China 2025 plan.

This aims to make China 70% self-sufficient in crucial technology sectors by 2025, including high-end AI technology.

Currently, the US is severing funding and research ties with China while restricting its procurement of AI chips from companies like Nvidia.

While the US has a clear head start in the race for AI dominance, China has a few tricks up its sleeve, including a centralized approach to regulation and near-limitless personal data.

In May, Edith Yeung, a partner at investment firm Race Capital, told the BBC, “China has a lot less rules around privacy, and a lot more data [compared to the US]. There’s CCTV facial recognition everywhere, for example.”

The close alignment between China’s government and tech industry also yields advantages, as data sharing between its government and domestic tech firms is virtually seamless.

The US and other Western jurisdictions don’t share the same access to business and personal data, which is the lifeblood of machine learning models. In fact, there are already concerns that high-quality data sources are ‘running out.’

Moreover, Western AI companies’ liberal use of potentially copyrighted training data has caused widespread uproar and lawsuits.

China’s AI companies will likely not suffer from that problem.

Balancing accessibility and risk

AI was once confined to academia and large enterprises. Within a year or so, between a quarter and a half of adults in the UK, US, and EU have used generative AI.

Accessibility has many advantages, including spurring innovation and reducing entry barriers for startups and individual developers. However, this also raises concerns surrounding the potential misuse that comes with unregulated access.

The recent incident where Meta’s Llama-1 language model was leaked onto the internet exemplifies the challenges of containing AI technology. Open-source AI models have already been used for fraudulent purposes and to generate images of child abuse.

AI companies know this, and open-source models threaten their competitive edge. Meta has emerged as somewhat of an industry antagonist, releasing a series of models for free as others, like Microsoft, OpenAI, and Google, struggle to monetize their AI products.

Other open-source models, like the Falcon 180B LLM, are exceptionally powerful while lacking guardrails.

Some speculate that major AI companies are pushing for regulation as this forces smaller players and the open-source community out of the ring, enabling them to maintain dominance over the industry.

AI’s dual-use conundrum

Another complex factor is AI’s dual or multiple applications.

The same algorithms that can improve our quality of life can also be weaponized or applied in military settings. This might be the Achilles heel of the EU AI Act’s heuristic framework – how do you classify useful AIs that can be modified for harm?

Regulating dual-use technology is notoriously complex. Implementing stringent controls could stifle innovation and economic growth when only a tiny minority misuses an AI technology.

AI companies can circumvent blame by creating policies against misuse, but that doesn’t stop people from using ‘jailbreaks’ to trick models into outputting illicit material.

Balancing AI’s economic benefits and ethical concerns

The benefits of AI are economically compelling, especially in sectors like healthcare, energy, and manufacturing.

Reports by McKinsey and PwC forecast that AI will contribute trillions to the global economy annually, irrespective of the impact of job replacements and lay-offs.

AI can be viewed as a pathway for job creation and growth for countries struggling with economic issues.

On a broader philosophical scale, AI is sometimes portrayed as humanity’s savior amidst macro-level issues such as poverty, climate change, and aging populations.

Western governments – particularly the US and UK – seem inclined to fuel growth through tax incentives, funding for AI research, and a laissez-faire regulatory approach.

This is a gamble the US took in the past by enabling the tech industry to self-regulate, but can it continue to pay off?

Will doom-mongering predictions comparing AI to ‘nuclear war’ and ‘global pandemics’ put paid to romanticized views of the technology’s potential to lift humanity out of ever-encroaching global issues?

AI presents a certain duality that evokes both utopian and dystopian visions of the future, depending on who you listen to.

Regulating such an entity is an unprecedented challenge.

In any case, by the end of 2024, we’ll likely live in a world where AI is near-universally regulated, but what no one can answer is how much the industry – and the technology itself – will have evolved by then.