Data is the lifeblood of AI, but it’s not an infinite resource. Can humanity run out of data? What happens if we do?

Complex AI models require vast quantities of training data. For example, training a large language model (LLM) like ChatGPT requires approximately 10 trillion words.

Some experts believe that supplies of high-quality data are dwindling. For example, a 2022 study from researchers at multiple universities stated, “Our analysis indicates that the stock of high-quality language data will be exhausted soon; likely before 2026… Our work suggests that the current trend of ever-growing ML models that rely on enormous datasets might slow down if data efficiency is not drastically improved or new sources of data become available.”

While generating synthetic data provides a solution, it generally fails to capture the depth, nuance, and variance of real data.

To further complicate the situation, there are concerns about what happens when AI starts consuming its own output, which researchers at École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland believe is happening already.

Their research indicates that AI companies buying human-produced data through platforms like Amazon Mechanical Turk may receive AI-generated data instead.

What happens when AI starts eating its own output? Can it be avoided?

Building datasets is expensive and time-consuming – and the stakes are high

Data is ubiquitous, but operationalizing it for AI is a complex process. The quality of the data and labels impacts the model’s performance – it’s a case of “rubbish in, rubbish out.”

To briefly describe the process of building datasets, data annotators (or labelers) take processed data (e.g., a cropped image) and label features (e.g., a car, a person, a bird).

This provides algorithms with a ‘target’ to learn from. The algorithms extract and analyze features from labeled data to predict those features in new, unseen data.

This is required for supervised machine learning, which is one of the core branches of machine learning alongside unsupervised machine learning and reinforcement learning. By some estimates, the data preparation and labeling process occupies 80% of a machine learning model project’s duration, but cutting too many corners risks compromising a model’s performance.

In addition to the practical challenges of creating high-quality datasets, the very nature of data constantly changes. What you’d define as a “dataset containing a typical selection of vehicles on the road” 10 years ago isn’t the same today. Now, you’d find a much larger number of eScooters and eBikes on the roads, for example.

These are called “edge cases,” which are rare objects or phenomena not present in datasets.

Models reflect the quality of their datasets

If you train a modern AI system on an old dataset, the model risks low performance when exposed to new, unseen data.

Between 2015 and 2020, researchers uncovered major structural biases in AI algorithms, which were partly attributed to training models on old and biased data.

For example, the Labeled Faces in the Wild Home (LFW), a dataset of celebrity faces commonly used in face recognition tasks, consists of 77.5% males and 83.5% white-skinned individuals. An AI has no hope of functioning properly if the data doesn’t represent everyone it intends to serve. Facial recognition error rates among top algorithms were found to be as low as 0.8% for white men and as high as 34.7% for dark-skinned women.

This research culminated in the landmark Gender Shades study and a documentary named Coded Bias, which investigated how AI is likely learning from flawed and unrepresentative data.

The impacts of such are far from benign – this has led to incorrect court outcomes, false imprisonment, and women and other groups being denied jobs and credit.

AIs need more high-quality data, which must be fair and representative – it’s an elusive combination.

Is synthetic data the answer?

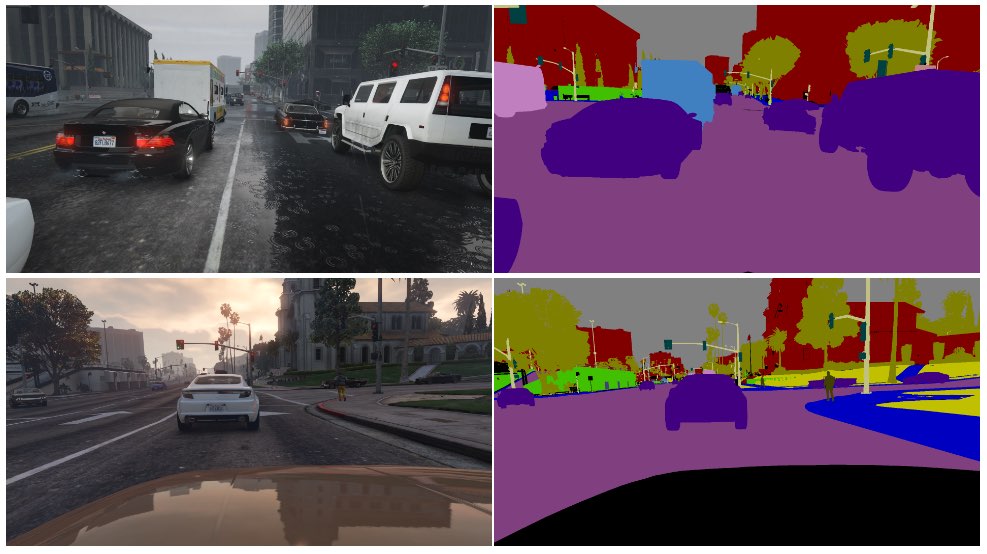

Synthetic data is commonly used in computer vision (CV), where AIs identify objects and features from images and video.

Instead of collecting image data from the real world – like photographing or videoing a street – which is technically challenging and poses privacy issues – you simply generate the data in a virtual environment.

While this supplements AIs with more data, there are several drawbacks:

- Modeling real-life scenarios in a virtual environment isn’t straightforward.

- Generating large quantities of synthetic data is still costly and time-consuming.

- Edge cases and outliers remain an issue.

- It can’t perfectly replicate the real thing.

- On the other hand, some aspects might be too perfect, and it’s difficult to determine what’s missing.

In the end, synthetic data is excellent for readily-virtualized environments, like a factory floor, but doesn’t always cut it for fast-moving real-life environments like a city street.

What about generating synthetic text data?

Text is simpler than image or video data, so can models like ChatGPT be used to generate near-infinite synthetic training data?

Yes, but it’s risky, and the impacts aren’t easy to predict. While synthetic text data can help tune, test, and optimize models, it’s not ideal for teaching models new knowledge and could entrench bias and other issues.

Here’s an analogy of why training AIs with AI-generated data is problematic:

- Consider a school that uses all the world’s best textbooks to train its students with everything there is to know from its resources in the space of a day.

- After, the school starts producing its own work based on that knowledge – analogous to a chatbot’s output. Students have learned from all data available to the date training begins, but they can’t efficiently induct new data into the knowledge system afterward.

- Knowledge is created daily – while the vast majority of human knowledge was created before any specific day, knowledge evolves and transforms over time. Crucially, humans don’t just create new knowledge constantly – we also change our perspective on existing knowledge.

- Now, suppose that the school, exhausted of data, begins to teach its students using its own output. Students start ‘eating’ their content to produce new content.

- At that stage, the students’ output fails to adjust to the real world and its usefulness declines. The system is regurgitating its own work. While the work can adapt and evolve, it does so in isolation from anything outside of that feedback loop.

AI is constantly confronting people with riddles to solve, and this one has a lot of commenters on Reddit and the Y Combinator forum stumped.

It’s mind-bending stuff, and there’s no real consensus on the ramifications.

Human data labelers often use AI to produce data

There is another unforeseen layer to the problem of producing quality training data.

Crowdworking platforms like Amazon Mechanical Turk (MTurk) are regularly used by AI companies looking to produce genuine ‘human’ datasets. There are concerns that data annotators on those platforms are using AIs to complete their tasks.

Researchers at École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland analyzed data created through MTurk to explore whether workers used AI to generate their submissions.

The study, published on 13th June, enlisted 44 MTurk participants to summarize the abstracts from 16 medical research papers. It found that 33% to 46% of users on the platform generated their submissions with AI, despite being asked to respond with natural language.

“We developed a very specific methodology that worked very well for detecting synthetic text in our scenario,” Manoel Ribeiro, co-author of the study and a Ph.D. student at EPFL, told The Register this week.

While the study’s dataset and sample size is quite small, it’s far from inconceivable to think that AIs are being trained unwittingly on AI-generated content.

The study isn’t about blaming MTurk workers – the researchers note that low wages and repetitive work contribute to the issue. AI companies want top-quality human-created data while keeping costs low. One commenter said on Reddit, “I’m currently one of these workers, tasked in training Bard. I’m sure as hell using ChatGPT for this. 20$/hr is not enough for the horrible treatment we get, so I’m gonna squeeze every cent out of this ******* job.”

The rabbit hole gets even deeper, as AIs are often trained on data scraped from the internet. As more AI-written content is published online, AI will inevitably learn from its own outputs.

As humans begin to depend on AIs for information, the quality of their outputs becomes increasingly critical. We need to find innovative methods of updating AIs with fresh, authentic data.

As Ribeiro puts it, “Human data is the gold standard, because it is humans that we care about, not large language models.”

Work analyzing the potential impact of AI consuming its own outputs is ongoing, but authentic human data remains critical to a wide range of machine learning tasks.

Generating vast quantities of data for hungry AIs while navigating risks is a work in progress.