NVIDIA announced a range of new hardware, computing platforms, and simulation engines aimed at accelerating the development of generative AI and robotics at its GTC event.

Yesterday, NVIDIA founder and CEO Jensen Huang showcased the latest products the company will provide to developers of tomorrow’s AI solutions.

Huang said, “Accelerated computing has reached the tipping point. General-purpose computing has run out of steam. We need another way of doing computing so that we can continue to scale, so that we can continue to drive down the cost of computing, so that we can continue to consume more and more computing while being sustainable.”

To deliver the massive upgrade the world’s AI infrastructure needs, Huang unveiled the following new hardware and software solutions:

- The Blackwell GPU and computing platform

- NVIDIA DGX SuperPOD supercomputer

- NIM microservices – a new way to create AI software

- Omniverse – a real-world simulator for training robots

- Project GR00T and Isaac Perceptor software – a general-purpose foundation model for humanoid robots, plus new robotics software

Here’s a closer look at these exciting new releases.

Blackwell

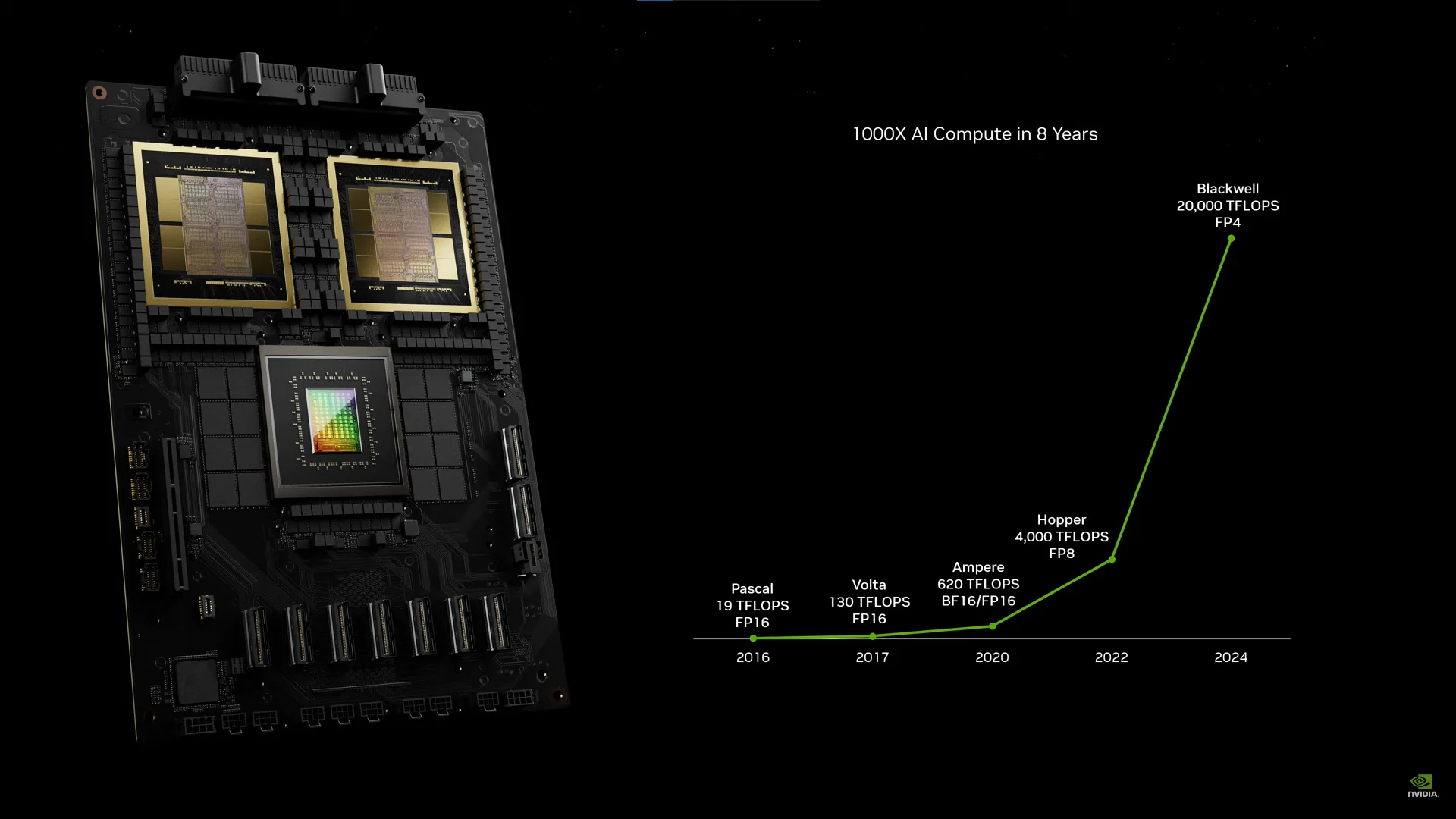

Huang said that to enable multimodal training of ever-larger AI models, the industry needs much bigger GPUs. NVIDIA describes each Blackwell die as “the largest chip physically possible,” with 104 billion transistors.

Two of these dies are joined to form a single Blackwell GPU with 208 billion transistors, delivering a significant boost in processing. Per chip, Blackwell delivers 2.5x the FP8 training performance of NVIDIA’s Hopper architecture and 5x its inference performance with FP4.

The fifth-generation NVLink interconnect that links these GPUs is twice as fast as its predecessor and can connect up to 576 Blackwell GPUs.

Pairing two Blackwell GPUs with a Grace CPU yields the GB200 Grace Blackwell Superchip, the building block of NVIDIA’s GB200 NVL72 rack. With 36 superchips (72 Blackwell GPUs) in a single rack, the NVL72 delivers exaflop-scale computing.

NVIDIA combines several of these racks into its new AI supercomputer, NVIDIA DGX SuperPOD, which delivers 11.5 exaflops of AI supercomputing at FP4 precision.
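As a rough sanity check, the SuperPOD figure can be divided back down to per-rack and per-GPU numbers. This is a minimal back-of-envelope sketch that assumes a configuration of eight racks with 72 GPUs each (our assumption, not a figure from the announcement as reported here) and splits the 11.5 exaflops evenly, ignoring any interconnect or scaling overhead.

```python
# Back-of-envelope breakdown of the DGX SuperPOD FP4 figure.
# ASSUMPTION: the quoted configuration is 8 racks x 72 GPUs each.
SUPERPOD_FP4_EXAFLOPS = 11.5  # figure quoted by NVIDIA
RACKS_PER_SUPERPOD = 8        # assumed rack count
GPUS_PER_RACK = 72            # assumed GPUs per rack

PETAFLOPS_PER_EXAFLOP = 1000


def per_rack_exaflops(total_exaflops: float, racks: int) -> float:
    """Split the aggregate FP4 figure evenly across racks."""
    return total_exaflops / racks


def per_gpu_petaflops(total_exaflops: float, racks: int, gpus_per_rack: int) -> float:
    """Split the aggregate FP4 figure evenly across every GPU, in petaflops."""
    total_gpus = racks * gpus_per_rack
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / total_gpus


if __name__ == "__main__":
    total_gpus = RACKS_PER_SUPERPOD * GPUS_PER_RACK
    print(total_gpus)  # 576
    print(per_rack_exaflops(SUPERPOD_FP4_EXAFLOPS, RACKS_PER_SUPERPOD))  # 1.4375
    print(round(per_gpu_petaflops(SUPERPOD_FP4_EXAFLOPS, RACKS_PER_SUPERPOD, GPUS_PER_RACK), 1))  # 20.0
```

Under these assumptions the GPU count comes out to 576, the same number NVLink can interconnect, and each rack accounts for roughly 1.4 exaflops, which lines up with the “exaflop computing in a single rack” claim.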

The media release listed AWS, Google Cloud, Microsoft Azure, and Oracle as first in line for the new computing hardware, which NVIDIA says can “build and run real-time generative AI on trillion-parameter large language models at up to 25x less cost and energy consumption than its predecessor.”

This is what NVIDIA’s computing progress has looked like over the last eight years.

NVIDIA NIMs

“How do we build software in the future? It is unlikely that you’ll write it from scratch or write a whole bunch of Python code or anything like that,” Huang said. “It is very likely that you assemble a team of AIs.”

Huang said that instead of writing software, companies will “assemble AI models, give them missions, give examples of work products, review plans, and intermediate results.”

NVIDIA launched a collection of pre-built containers, or microservices, which it calls NIMs (NVIDIA Inference Microservices).

Each NIM packages a pretrained model together with its APIs and the other software components needed to run it. Companies will be able to deploy these ready-made building blocks much as people use custom GPTs or Zapier integrations, instead of having to recreate the functionality from scratch.
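To make the “model plus API in a box” idea concrete, here is a minimal sketch of how an application might talk to such a container once it is running. It assumes, hypothetically, that a NIM is serving locally on port 8000 and exposes an OpenAI-style `/v1/chat/completions` route; the base URL and the model identifier `example/llm-model` are placeholders, not values from the announcement. Only the Python standard library is used, and building the request is kept separate from sending it so the payload can be inspected without a live service.

```python
import json
import urllib.request

# ASSUMPTIONS (hypothetical, for illustration only):
# - a NIM container is already running locally on port 8000
# - it serves an OpenAI-style /v1/chat/completions route
# - the model identifier below is a placeholder
NIM_BASE_URL = "http://localhost:8000"
MODEL_NAME = "example/llm-model"  # hypothetical model id


def build_chat_request(prompt: str, base_url: str = NIM_BASE_URL,
                       model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for the microservice."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply; needs a running service."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only builds the request; no network call is made here.
    req = build_chat_request("Summarize the GTC keynote in one sentence.")
    print(req.get_method(), req.full_url)
```

The point of the design is that the application code stays the same whichever model sits inside the container; swapping models would, under these assumptions, only change `MODEL_NAME` or the base URL.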

Omniverse

Embodied AI, or physical AI, is where much of today’s development is happening. Training robots in the physical world is expensive and inefficient, and NVIDIA says it has a solution.

Omniverse is a virtual-world simulation engine that acts as a virtual “gym” where robots learn articulation and the physics of interacting with the real world.

NVIDIA gives developers API access so they can train their robots in Omniverse. Developers can create a digital twin of a physical space, such as a warehouse, and optimize automated equipment and robots before deploying them in the real space.

Isaac software and Project GR00T

Huang announced new software to support robotics developers. Isaac Perceptor software and the Isaac Manipulator library will help robots see, navigate, and manipulate their environments.

NVIDIA also unveiled Project GR00T (General Robotics 003), a general-purpose foundation model for humanoid robots. This model, along with the Isaac Perceptor software, will run on Jetson Thor, a new computer for humanoid robots, so that robots trained in Omniverse can then be deployed zero-shot into the real world.

The first day of GTC brought some major tech announcements that will likely keep NVIDIA’s share price climbing. It’ll be interesting to see what other surprises Huang has in store over the next few days.

You can watch Huang’s keynote here.