Nvidia has unveiled an updated version of its Grace Hopper Superchip (GH200), which it first announced two months ago. The GH200 is designed to power data centers for large-scale AI processing.

The GH200 hasn’t gone into volume production yet, but its memory has already had a major upgrade. The secret sauce in the new chips is upgraded high-bandwidth memory called HBM3e, which delivers a memory bandwidth of 5 TB/s.

This represents a 50% improvement on the HBM3 memory in the chip that was announced at the end of May.

Nvidia has placed this high-speed memory on the same device as powerful CPUs and GPUs. The resulting increase in processing speed should dramatically improve on the AI processing capacity of current data centers.

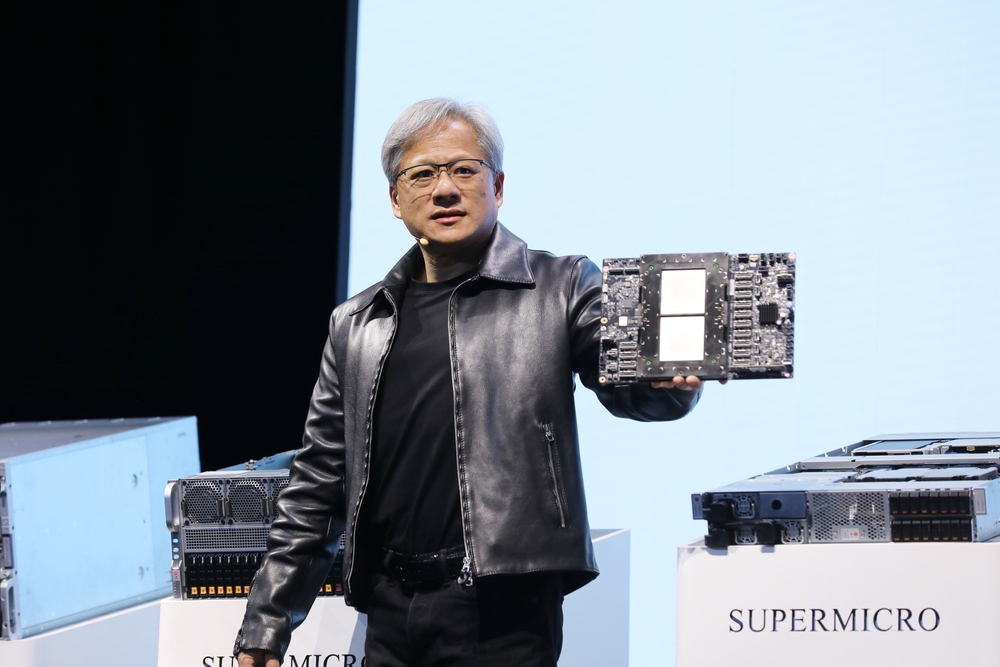

In addition to the updated chip, Nvidia also announced the GH200 Grace Hopper platform, which connects two GH200 chips on a single board.

The platform delivers eight petaflops of AI processing and 282 GB of HBM3e memory with a bandwidth of 10 TB/s.
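
As a quick sanity check, the platform figures line up with two chips working side by side. The short Python sketch below uses only the numbers quoted above. The variable names are ours, and it assumes that bandwidth and memory capacity simply add up across the two chips.

```python
# Figures quoted in the article
chips_per_platform = 2
bandwidth_per_chip_tbs = 5   # HBM3e bandwidth per GH200, in TB/s
platform_memory_gb = 282     # total HBM3e on the dual-chip platform, in GB

# Assumption: bandwidth and capacity scale linearly with the number of chips
platform_bandwidth_tbs = chips_per_platform * bandwidth_per_chip_tbs
memory_per_chip_gb = platform_memory_gb / chips_per_platform

print(f"Platform bandwidth: {platform_bandwidth_tbs} TB/s")
print(f"Memory per chip: {memory_per_chip_gb:.0f} GB")
```

Under that assumption, the 10 TB/s platform figure is just two 5 TB/s chips, and each chip carries half of the 282 GB.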

How will Nvidia’s chip change data centers?

The numbers can be a little hard to get your head around. So how big a deal is this new chip and platform?

In his presentation, Nvidia CEO Jensen Huang used the example of a typical data center running 8,800 x86 processors performing a variety of computing functions, and compared it with what the same budget would buy in GH200 hardware (ISO-budget).

A data center like this running Llama 2 for inference, with a vector database being queried and SDXL generating the outputs, would cost around $100m and use 5 MW of power.

A $100m data center using 2,500 GH200 platforms could run the same models 12 times faster and would use only 3 MW of power.
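
To put the same-budget comparison into a single number, you can combine the speedup and the power draw into performance per watt. This is a rough back-of-the-envelope sketch using only the figures quoted above. The variable names are ours, and it assumes the 12x speedup and both power figures describe the same workload.

```python
# ISO-budget figures quoted in the article (both data centers cost ~$100m)
x86_power_mw = 5       # x86 data center power draw, in MW
gh200_power_mw = 3     # GH200 data center power draw, in MW
speedup = 12           # GH200 throughput relative to the x86 data center

# Fraction of power saved by the GH200 data center
power_saving = 1 - gh200_power_mw / x86_power_mw

# Performance per watt, relative to the x86 baseline
perf_per_watt_gain = speedup * x86_power_mw / gh200_power_mw

print(f"Power saving: {power_saving:.0%}")
print(f"Performance per watt: {perf_per_watt_gain:.0f}x the x86 baseline")
```

On these numbers, the GH200 data center draws 40% less power while delivering roughly 20 times the performance per watt.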

If a data center were focused on a very specific set of tasks (ISO-workload), the comparison would become even more staggering.

To match the performance of the $100m data center, you would only need to spend $8m on 210 GH200 devices, which would use one-twentieth of the energy.
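
The same-workload case can be worked through in the same way. The sketch below again uses only the figures quoted above, with variable names of our own. It assumes the twentyfold energy reduction is measured against the 5 MW drawn by the x86 data center.

```python
# ISO-workload figures quoted in the article
x86_cost_musd = 100     # x86 data center cost, in millions of dollars
x86_power_mw = 5        # x86 data center power draw, in MW
gh200_cost_musd = 8     # cost of 210 GH200 devices, in millions of dollars
energy_reduction = 20   # GH200 setup uses this many times less energy

# How many times cheaper the GH200 setup is for the same workload
cost_ratio = x86_cost_musd / gh200_cost_musd

# Assumption: the energy reduction applies to the 5 MW x86 baseline
gh200_power_mw = x86_power_mw / energy_reduction

print(f"Cost: {cost_ratio}x cheaper")
print(f"Implied GH200 power draw: {gh200_power_mw} MW")
```

That works out to a setup that is 12.5 times cheaper and, under the stated assumption, draws about a quarter of a megawatt.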

Needless to say, any AI cloud computing data centers that were in the planning phase before this release will be heading back to the drawing board.

The GH200 chips will be rolling out in Q2 2024 and are expected to eat into a lot of x86 processor business. AMD’s new MI300 chip is Nvidia’s closest rival, but its advantage of larger memory seems to have been eroded by the upgrade in the new GH200.

The advances in processor technology that we’re seeing are outpacing Moore’s Law and show no sign of slowing down. Generative AI applications are already delivering impressive performance, but it looks like they’re about to get a lot faster.