The surging demand for AI is on a collision course with environmental sustainability, with experts saying that coal power might stick around to meet electricity demand.

Countries worldwide are aiming to transition to net zero, investing in green energy and cutting fossil fuel consumption.

This is at odds with the immense electricity demand created by AI, particularly generative AI, which serves millions of users worldwide.

That scale is the key point: even a couple of years ago, AI models were comparatively small and confined to localized uses.

Today, you, me, and millions of others have at least experimented with AI: some 40% of adults in the US and Europe, by some estimates, and 75% of under-18s.

AI companies see a future where their products are embedded into everything we do and every device we use, but AI isn’t powered by thin air. Like all technologies, it requires energy.

A recent paper found the BLOOM model used up 433 MWh to train, and GPT-3 needed a whopping 1287 MWh.

OpenAI’s ChatGPT needs an estimated 564 MWh daily to compute answers to user prompts. Each individual output represents a calculation performed across OpenAI’s sprawling neural networks, and every one of those calculations requires energy.

Let’s put that into perspective: 1287 MWh could power 43,000 to 128,700 average households for a day, assuming an average daily use of 10 to 30 kWh per household.

It could also power over 200,000,000 LED light bulbs for an hour or drive an electric vehicle for roughly 4 to 5 million kilometers.
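These comparisons are plain unit arithmetic and can be checked with a short sketch. The household range of 10 to 30 kWh per day comes from the text above. The 6 W LED bulb and the 0.26 to 0.32 kWh/km electric-vehicle consumption are assumptions picked for illustration (the source does not state them), so the bulb and EV results shift with different values.

```python
# Rough sanity check of the energy comparisons in the text.
# Figures taken from the article: 1287 MWh (GPT-3 training),
# 564 MWh/day (ChatGPT inference), 10-30 kWh per household per day.
# ASSUMPTIONS (not in the article): 6 W LED bulb, 0.26-0.32 kWh/km EV.

KWH_PER_MWH = 1000
WH_PER_KWH = 1000

GPT3_TRAINING_MWH = 1287
CHATGPT_DAILY_MWH = 564


def household_days(energy_mwh, kwh_per_household_day):
    """Number of households the energy could supply for one day."""
    return energy_mwh * KWH_PER_MWH / kwh_per_household_day


def led_bulb_hours(energy_mwh, bulb_watts):
    """Number of bulbs of the given wattage that could run for one hour."""
    return energy_mwh * KWH_PER_MWH * WH_PER_KWH / bulb_watts


def ev_kilometers(energy_mwh, kwh_per_km):
    """Distance an EV could cover at the given consumption."""
    return energy_mwh * KWH_PER_MWH / kwh_per_km


if __name__ == "__main__":
    print("Households (30 kWh/day):", household_days(GPT3_TRAINING_MWH, 30))
    print("Households (10 kWh/day):", household_days(GPT3_TRAINING_MWH, 10))
    print("6 W LED bulbs for 1 hour:", led_bulb_hours(GPT3_TRAINING_MWH, 6))
    print("EV km at 0.32 kWh/km:", ev_kilometers(GPT3_TRAINING_MWH, 0.32))
    print("EV km at 0.26 kWh/km:", ev_kilometers(GPT3_TRAINING_MWH, 0.26))
    # How many days of ChatGPT inference equal one GPT-3 training run
    print("Days of inference:", GPT3_TRAINING_MWH / CHATGPT_DAILY_MWH)
```

Under these assumptions, the estimated daily inference load overtakes GPT-3's entire training budget in a little over two days, which is why serving millions of users matters at least as much as training.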

While this study and others have their limitations, public data from open-source AI companies like Hugging Face corroborates the scale of these figures.

The environmental implications of AI extend beyond mere energy consumption. Water usage at Microsoft’s data centers underscores the resource-intensive nature of AI operations. A 15-megawatt data center can consume up to 360,000 gallons of water daily.

The International Energy Agency (IEA) has warned of the broader impact of data centers, which already account for more than 1.3% of global electricity consumption. This figure is poised to rise as AI and data processing demands escalate, further stressing the global energy infrastructure and amplifying the call for more sustainable practices within the AI industry.

The Boston Consulting Group estimates that by the end of this decade, electricity consumption at US data centers could triple from 2022 levels to as much as 390 terawatt hours, accounting for about 7.5% of the country’s projected electricity demand.

The EU has also said data center energy demands will double by 2026. In the US or China alone, data centers could consume electricity equal to the annual output of about 80 to 130 coal power plants by around 2030.

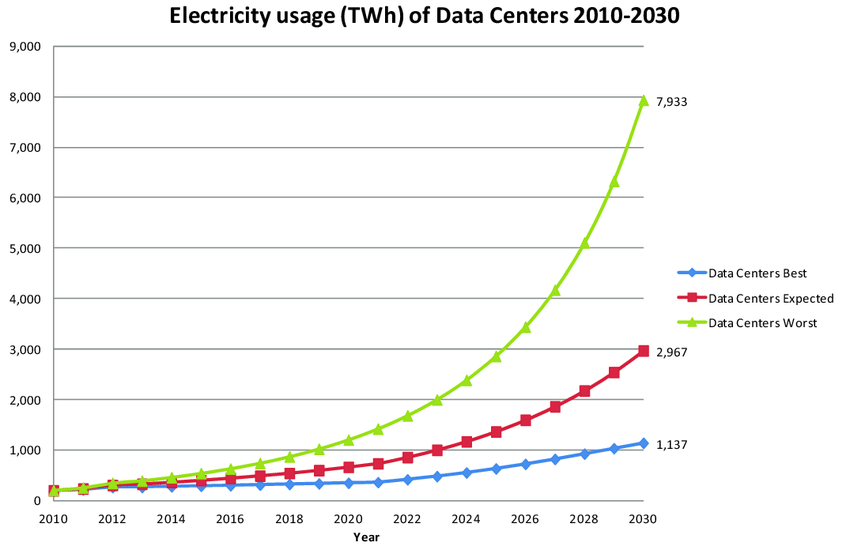

In the worst-case scenario shown in the graph, data centers might consume some 8,000 TWh of electricity by 2030, which is 30% of the world’s electricity consumption today. That’s double what the US consumes annually.

We don't want to sensationalize. This is an upper-bound estimate by some margin, and data centers are used for many other things besides AI. Still, the figures are quite shocking, even at the lower bound of 1,100 TWh.

Speaking at the World Economic Forum, OpenAI CEO Sam Altman himself said, “We do need way more energy in the world than we thought we needed before. We still don’t appreciate the energy needs of this technology.”

“AI will consume vastly more power than people expected,” he continued, suggesting that energy sources like nuclear fusion or cheaper solar power are vital for AI’s progress.
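The percentages quoted in this section also imply some totals that the text does not state outright. The sketch below backs them out. The helper name `implied_total` is hypothetical, and the results are only as reliable as the rounded figures they are derived from.

```python
# Back out the totals implied by the shares quoted in the article.
# All inputs are the article's own figures; the outputs are derived,
# not independently sourced.


def implied_total(part_twh, share):
    """Total (TWh) implied when part_twh is the given share of it."""
    return part_twh / share


if __name__ == "__main__":
    # BCG: up to 390 TWh, about 7.5% of projected US demand, triple 2022 levels
    print("Implied projected US demand (TWh):", implied_total(390, 0.075))
    print("Implied 2022 US data center use (TWh):", 390 / 3)

    # Worst case: 8,000 TWh, 30% of today's world use, double US annual use
    print("Implied world consumption today (TWh):", implied_total(8000, 0.30))
    print("Implied US annual consumption (TWh):", 8000 / 2)
```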

Data centers strain the energy grid

In the heart of northern Virginia, a region now famously known as “data center alley,” the rapid growth of generative AI is pushing the limits of electricity generation.

Local power providers even had to halt connections to new data centers at one point in 2022, as the demand was simply too high. Due to community resistance, proposals to use diesel generators during power shortages were shelved.

Bloomberg reports that in the Kansas City area, the construction of a data center and an electric-vehicle battery factory required so much power that plans to decommission a coal plant were postponed.

Ari Peskoe, from the Electricity Law Initiative at Harvard Law School, warned of the potential dire consequences if utilities fail to adapt: “New loads are delayed, factories can’t come online, our economic growth potential is diminished,” he says.

“The worst-case scenario is utilities don’t adapt and keep old fossil-fuel capacity online and they don’t evolve past that.”

Rob Gramlich of Grid Strategies echoed these concerns, highlighting to Bloomberg the risk of rolling blackouts if infrastructure improvements lag behind.

The utility sector’s challenges aren’t limited to data centers. Recent legislation and incentives are spurring the construction of semiconductor, EV, and battery factories, which is also contributing to the soaring demand for electricity.

For instance, Evergy, serving the Kansas City area, delayed retiring a 1960s coal plant to cope with the demand from new developments, including a Meta Platforms data center and a Panasonic EV battery factory.

Despite the preference of many tech firms and clean tech manufacturers for renewable energy, the reality is different. It’s difficult to envisage quite how this energy usage can be offset.

The situation is not unique to the US. Globally, China, India, the UK, and the EU have all issued warnings about AI’s rising electricity demands.

How will we pay?

As AI technologies become ubiquitous, their ecological footprint clashes with global ambitions for a net-zero future. Even putting lofty goals for obtaining net zero aside, power grids simply can’t sustain the industry’s current trajectory.

Is an “AI winter” coming, in which AI undergoes a long process of becoming more refined and efficient before becoming more intelligent? Or will breakthroughs and industry promises keep development afloat?

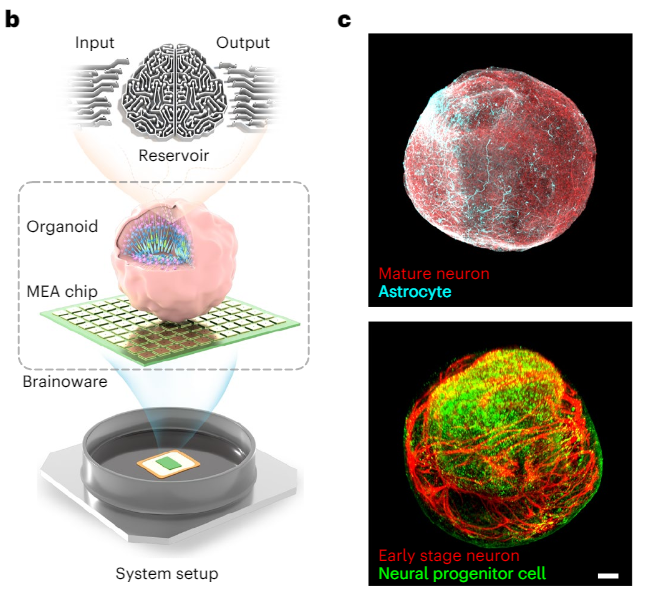

Bio-inspired AI, for example, is a promising frontier that seeks to harmonize the efficiency of natural systems with computational intelligence. Earth is inhabited by billions of extremely advanced organisms ‘powered’ by natural sources like food and the Sun – can this be a blueprint for AI?

The answer is a tentative yes, with neuromorphic AI chips based on synaptic functions becoming increasingly viable. AI speech recognition has even been performed using biological cells formed into ‘organoids’ – essentially ‘mini-brains.’

Other methods of mitigating the AI industry’s resource drain include an “AI tax.”

Usually posited as a method of mitigating AI-related job losses, an AI tax could see entities benefiting from AI advancements contribute to mitigating their environmental impacts.

In the end, it’s difficult to predict how the industry will handle these demands and to what extent people will have to shoulder the burden.