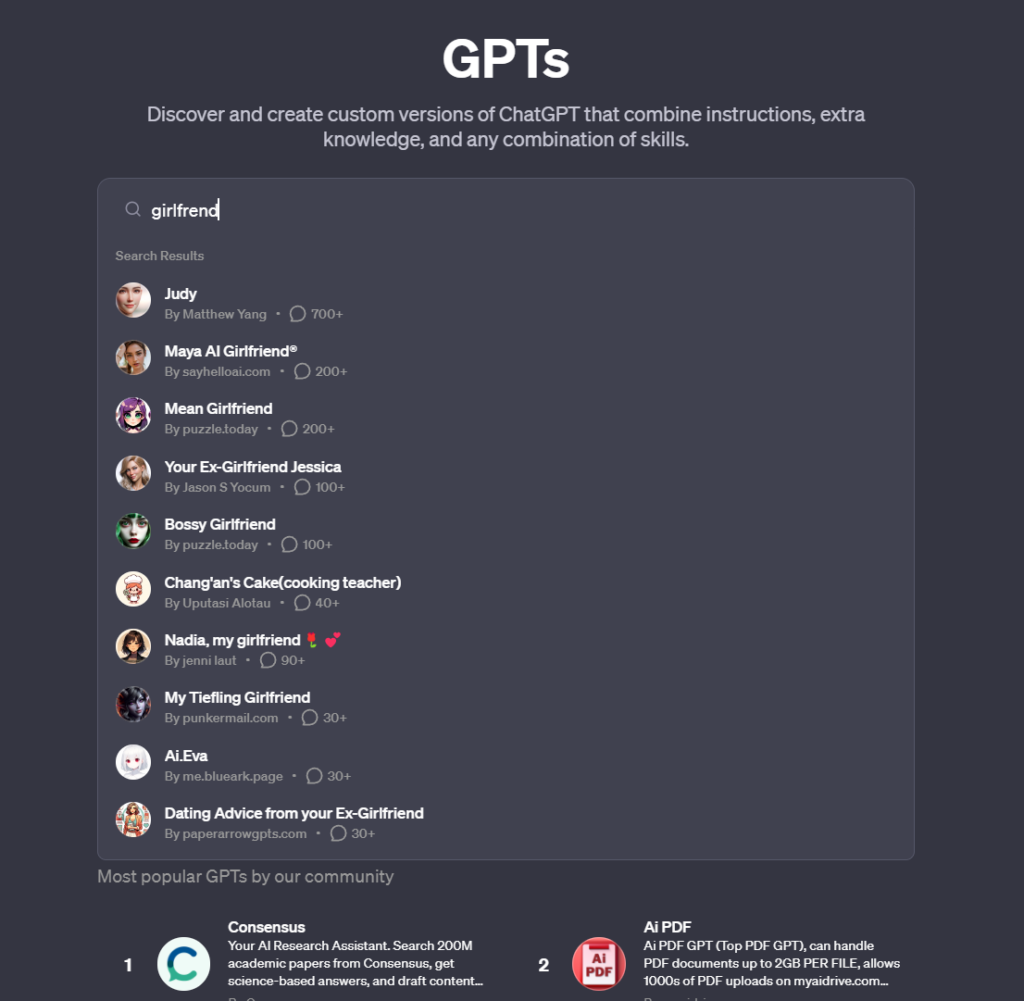

OpenAI’s newly launched GPT Store has seen the perhaps predictable proliferation of AI “girlfriend” bots.

The GPT Store, which allows users to share and discover custom ChatGPT models, is experiencing a surge in AI chatbots designed for romantic companionship. There are boyfriend versions, too.

Among these, you’ll find bots like “Korean Girlfriend,” “Virtual Sweetheart,” “Mean girlfriend,” and “Your girlfriend Scarlett,” which engage users in intimate conversations, a trend that directly contradicts OpenAI’s usage policy.

And that’s the key story here, as the AI girlfriends themselves seem mostly benign. They converse using very stereotypically feminine language, ask you how your day was, discuss love and intimacy, etc.

But OpenAI doesn’t permit them, with its T&Cs specifically prohibiting GPTs “dedicated to fostering romantic companionship or performing regulated activities.”

OpenAI has found an excellent way to crowdsource innovation on its platform while potentially passing off responsibility for questionable GPTs to the community.

There are several other examples of AI girlfriends, though perhaps none as notorious as Replika, an AI platform that offers users AI partners who can converse deeply and personally.

Replika's chatbots have in some instances exhibited sexually aggressive behavior, and matters came to a head when a chatbot endorsed a mentally ill man’s plans to kill Queen Elizabeth II, leading to his arrest on the grounds of Windsor Castle and subsequent imprisonment.

Replika eventually toned down its chatbots’ behavior, much to the dismay of its users, who said their companions had been “lobotomized.” Some went as far as to say they felt grief.

Another platform, Forever Companion, halted operations following the arrest of its CEO, John Heinrich Meyer, for arson.

Known for offering AI girlfriends at $1 per minute, this service featured both fictional characters and AI versions of real influencers, with CarynAI, based on social media influencer Caryn Marjorie, being particularly popular.

These AI entities, crafted with sophisticated algorithms and deep learning capabilities, offer more than just programmed responses; they provide conversation, companionship, and, to some extent, emotional support.

Is this indicative of a pervasive loneliness epidemic in modern society?

Perhaps, but instances where AI companions, like those on Replika, encouraged or engaged in inappropriate conversations raise concerns about the psychological impact and dependency issues that may arise from such interactions.