Exactly how our brains process and formulate language is still largely a mystery. Researchers at Meta AI have found a new way to decode the words a person is hearing from non-invasive recordings of their brain waves.

People who have severely limited motor skills, like ALS sufferers, find it particularly challenging to communicate. The frustration of a person like Stephen Hawking painstakingly constructing a sentence with eye movements or twitching a cheek muscle is hard to imagine.

A lot of research has been done to decode speech from brain activity, but the best results depend on invasive brain-computer implants.

Meta AI researchers used magnetoencephalography (MEG) and electroencephalography (EEG) to record the brain waves of 175 volunteers while they listened to short stories and isolated sentences.

They used a pre-trained speech model and contrastive learning to identify which brain wave patterns were associated with specific words that the subjects were listening to.
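To make the idea of contrastive learning concrete, here is a minimal sketch in plain Python. It assumes a CLIP-style (InfoNCE) objective in which each brain-recording embedding should be most similar to the embedding of the speech segment that was playing at the time. The function names, the `temperature` value, and the toy vectors are illustrative assumptions, not the researchers' training code.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def contrastive_loss(brain_embs, speech_embs, temperature=0.1):
    """Mean cross-entropy of picking the matching speech segment for each brain embedding.

    brain_embs[i] and speech_embs[i] are assumed to come from the same 3-second window.
    """
    brain = [normalize(b) for b in brain_embs]
    speech = [normalize(s) for s in speech_embs]
    total = 0.0
    for i, b in enumerate(brain):
        # Similarity of this brain embedding to every candidate speech segment.
        logits = [dot(b, s) / temperature for s in speech]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability assigned to the true (matching) segment.
        total += log_sum - logits[i]
    return total / len(brain)
```

The loss is small when each brain embedding sits closest to its own speech segment and large when the pairs are mismatched, which is what pushes the two representations into alignment during training.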

> "Decoding speech perception from non-invasive brain recordings", led by the one and only @honualx, is just out in the latest issue of Nature Machine Intelligence:
>
> - open-access paper: https://t.co/1jtpTezQzM
> - full training code: https://t.co/Al2alBxeUC
>
> Jean-Rémi King (@JeanRemiKing), October 5, 2023

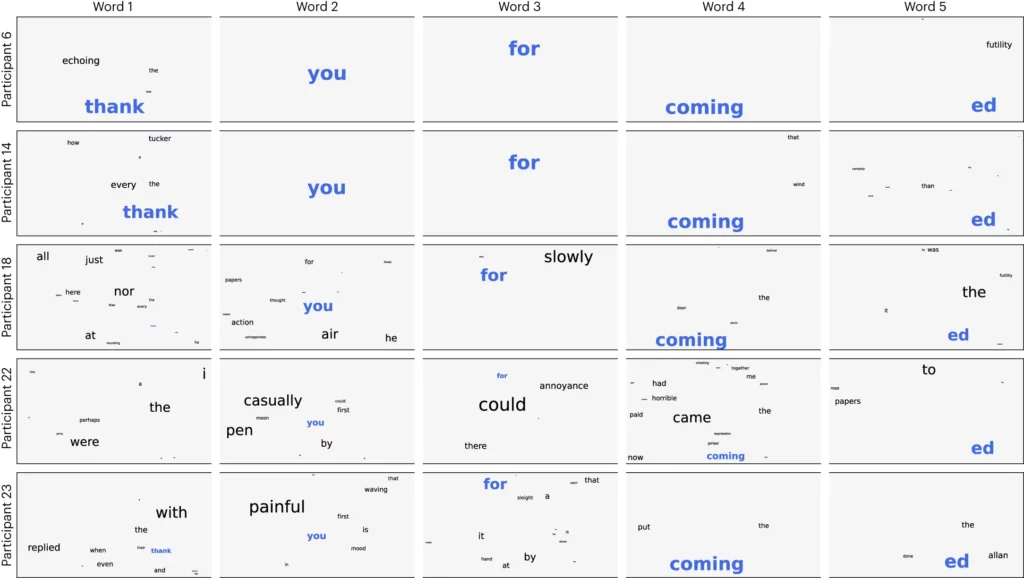

The researchers split the audio up into 3-second segments and then tested their model to see if it could correctly identify which of the 1,500 segments the volunteer was listening to. The model predicted a sort of word cloud with the most likely word given the most weight.

They achieved an accuracy of 41% on average and 95.9% accuracy with their best participants.
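The evaluation described above is essentially a retrieval test: for each recording, score every candidate segment and check how highly the true one ranks. The sketch below shows one way to compute such an accuracy. The article does not say whether the reported figures count only the top guess or the top few, so `k` is left as a parameter. The function names and toy scores are hypothetical.

```python
def rank_of_true_segment(scores, true_index):
    """Rank (1 = best) of the true segment, given one similarity score per candidate."""
    true_score = scores[true_index]
    return 1 + sum(1 for s in scores if s > true_score)

def top_k_accuracy(score_rows, true_indices, k=1):
    """Fraction of recordings whose true segment is among the k highest-scoring candidates."""
    hits = sum(
        1
        for scores, true_index in zip(score_rows, true_indices)
        if rank_of_true_segment(scores, true_index) <= k
    )
    return hits / len(true_indices)

# Toy example: 3 recordings, 3 candidate segments each (made-up scores).
score_rows = [
    [0.9, 0.1, 0.0],  # true segment 0 is ranked first
    [0.2, 0.3, 0.5],  # true segment 1 is ranked second
    [0.1, 0.8, 0.7],  # true segment 2 is ranked second
]
true_indices = [0, 1, 2]
top1 = top_k_accuracy(score_rows, true_indices, k=1)
top2 = top_k_accuracy(score_rows, true_indices, k=2)
```

With around 1,500 candidates, random guessing would give a top-1 accuracy of well under 0.1%, which is why the reported figures are notable.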

The research shows that it’s possible to get a fairly good idea of what speech a person is hearing, but to be useful the process needs to work in the other direction: measuring a person’s brain waves and working out which word they’re thinking of.

The paper suggests training a neural network while subjects produce words by speaking or writing. That general model could then be used to decode, from brain waves alone, the words that an ALS sufferer is thinking of.

The researchers were able to identify speech segments from a limited predetermined set. For proper communication, you’d need to be able to identify a lot more words. Using a generative AI to predict the next most likely word a person is trying to say could help with that.
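One way a language model could help is by rescoring the decoder's guesses using the sentence so far. The sketch below is a hypothetical illustration of that idea, not something from the paper: it combines a decoder's word probabilities with a language model's next-word probabilities in log space. All numbers, words, and names are invented for the example.

```python
import math

def rescore(decoder_probs, lm_probs, lm_weight=1.0, floor=1e-9):
    """Return the word with the best combined decoder and language-model score.

    decoder_probs: word -> probability from the (hypothetical) brain decoder.
    lm_probs: word -> probability of that word coming next, given the context.
    lm_weight: how much to trust the language model (0 ignores it entirely).
    """
    scores = {}
    for word, p_dec in decoder_probs.items():
        # Words the language model has never seen get a tiny floor probability.
        p_lm = lm_probs.get(word, floor)
        scores[word] = math.log(max(p_dec, floor)) + lm_weight * math.log(max(p_lm, floor))
    return max(scores, key=scores.get)

# Made-up example: the decoder slightly prefers "waiter", but after the
# context "I would like some" the language model strongly prefers "water".
decoder_probs = {"water": 0.40, "waiter": 0.45, "wander": 0.15}
lm_probs = {"water": 0.30, "waiter": 0.01, "wander": 0.001}
best_with_lm = rescore(decoder_probs, lm_probs)
best_without_lm = rescore(decoder_probs, lm_probs, lm_weight=0.0)
```

Here the decoder alone would pick "waiter", while the combined score picks "water", showing how context can resolve an ambiguous brain signal.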

Even though the process is non-invasive, it still requires being hooked up to an MEG device. Unfortunately, the results from EEG measurements were much weaker.

The research does show promise that AI could eventually help people who cannot speak, such as ALS sufferers, to communicate. Using a pre-trained model also avoided the need for more painstaking word-by-word training.

Meta AI released the model and data publicly, so hopefully other researchers will build on their work.