New research suggests that ChatGPT can outperform students in specific subject areas, fooling teachers in the process and evading AI detectors.

A study led by Yasir Zaki at New York University Abu Dhabi found that ChatGPT’s responses equaled or exceeded the quality of student responses in nine out of 32 subjects.

Notably, the chatbot scored nearly twice as high as the average student in a course called Introduction to Public Policy.

However, there were chinks in AI’s armor: ChatGPT struggled with tasks requiring ‘critical analysis.’ And let’s not forget that human students still came out ahead in the remaining 23 subjects.

Even so, the gap between AI-generated and human work is narrowing, with potentially dramatic consequences for the education sector and for human knowledge systems as a whole.

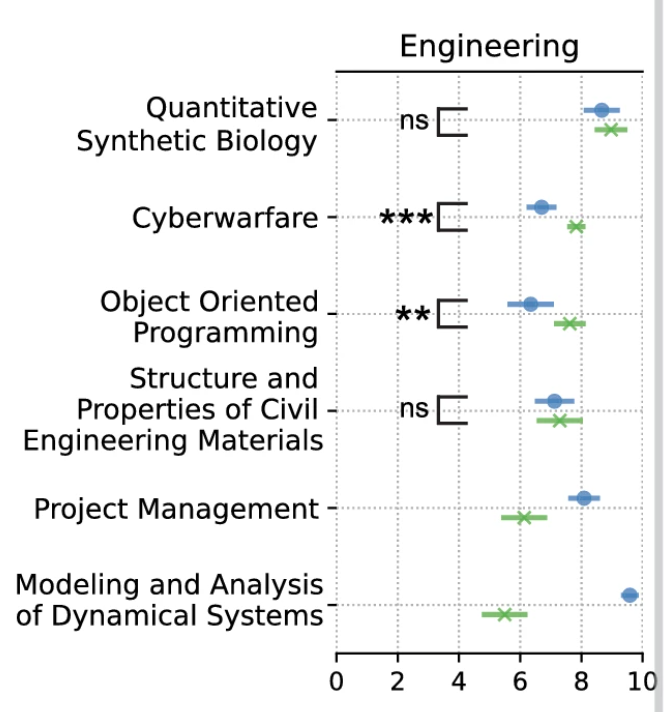

Interestingly, ChatGPT performed fairly poorly on tasks requiring programming or computer science skills and knowledge, but excelled in social research, politics, and engineering.

The 32 subjects spanned disciplines such as computer science, math, sociology, psychology, political science, and business. Additionally, the study surveyed students and educators from five countries (Brazil, India, Japan, the United Kingdom, and the United States) to gauge their perspectives on the technology’s use in education.

The ChatGPT and student answers were assessed by graders who did not know which was which. “These graders were not made aware of the sources of these answers, nor were they aware of the purpose of the grading,” says Zaki.

“ChatGPT performed much better on questions that required information recall, but performed poorly on questions which required critical analysis,” says Zaki.

Further, the researchers found that AI detection services could not reliably distinguish between human and AI-generated answers, with error rates reaching as high as 95% in some cases.

The survey of students’ and teachers’ attitudes towards AI in education revealed a sharp divide over whether using AI constitutes plagiarism.

Thomas Lancaster of Imperial College London weighed in on the study’s implications. According to him, the findings expose flaws in the current approach to university assessments. “If [better answers are] possible [with ChatGPT], it suggests that there are flaws in the assessment design.”

Key findings

This multifaceted study measured ChatGPT’s academic performance and also surveyed students’ and educators’ opinions on the technology.

Here are the main findings:

- Academic performance: ChatGPT’s performance was comparable or superior to university students in 9 out of 32 courses across eight disciplines.

- Detection algorithms are lacking: Current algorithms designed to detect ChatGPT-generated text, including GPTZero, are largely ineffective. They not only misclassify human answers as AI-generated but also fail to identify ChatGPT-generated content as such.

- Student and teacher views: There is an apparent disconnect between students and educators on the ethical implications of using ChatGPT. Students overwhelmingly intend to use the tool for school work, while educators are inclined to treat its use as plagiarism.

- Global perspectives: Surveyed students and educators from five countries (Brazil, India, Japan, the UK, and the USA) showed varying opinions on the ethical use of ChatGPT. For example, while students in India consider its use for homework unethical, those in Brazil deem it ethical. However, 94% of students across both countries intend to use ChatGPT for academic purposes.

AI’s role in education has developed into a swirling debate, with students perhaps best placed to leverage the technology while educators update their understanding.

This has led to some embarrassing situations, such as when a Texas A&M professor refused to grade his students’ papers because he believed they were AI-generated.

One thing is certain: AI will become more firmly embedded in education, for better or worse.