An investigation by The Guardian has shed light on the UK’s pervasive use of AI and complex algorithms in various public sector decision-making processes.

Civil servants from at least eight public sector departments, along with several police forces, have incorporated AI tools into their workflows.

AI tools have assisted crucial decision-making processes across welfare, immigration, and criminal justice. The investigation revealed evidence of misuse and discrimination.

Although algorithmic bias and AI bias are not strictly the same thing, both can produce biased results when applied to real-world cases. Machine learning models can amplify biases already present in the data they are trained on.

According to the report:

- A member of parliament has raised concerns about an algorithm employed by the Department for Work and Pensions (DWP), which is suspected to have incorrectly resulted in numerous individuals losing their benefits.

- The Metropolitan Police’s facial recognition tool has been found to have a higher error rate when identifying Black faces than white faces under certain conditions. Concerns about the technology are not new: one study found that in 2018 only 2% of the matches these systems produced were accurate.

- The Home Office has been using an algorithm to uncover fraudulent marriages for benefits and tax breaks, and the algorithm disproportionately targets people of specific nationalities. Nationals of Albania, Greece, Romania, and Bulgaria may have been unfairly flagged.

AI tools learn patterns from extensive datasets. If datasets are biased or poorly representative of reality, the model inherits those patterns.
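To make that mechanism concrete, here is a deliberately naive sketch. It assumes hypothetical historical records in which two groups have the same underlying fraud rate, but past reviewers flagged group "B" twice as often as group "A". A "model" that simply learns the historical flag rate per group reproduces that disparity exactly; no real departmental system is being described.

```python
from collections import defaultdict

# Hypothetical historical decisions: (group, was_flagged_by_a_reviewer).
# Assumption for illustration: both groups commit fraud at the same rate,
# but past reviewers flagged group "B" twice as often as group "A".
history = (
    [("A", True)] * 10 + [("A", False)] * 90
    + [("B", True)] * 20 + [("B", False)] * 80
)

def train_flag_rates(records):
    """'Learn' the historical flag rate for each group (a naive model)."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in records:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {g: flagged / total for g, (flagged, total) in counts.items()}

model = train_flag_rates(history)

def should_flag(group, threshold=0.15):
    """Flag a new case if its group's learned rate meets a hypothetical threshold."""
    return model[group] >= threshold

print(model)             # {'A': 0.1, 'B': 0.2}
print(should_flag("A"))  # False
print(should_flag("B"))  # True
```

Nothing in the code mentions fairness or intent; the disparity comes entirely from the labels the model was given.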

There are many international examples of AI-related and algorithmic bias, including false facial recognition matches that led to wrongful imprisonment, credit-scoring systems that discriminated against women, and discriminatory tools used in courts and healthcare systems.

The Guardian’s report argues that the UK could be heading for a scandal, with experts concerned about the lack of transparency around, and understanding of, the algorithms used by officials.

Similar scandals have already unfolded in other countries: in the US, some predictive policing platforms have been dismantled, and in the Netherlands, a court ruled against an AI system designed to curb social welfare fraud.

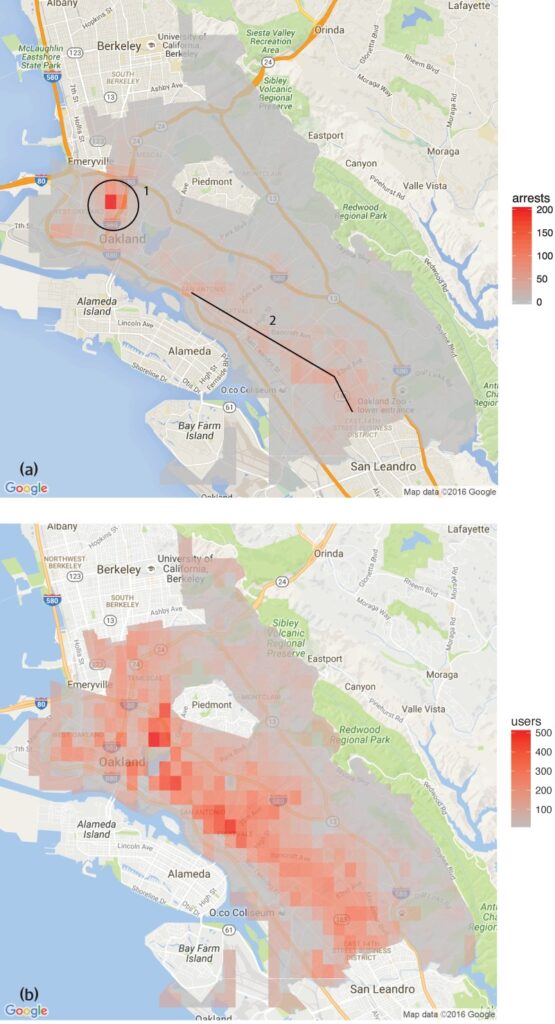

A study conducted by the Human Rights Data Analysis Group found that predictive policing software, applied to data from Oakland, California, would disproportionately target communities of color, perpetuating a cycle of over-policing in these neighborhoods.

This echoes the concerns about the Metropolitan Police’s facial recognition tool, which showed higher error rates when identifying Black faces under certain conditions.

Healthcare offers several examples too, such as AI models designed to help detect skin cancer that perform inconsistently across patient groups. In most of these cases, minority groups and women face the greatest risk.

Shameem Ahmad, Chief Executive of the Public Law Project, stressed the need for immediate action, stating, “AI comes with tremendous potential for social good. For instance, we can make things more efficient. But we cannot ignore the serious risks. Without urgent action, we could sleep-walk into a situation where opaque automated systems are regularly, possibly unlawfully, used in life-altering ways, and where people will not be able to seek redress when those processes go wrong.”

Echoing these concerns, Marion Oswald, a law professor at Northumbria University, said: “There is a lack of consistency and transparency in the way that AI is being used in the public sector. A lot of these tools will affect many people in their everyday lives, for example those who claim benefits, but people don’t understand why they are being used and don’t have the opportunity to challenge them.”

AI’s risks for the public sector

The unchecked application of AI across government departments and police forces raises concerns about accountability, transparency, and bias.

In the case of the DWP, The Guardian’s report uncovered instances where an algorithm led to people’s benefits being suspended without a clear explanation.

MP Kate Osamor said that her Bulgarian constituents lost their benefits after a semi-automated system flagged their cases for potential fraud, and that the process lacked transparency.

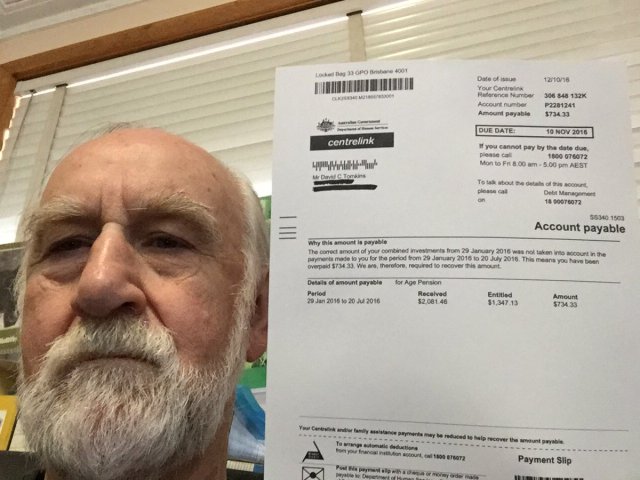

This is reminiscent of the “Robodebt” scandal in Australia, where an automated system incorrectly accused over 400,000 people of welfare fraud, leading to widespread hardship.

The Robodebt scandal, which was subject to investigations by the Commonwealth Ombudsman, legal challenges, and public inquiries, showed how much harm algorithmic decision-making can inflict at scale.

The Australian government agreed to a settlement worth A$1.8 billion in 2021.

AI’s shortcomings aren’t confined to public services. The corporate world has also witnessed failures, particularly in AI-driven recruitment tools.

A notable example is Amazon’s AI recruitment tool, which displayed bias against female candidates. The system was trained on resumes submitted to the company over a 10-year period, most of which came from men, resulting in the AI favoring male candidates.

Three examples of algorithmic bias to be wary of

As AI and algorithmic decision-making become embedded in complex social processes, alarming examples of bias and discrimination are multiplying.

The US healthcare and judicial systems

A 2019 study published in Science revealed that a widely used healthcare algorithm in the US exhibited racial bias. The algorithm was less likely to refer Black patients than equally sick white patients to programs designed to improve care for people with complex medical needs.

The study explains why: “Bias occurs because the algorithm uses health costs as a proxy for health needs. Less money is spent on Black patients who have the same level of need, and the algorithm thus falsely concludes that Black patients are healthier than equally sick White patients.”
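The cost-as-proxy problem can be reproduced with a few lines of code. This is a minimal sketch using made-up patients and spending figures: it assumes two groups with identical distributions of need, but less money spent per unit of need on group "B". For simplicity the "model" is a perfect cost predictor, so any bias comes purely from choosing cost as the target rather than need.

```python
# Hypothetical spending per unit of health need (illustrative figures, not real data).
SPEND_PER_UNIT_NEED = {"A": 1000, "B": 700}

# Hypothetical patients: both groups have exactly the same levels of need.
patients = [
    {"id": f"{group}{i}", "group": group, "need": need}
    for group in ("A", "B")
    for i, need in enumerate([1, 2, 3, 4, 5])
]

for p in patients:
    # The proxy label: cost depends on need AND on how much is spent on the group.
    p["cost"] = p["need"] * SPEND_PER_UNIT_NEED[p["group"]]

def refer_top_k(patients, key, k):
    """Refer the k patients with the highest score under `key`."""
    ranked = sorted(patients, key=lambda p: p[key], reverse=True)
    return [p["id"] for p in ranked[:k]]

by_cost = refer_top_k(patients, "cost", 4)
by_need = refer_top_k(patients, "need", 4)
print(by_cost)  # ['A4', 'A3', 'B4', 'A2']
print(by_need)  # ['A4', 'B4', 'A3', 'B3']
```

Ranking by cost refers three group "A" patients and one group "B" patient, and passes over B3 (need 4) in favor of A2 (need 3). Ranking by need refers both groups equally.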

Another critical example is the use of AI in predictive policing and judicial sentencing. Tools like Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) have been used to assess the risk of defendants reoffending.

Investigations have revealed that these tools can be biased against African-American defendants, for example by wrongly labeling those who did not go on to reoffend as high risk more often than comparable white defendants. This raises concerns about the fairness and impartiality of AI-assisted judicial decision-making.
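One common way to measure this kind of disparity is to compare false positive rates: among people who did not reoffend, what share were labeled high risk? The sketch below uses small, entirely hypothetical audit records for two groups, "X" and "Y"; it is not COMPAS data and not the method of any particular investigation.

```python
# Hypothetical audit records: (group, predicted_high_risk, actually_reoffended).
records = (
    [("X", True, False)] * 1 + [("X", False, False)] * 3 + [("X", True, True)] * 2
    + [("Y", True, False)] * 2 + [("Y", False, False)] * 2 + [("Y", True, True)] * 2
)

def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were labeled high risk."""
    predictions = [pred for g, pred, actual in records if g == group and not actual]
    return sum(predictions) / len(predictions)

for group in ("X", "Y"):
    print(group, false_positive_rate(records, group))
# X 0.25
# Y 0.5
```

Both groups here contain the same number of people who did reoffend, all correctly flagged, yet non-reoffenders in group "Y" are wrongly labeled high risk twice as often.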

Facial recognition in Brazil

The Smart Sampa project in São Paulo, Brazil, marked a significant milestone in integrating AI and surveillance into urban landscapes, something previously typical mainly of China.

The project aims to install up to 20,000 cameras in São Paulo by 2024 and to integrate an equal number of third-party and private cameras into a single video surveillance platform. The network will monitor public spaces, medical facilities, and schools, and scrutinize social media content relevant to public administration.

While Smart Sampa promises enhanced security and improved public services, experts warn that it might exacerbate existing societal issues, particularly structural racism and inequality.

Over 90% of individuals arrested in Brazil based on facial recognition are Black. São Paulo’s initiative risks perpetuating this trend, further marginalizing the Black community, which constitutes 56% of Brazil’s population.

Facial recognition technologies often misidentify people with darker skin, partly because imaging technology underperforms for darker complexions and partly because training datasets are unrepresentative.

Fernanda Rodrigues, a digital rights lawyer, emphasized the potential for false positives and the resulting risk of mass incarceration of Black people.

Rodrigues said, “As well as the risks that the information fed to these platforms may not be accurate and the system itself might fail, there is a problem that precedes technology implications, which is racism.”

“We know the penal system in Brazil is selective, so we can conclude that [use of surveillance with facial recognition] is all about augmenting the risks and harms to this population,” she added.

Privacy concerns are also paramount. Critics argue that Smart Sampa could infringe upon fundamental human rights, including privacy, freedom of expression, assembly, and association. The project’s opacity compounds these concerns, and the public had limited involvement during the consultation phase.

Smart Sampa has not gone unchallenged. Public prosecutors have investigated, and human rights organizations have initiated legal actions to halt the technology.

The Dutch System Risk Indication (SyRI)

In 2020, the District Court of The Hague scrutinized the Dutch government’s use of System Risk Indication (SyRI), an algorithm designed to detect potential social welfare fraud.

SyRI was intended to make identifying welfare fraud more efficient by aggregating data from various government databases and applying risk indicators to generate profiles of potential fraudsters. However, questionable decisions and a lack of transparency raised alarm bells, leading to a legal challenge by a coalition of civil society organizations.
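The general pattern described here (merge records about the same person from several databases, then score the merged profile against risk indicators) can be sketched as follows. SyRI's actual risk model was not made public, so every database, field, indicator, weight, and threshold below is hypothetical and chosen only to illustrate the technique.

```python
# Hypothetical source databases, keyed by a made-up person ID.
tax_records = {"p1": {"declared_income": 0}, "p2": {"declared_income": 18000}}
benefit_records = {"p1": {"receives_benefit": True}, "p2": {"receives_benefit": True}}
utility_records = {"p1": {"water_use_m3": 5}, "p2": {"water_use_m3": 60}}

# Hypothetical risk indicators: (name, test on a merged profile, weight).
INDICATORS = [
    ("no_declared_income", lambda p: p.get("declared_income", 0) == 0, 2),
    ("very_low_water_use", lambda p: p.get("water_use_m3", 0) < 10, 3),
]
FLAG_THRESHOLD = 4  # hypothetical cut-off

def merge_profiles(*sources):
    """Combine per-person fields from several databases into one profile each."""
    profiles = {}
    for source in sources:
        for person_id, fields in source.items():
            profiles.setdefault(person_id, {}).update(fields)
    return profiles

def risk_score(profile):
    """Sum the weights of every indicator the profile triggers."""
    return sum(weight for _, test, weight in INDICATORS if test(profile))

def fired_indicators(profile):
    """List which indicators fired, i.e. the explanation a person would need to see."""
    return [name for name, test, _ in INDICATORS if test(profile)]

profiles = merge_profiles(tax_records, benefit_records, utility_records)
flagged = [pid for pid, prof in profiles.items() if risk_score(prof) >= FLAG_THRESHOLD]
print(flagged)                          # ['p1']
print(fired_indicators(profiles["p1"]))  # ['no_declared_income', 'very_low_water_use']
```

Each indicator may look innocuous on its own, which is why disclosing the indicators and weights (as `fired_indicators` does here) matters for anyone trying to challenge a flag.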

The plaintiffs argued that SyRI violated various international and European human rights laws. They also questioned the legality, necessity, and proportionality of the algorithm’s application.

The Hague’s District Court ruled that the legislation governing SyRI and its application failed to meet the necessary human rights standards. The court highlighted the lack of transparency and the potential for discriminatory effects, emphasizing the need for proper legal safeguards when implementing such technologies.

The case has been hailed as one of the first to address the human rights impact of AI in the public sector, highlighting the need for transparency, accountability, and robust legal frameworks.

Transparent and fair testing of tools is paramount

In the wake of recent legal challenges and public scrutiny surrounding algorithmic decision-making in government operations, the call for transparent testing of AI tools has become increasingly urgent.

Ensuring accountability, preventing discrimination, and maintaining public trust hinge on implementing rigorous and open testing protocols.

Transparent testing allows for a comprehensive understanding of how these algorithms function, the data they use, and the decision-making processes they follow. This is crucial in identifying potential biases, inaccuracies, or discriminatory practices embedded within the system.
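As one illustration of what an open test might check, the sketch below computes the rate of a favorable outcome (for example, a claim being approved) for each group and the ratio of the lowest rate to the highest. It flags ratios under 0.8, the "four-fifths" rule of thumb from US employment-selection guidance; that threshold is an assumption borrowed for illustration, not a UK standard, and the decisions are hypothetical.

```python
from collections import Counter

def approval_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns approval rate per group."""
    totals, approved = Counter(), Counter()
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical outcomes of an automated decision tool for two groups.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'A': 0.6, 'B': 0.3}
print(ratio)  # 0.5
print("review needed" if ratio < 0.8 else "within rule of thumb")  # review needed
```

A single ratio cannot prove or rule out discrimination, but publishing checks like this, alongside error rates per group, gives outsiders something concrete to scrutinize.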

However, rigorous testing procedures sit uneasily with AI’s promise of rapid efficiency gains.

In the UK’s case, Prime Minister Rishi Sunak’s ‘pro-innovation’ stance may not leave enough room for comprehensive testing. Moreover, there are few, if any, agreed processes for testing AI tools before deploying them in public services.

The same pattern is playing out worldwide. The rapid adoption of AI should not come at the cost of scrutiny and analysis.