A controversial audio recording surfaced on Facebook shortly before Slovakia's election.

It featured voices purportedly belonging to Michal Šimečka, the leader of the Progressive Slovakia party, and Monika Tódová of the Denník N newspaper.

The conversation revolved around election rigging and buying votes from the Roma minority, sparking corruption concerns and confusion across social media.

Both Šimečka and Denník N promptly denounced the recording as fake. The fact-checking service of Agence France-Presse (AFP) identified signs of AI manipulation in the audio, and international news outlets subsequently reported its findings.

Because the recording appeared during the 48-hour media silence before the election, it was difficult to debunk in time.

And because it was audio rather than video, it also slipped past Meta's manipulated media policy, which primarily targets manipulated videos.

The Smer-SD party, led by former Slovak prime minister Robert Fico, won 23% in Saturday's vote, beating the centrist Progressive Slovakia at 18%.

Ahead of the vote, the EU’s digital chief, Věra Jourová, highlighted the susceptibility of European elections to large-scale manipulative tactics, hinting at external influences such as those from Moscow.

Now, nations are on high alert for AI-driven election interference, which seems inevitable. The question is less whether it will happen than whether we can contain it and how much damage it will do. Poland, facing an imminent election, will be the next test.

Major elections are also on the horizon in 2024 and 2025, including in the US and India next year.

Fact-checkers like AFP emphasize that AI is rapidly evolving into a potent tool to destabilize elections, and we’re ill-equipped to counter it. Veronika Hincová Frankovská from the fact-checking organization Demagog commented, “We’re not as ready for it as we should be.”

Frankovská's team traced the origin of the fake Slovak audio and consulted experts before running it through an AI speech classifier from ElevenLabs to assess whether it was authentic.

Despite advances in AI, we still rely largely on humans to spot deep fakes.

AI deep fakes are becoming a critical issue

AI's role in spreading disinformation is growing, and proving that AI-generated images, videos, and recordings are fake is a Herculean task.

Most social media platforms already prohibit deceptive fake content. Ben Walter, representing Meta, stated, "Our Community Standards apply to all content… and we will take action against content that violates these policies."

In practice, however, stopping deep fakes from spreading is proving intractable, and measures such as AI watermarks have so far been woefully ineffective.

As Poland gears up for its election, there’s apprehension over the possible influx of AI-generated content, and disinformation is already causing unrest.

Jakub Śliż from the Polish fact-checking group, the Pravda Association, said, “As a fact-checking organization, we don’t have a concrete plan of how to deal with it.”

Where election results hinge on tight margins and bitter policy debates, as they did in Slovakia, the impact of AI-generated disinformation could be grave. What's more, past incidents of algorithmically amplified disinformation, such as the Cambridge Analytica scandal, suggest that some stakeholders might be complicit in these tactics.

The 2016 US presidential election was already rife with misinformation propelled by extremist activists, foreign interference, and deceptive news outlets.

By 2020, unfounded allegations of electoral fraud had become widespread, fueling an anti-democratic movement that sought to overturn the results.

AI-driven disinformation doesn’t just risk deceiving the public; it also threatens to overwhelm an already fragile information environment, creating an air of falsehood, distrust, and deception.

“Degrees of trust will go down, the job of journalists and others who are trying to disseminate actual information will become harder,” states Ben Winters, a senior representative at the Electronic Privacy Information Center.

New tools for old tactics

Picture a world where manipulative content is produced at such a negligible cost and with such ease that it becomes commonplace.

Tech-enabled misinformation is not a new phenomenon, but AI tools are perfectly positioned to turbocharge such tactics.

There have been plenty of early skirmishes indicative of what's to come. Earlier this year, an AI-generated image of an explosion at the Pentagon briefly rattled stock markets, if only for a few minutes before social media users exposed the viral image as fake.

AI-rendered visuals seemingly showing Donald Trump confronting police gained traction online, and the Republican National Committee aired a wholly AI-generated advertisement envisioning catastrophes if Biden were re-elected.

Another viral deep fake came from the campaign of Florida Governor Ron DeSantis, a candidate for the Republican presidential nomination, which released altered images showing former President Donald Trump embracing Dr. Anthony Fauci.

X (then Twitter) added a tag to the original Tweet, labeling it as AI-manipulated.

The original post, from the @DeSantisWarRoom account on June 5, 2023, read: "Donald Trump became a household name by FIRING countless people *on television*. But when it came to Fauci…"

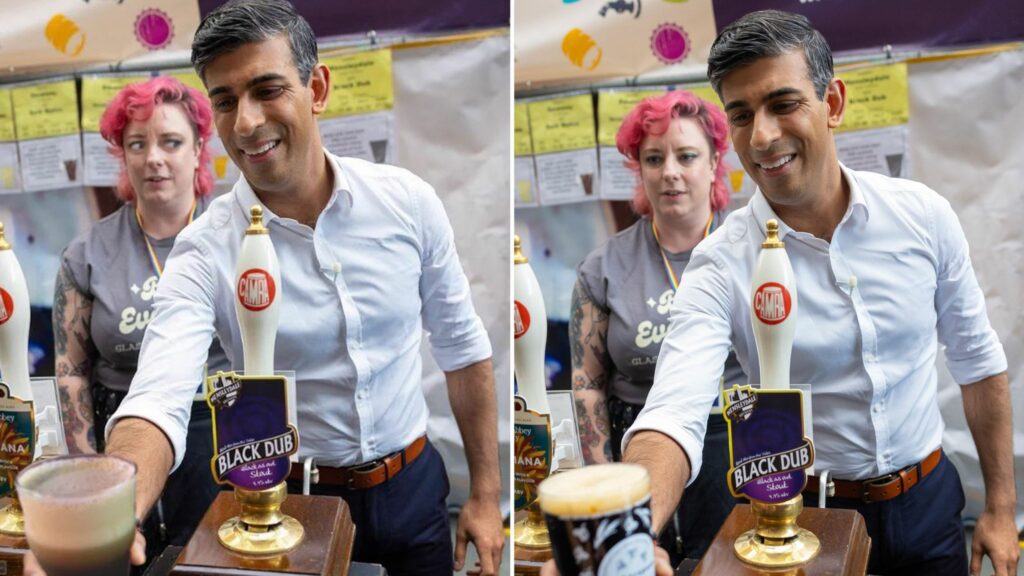

In the UK, an image of Prime Minister Rishi Sunak was doctored to show him pouring a substandard pint of beer, much to the disgruntlement of X users, before the image was exposed as fake.

Away from the political arena, people have been scammed by fraudsters wielding deep fakes impersonating their friends or family members.

Several women and even young girls have been subjected to deep fake "sextortion," in which fraudsters blackmail victims with fake explicit images, something the FBI warned about earlier in the year.

Voice clones of Omar al-Bashir, the former leader of Sudan, recently appeared on TikTok, fanning the flames in a country embroiled in civil war.

The list of examples goes on and on, and AI fakes now seem to have mastered text, images, video, and audio.

AI lowers the bar for disinformation

AI-enhanced tools amplify traditional election interference tactics, both in terms of quality and scale.

As the Slovak election showed, AI can produce convincing content in a target language. Josh Goldstein from Georgetown University's Center for Security and Emerging Technology points out that this allows foreign actors to generate persuasive material in whatever language they need.

He stated, “AI-generated images and videos can be created much more quickly than factcheckers can review and debunk them.”

There's also the potential to exploit AI for targeted mass disinformation. For example, two far-right activists used robocalls (automated phone calls placed en masse) to spread election misinformation to thousands of Black voters last year.

The US gears up for AI election disinformation

With several high-profile elections due in 2024, the influence of AI-powered disinformation campaigns is set to be tested in earnest.

In response to AI's growing influence, tech giants like Google have introduced measures such as requiring political ads that use AI-altered content to carry a clear disclosure.

Meanwhile, Congress is deliberating over AI regulations, and the Federal Election Commission (FEC) is considering rules on the use of AI deep fakes in political campaigns and advertising.

On August 10th, the FEC voted unanimously to open a 60-day public comment period on the issue, inviting input from experts, stakeholders, and the general public.

As discussions progressed within the FEC, Lisa Gilbert, Vice President of Public Citizen, highlighted the need to revisit how laws surrounding “fraudulent misrepresentation” might intersect with AI deep fakes.

As we hurtle towards 2024, new AI models from Meta, OpenAI, and other developers are giving people the ability to generate strikingly lifelike content, so the problem is only set to get harder.