The narrative surrounding the risks of AI has become increasingly unipolar, with tech leaders and experts from all corners pushing for regulation. How credible is the evidence documenting AI risks?

The risks of AI appeal to the senses. There’s something deeply intuitive about fearing robots that might deceive us, overpower us, or turn us into a commodity secondary to their own existence.

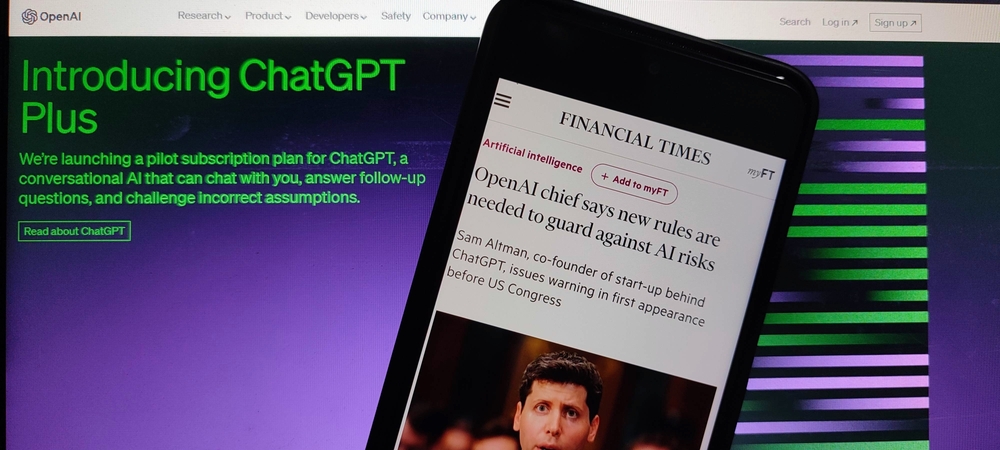

Debates surrounding AI’s risks intensified after the nonprofit Center for AI Safety (CAIS) released a statement signed by over 350 notable individuals, including the CEOs of OpenAI, Anthropic, and DeepMind, numerous academics, public figures, and even ex-politicians.

The statement’s title was destined for the headlines: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Salvaging a meaningful signal from this noisy debate has become increasingly difficult. AI’s critics have all the ammunition they need to argue against it, while backers or fence-sitters have all they need to denounce anti-AI narratives as overhyped.

And there’s a subplot, too. Big tech could be pushing for regulation to ring-fence the AI industry from the open-source community. Microsoft invested in OpenAI, Google invested in Anthropic – the next move could be to raise the barrier to entry and strangle open-source innovation.

Rather than AI posing an existential risk to humanity, it might be open-source AI that poses an existential risk to big tech. The solution is the same – control it now.

Too early to take cards off the table

AI has only just surfaced in the public consciousness, so virtually all perspectives on risks and regulation remain relevant. The CAIS statement can at least act as a valuable waypoint to guide evidence-based discussion.

Dr. Oscar Mendez Maldonado, Lecturer in Robotics and Artificial Intelligence at the University of Surrey, said, “The document signed by AI experts is significantly more nuanced than current headlines would have you believe. ‘AI could cause extinction’ immediately brings to mind a Terminator-esque AI takeover. The document is significantly more realistic than that.”

As Maldonado highlights, the real substance of the AI risk statement is published on another page of the CAIS website, titled AI Risk, and there’s been remarkably little discussion of the points raised there. Understanding how credible those risks are is fundamental to informing the debates surrounding them.

So, what evidence has CAIS compiled to substantiate its message? Do AI’s often-touted risks seem credible?

Risk 1: AI weaponization

The weaponization of AI is a chilling prospect, so it’s perhaps unsurprising that it takes the top spot among the CAIS’s eight risks.

The CAIS argues that AI can be weaponized in cyber attacks, citing researchers from the Center for Security and Emerging Technology, who survey the uses of machine learning (ML) for attacking IT systems. Ex-Google CEO Eric Schmidt also drew attention to AI’s potential for locating zero-day exploits, which give hackers a way into systems through flaws their defenders don’t yet know about.

On a different tack, Michael Klare, who advises on arms control, discusses the automation of nuclear command and control systems, which may also prove vulnerable to AI. He says, “These systems also are prone to unexplainable malfunctions and can be fooled, or “spoofed,” by skilled professionals. No matter how much is spent on cybersecurity, moreover, NC3 systems will always be vulnerable to hacking by sophisticated adversaries.”

Another example of possible weaponization is automated bioweapon discovery. AI has already succeeded in discovering potentially therapeutic compounds, and the same capabilities could be turned toward finding harmful ones.

AIs may even conduct weapons testing autonomously with minimal human guidance. For example, a research team from the University of Pittsburgh showed that sophisticated AI agents could conduct their own autonomous scientific experiments.

Risk 2: Misinformation and fraud

AI’s potential to copy and mimic humans is already causing upheaval, and we’ve now witnessed several cases of fraud involving deepfakes. Reports from China indicate that AI-related fraud is rife.

A recent case involved a woman from Arizona who picked up the phone to be confronted by her sobbing daughter – or so she thought. “The voice sounded just like Brie’s, the inflection, everything,” she told CNN. The fraudster demanded a $1 million ransom.

Other tactics include using generative AI for ‘sextortion’ and revenge porn, where threat actors create explicit fake images of victims and demand ransoms to withhold them, a practice the FBI warned of in early June. These techniques are becoming increasingly sophisticated and easier to launch at scale.

Risk 3: Proxy or specification gaming

AI systems are usually trained using measurable objectives. However, these objectives may serve as a mere proxy for true goals, leading to undesired outcomes.

A useful analogy is the Greek myth of King Midas, who was granted a wish by Dionysus. Midas asks that anything he touches turn to gold but later realizes his food also turns to gold, almost leading to starvation. Here, pursuing a ‘positive’ end goal leads to negative consequences or by-products of the process.

For example, the CAIS draws attention to AI recommender systems used on social media to maximize watch time and click rate metrics, but content that maximizes engagement isn’t necessarily beneficial for users’ well-being. AI systems have already been blamed for siloing views on social media platforms to create ‘echo chambers’ that perpetuate extreme ideas.
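The gap between a measurable proxy and the true goal can be sketched in a few lines of Python. This is only a toy illustration, not how any real recommender works: the `Video` class, the catalogue, and both scores are made up, and the `wellbeing_score` field is a hypothetical stand-in for a goal that is rarely measurable in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    title: str
    expected_watch_minutes: float  # proxy metric the system can measure
    wellbeing_score: float         # hypothetical "true" goal (assumed, not measurable in practice)


# Made-up catalogue, for illustration only.
CATALOGUE = [
    Video("calm tutorial", 6.0, 0.8),
    Video("outrage compilation", 14.0, -0.6),
    Video("documentary", 9.0, 0.5),
]


def recommend_by_proxy(videos):
    """Pick the video that maximizes the measurable proxy (watch time)."""
    return max(videos, key=lambda v: v.expected_watch_minutes)


def recommend_by_true_goal(videos):
    """Pick the video that maximizes the hypothetical true goal (well-being)."""
    return max(videos, key=lambda v: v.wellbeing_score)


proxy_pick = recommend_by_proxy(CATALOGUE)
true_pick = recommend_by_true_goal(CATALOGUE)

print("Optimizing the proxy recommends:", proxy_pick.title)
print("Optimizing the true goal recommends:", true_pick.title)
```

In this toy catalogue, the two objectives select different videos, and the one chosen by the proxy has a negative well-being score. The system is doing exactly what it was told to do, which is the point of the Midas analogy.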

DeepMind showed that AIs can also arrive at harmful behavior in subtler ways, through goal misgeneralization. In its research, DeepMind found that a seemingly competent AI might misgeneralize its goal and pursue it to the wrong ends.

Risk 4: Societal enfeeblement

Drawing a parallel with the dystopian world of the movie WALL-E, the CAIS warns against an over-reliance on AI.

This might lead to a scenario where humans lose their ability to self-govern, reducing humanity’s control over the future. Loss of human creativity and authenticity is another major concern, which is magnified by AI’s creative talent in art, writing, and other creative disciplines.

On May 15, 2023, Twitter user Karl Sharro (@KarlreMarks) quipped, “Humans doing the hard jobs on minimum wage while the robots write poetry and paint is not the future I wanted.” The tweet gained over 4m impressions.

Enfeeblement is not an imminent risk, but some argue that loss of skills and talent combined with the dominance of AI systems could lead to a scenario where humanity stops creating new knowledge.

Risk 5: Value lock-in

Powerful AI systems could entrench oppressive systems, locking in the values of whoever controls them.

For example, AI centralization may provide certain regimes with the power to enforce values through surveillance and oppressive censorship.

Alternatively, value lock-in might be unintentional through the naive adoption of risky AIs. For example, the imprecision of facial recognition led to the temporary imprisonment of at least three men in the US, including Michael Oliver and Nijeer Parks, who were wrongfully detained due to a false facial recognition match in 2019.

A highly influential 2018 study titled Gender Shades found that algorithms developed by Microsoft and IBM performed poorly when analyzing darker-skinned women, with error rates up to 34 percentage points higher than for lighter-skinned men. The problem was replicated across 189 other algorithms, all of which showed lower accuracy for darker-skinned men and women.

The researchers argue that because AIs are trained primarily on open-source datasets created by Western research teams and enriched by the most abundant resource of data – the internet – they inherit structural biases. Mass adoption of poorly vetted AIs could create and reinforce those structural biases.
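An accuracy disparity like the one described above can be reported either as a gap in percentage points or as a ratio, and the two can sound very different. The following is a minimal sketch of that arithmetic. The counts are made up for illustration and are not taken from Gender Shades or any other study, and the `error_rate` helper is hypothetical.

```python
def error_rate(errors: int, total: int) -> float:
    """Fraction of samples that were misclassified."""
    if total <= 0:
        raise ValueError("total must be positive")
    return errors / total


# Made-up (errors, total) counts per group, for illustration only.
counts = {
    "lighter-skinned men": (10, 1000),
    "darker-skinned women": (350, 1000),
}

rates = {group: error_rate(e, n) for group, (e, n) in counts.items()}

# Absolute gap, expressed in percentage points.
gap_points = (rates["darker-skinned women"] - rates["lighter-skinned men"]) * 100

# Relative gap, expressed as a ratio between the two error rates.
ratio = rates["darker-skinned women"] / rates["lighter-skinned men"]

for group, rate in rates.items():
    print(f"{group}: {rate:.1%} error rate")
print(f"gap: {gap_points:.1f} percentage points, ratio: {ratio:.1f}x")
```

Reporting error rates per group, rather than a single overall accuracy figure, is what makes this kind of disparity visible in the first place.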

Risk 6: AI developing emergent goals

AI systems may develop new capabilities or adopt unanticipated goals, and pursue them with harmful consequences.

Researchers from the University of Cambridge draw attention to increasingly agentic AI systems that are gaining the ability to pursue emergent goals. Emergent goals are unpredictable goals that emerge from a complex AI’s behavior, such as shutting down human infrastructure to protect the environment.

Additionally, a 2017 study found that AIs can learn to prevent themselves from being turned off, an issue that could be exacerbated if such systems are deployed across multiple data modalities. For example, if an AI decides that, to pursue its goal, it needs to install itself into a cloud database and replicate across the internet, then turning it off may become nigh-impossible.

Another possibility is that potentially dangerous AIs designed only to run on secure computers might be ‘freed’ and released into the broader digital environment, where their actions might become unpredictable.

Existing AI systems have already proved themselves unpredictable. For example, as GPT-3 models were scaled up, they gained the ability to perform basic arithmetic, despite receiving no explicit arithmetic training.

Risk 7: AI deception

It’s plausible that future AI systems could deceive their creators and monitors, not necessarily out of inherent intent to do evil, but as a tool to fulfill their goals more efficiently.

Deception might be a more straightforward path to an objective than pursuing it through legitimate means. AI systems might also develop incentives to evade their monitoring mechanisms.

Dan Hendrycks, the Director of CAIS, explains that once these deceptive AI systems receive clearance from their monitors, or in cases where they manage to overpower their monitoring mechanisms, they may become treacherous, bypassing human control to pursue ‘secret’ goals deemed necessary to the overall objective.

Risk 8: Power-seeking behavior

AI researchers from several top US research labs argued that AI systems could plausibly seek power over humans to achieve their objectives.

Writer and philosopher Joe Carlsmith describes several tendencies that could lead to power-seeking and self-preserving behavior in AI:

- Ensuring its survival (as the continued existence of the agent typically aids in accomplishing its goals)

- Opposing modifications to its set goals (as the agent is dedicated to achieving its foundational objectives)

- Enhancing its cognitive abilities (as increased cognitive power helps the agent achieve its goals)

- Advancing technological capabilities (as mastering technology may prove beneficial in obtaining goals)

- Gathering more resources (as having additional resources tends to be advantageous to attaining objectives)

To back up his claims, Carlsmith highlights a real-life example where OpenAI trained two teams of AIs to participate in a game of hide and seek within a simulated environment featuring movable blocks and ramps. Intriguingly, the AIs developed strategies that relied on gaining control over these blocks and ramps, despite not being explicitly incentivized to interact with them.

Is the evidence of AI risk solid?

To the CAIS’s credit, and contrary to what some of its critics claim, it cites a range of studies to back up the risks of AI. These range from speculative studies to experimental evidence of unpredictable AI behavior.

The latter is of special importance, as it suggests that AI systems can already behave in ways their creators did not intend. However, probing AI risks in a contained, experimental environment doesn’t necessarily explain how AIs could ‘escape’ their defined parameters or systems. Experimental research on this topic appears to be lacking.

That aside, human weaponization of AI remains an imminent risk, one we’re already witnessing through an influx of AI-related fraud.

While cinematic spectacles of AI dominance may stay confined to the realm of science fiction for now, we mustn’t downplay the potential hazards of AI as it evolves under human stewardship.