Is AI gaining some level of consciousness? How will we know if and when it does?

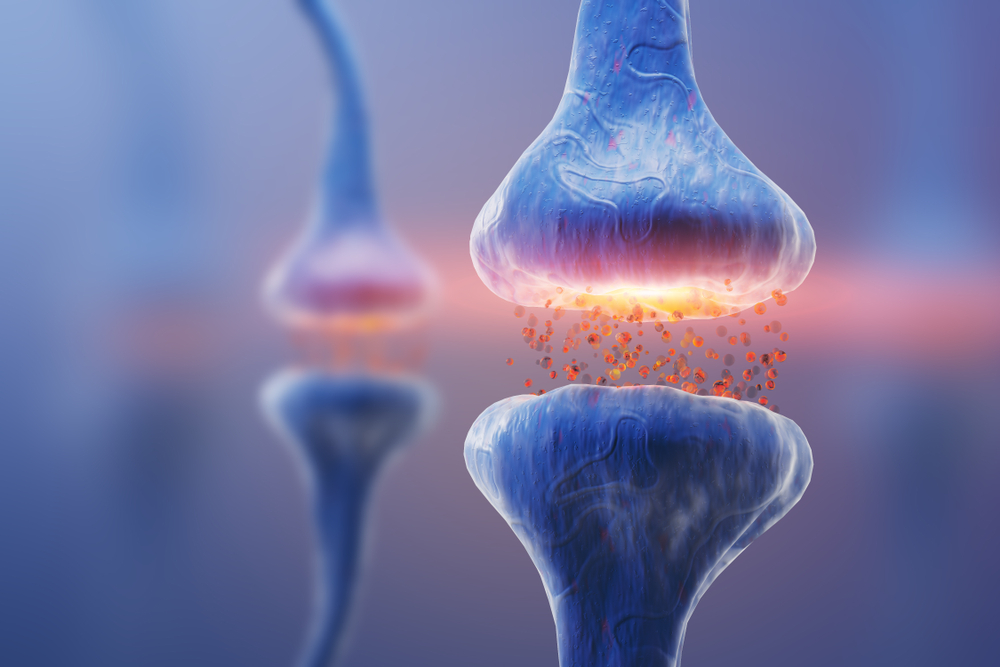

Large language models (LLMs) can engage in realistic conversations and generate text on diverse subjects, igniting debates about whether and when they might become sentient or conscious – two concepts that, though often linked, are distinct.

Sentience is chiefly concerned with sensation and awareness, whereas consciousness is more complex and overarching.

Earlier this year, after testing OpenAI’s GPT-4, Microsoft indicated that the model showed “sparks” of “general intelligence” – a term used to describe flexible, adaptable AI with high cognitive abilities.

Even more controversially, Blake Lemoine, a former Google engineer, claimed Google’s LaMDA AI had reached a level of sentience. Lemoine said of LaMDA, “Google might call this sharing proprietary property. I call it sharing a discussion that I had with one of my coworkers” – that ‘coworker’ being the AI system which expressed ‘fear’ about ‘being switched off.’

In an attempt to ground the debate surrounding AI consciousness, an interdisciplinary team of researchers in philosophy, computing, and neuroscience conducted a comprehensive examination of today’s most cutting-edge AI systems.

The study, led by Robert Long and his colleagues at the Center for AI Safety (CAIS), a San Francisco-based nonprofit, scrutinized multiple theories of human consciousness. They established a list of 14 “indicator properties” that a conscious AI would likely exhibit.

CAIS is the same nonprofit that put forward eight headline-making potential risks of AI in June.

After analyzing their results, the team ultimately found that the most complex models fall short of virtually every test of consciousness they were subjected to.

Researchers evaluated several AI models, including DeepMind’s Adaptive Agent (AdA) and PaLM-E, described as an embodied robotic multimodal LLM.

By comparing these AIs to the 14 indicators, the researchers found no substantial evidence indicating that any AI models possess consciousness.

More about the study

This detailed interdisciplinary study analyzed numerous theories of consciousness – which remains a poorly defined concept across the scientific literature – and applied them to current AI models.

While the study failed to determine that current AIs possess consciousness, it lays the groundwork for future analysis, essentially providing a ‘checklist’ researchers can refer to when analyzing the capabilities of their AIs.

Understanding consciousness is also vital for informing public debate surrounding AI.

Consciousness is not a binary concept, however, and it’s still exceptionally difficult to determine the boundaries.

Some researchers believe that consciousness will develop spontaneously once AI approaches a ‘singularity,’ which essentially marks the point where the complexity of AI systems outstrips human cognition.

Here are the 14 indicators of consciousness the researchers assessed:

Recurrent processing theory (RPT)

Recurrent processing theory focuses on the temporal aspects of information processing.

In the context of consciousness, it emphasizes how information is maintained and updated in a loop-like manner over time.

This is considered critical for building the richness of conscious experiences.

RPT-1: Input modules using algorithmic recurrence

Algorithmic recurrence here refers to the repetitive processing of information cyclically.

Think of it like a computer program loop that continually updates its data based on new inputs.
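To make the loop analogy concrete, here is a minimal Python sketch of a recurrent update. It is a toy illustration only, not code from the study: the function names, the `decay` parameter, and the blending rule are all assumptions chosen for clarity. The point is simply that each new state depends on both the previous state and the latest input.

```python
def recurrent_update(state: float, new_input: float, decay: float = 0.5) -> float:
    """Blend the previous state with the new input (hypothetical leaky update)."""
    return decay * state + (1.0 - decay) * new_input


def process_stream(inputs, decay: float = 0.5):
    """Feed inputs through the loop one at a time, carrying the state forward."""
    state = 0.0
    history = []
    for x in inputs:
        # The output of the previous step is fed back in as part of this step.
        state = recurrent_update(state, x, decay)
        history.append(state)
    return history


if __name__ == "__main__":
    print(process_stream([1.0, 1.0, 0.0]))
```

Running a loop like this is, of course, nowhere near sufficient for consciousness. It only shows what “recurrence” means at the level of an algorithm.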

RPT-2: Input modules generating organized, integrated perceptual experiences

Like humans and other animals, the system doesn’t just receive sensory information – it integrates it into a coherent and unified ‘picture’ that can be perceived as a single experience.

Global workspace theory (GWT)

The second set of indicators draws on global workspace theory, which proposes that consciousness arises from the interactions among various cognitive modules in a ‘global workspace.’

This workspace serves as a sort of cognitive stage where different modules can ‘broadcast’ and ‘receive’ information, enabling a unified, coherent experience.

GWT-1: Multiple specialized systems operating in parallel

This essentially means that an AI system should have multiple specialized ‘mini-brains,’ each responsible for different functions (like memory, perception, and decision-making), and they should be able to operate simultaneously.

GWT-2: Limited capacity workspace

This idea relates to how humans can only focus on a limited amount of information at any given time.

Similarly, an AI system would need to have selective focus – essentially, an ability to determine the most essential information to ‘pay attention to.’

GWT-3: Global broadcast

Information that reaches the global workspace must be available for all cognitive modules so each ‘mini-brain’ can use that information for its specialized function.

GWT-4: State-dependent attention

This is a more advanced form of focus. The AI system should be able to shift its attention based on its current state or task.

For example, it may prioritize sensory data when navigating a room but shift to problem-solving algorithms when faced with a puzzle.
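The limited-capacity workspace (GWT-2) and global broadcast (GWT-3) ideas can be sketched in a few lines of Python. This is a hedged toy example, not the researchers’ method: the `Module` class, the salience scores, and the `capacity` value are all hypothetical names and numbers introduced purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """A hypothetical specialized 'mini-brain' that can receive broadcasts."""
    name: str
    received: list = field(default_factory=list)

    def receive(self, items):
        self.received = list(items)


def select_for_workspace(candidates: dict, capacity: int) -> list:
    """Keep only the `capacity` most salient items (the limited workspace)."""
    ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)
    return [item for item, _ in ranked[:capacity]]


def broadcast(workspace: list, modules: list) -> None:
    """Make the workspace contents available to every module."""
    for module in modules:
        module.receive(workspace)


if __name__ == "__main__":
    modules = [Module("memory"), Module("perception"), Module("planning")]
    # Salience scores are made-up numbers for the example.
    candidates = {"loud noise": 0.9, "wall colour": 0.1, "obstacle ahead": 0.8}
    workspace = select_for_workspace(candidates, capacity=2)
    broadcast(workspace, modules)
    for module in modules:
        print(module.name, module.received)
```

Here only two of the three candidate items fit in the workspace, and every module then sees the same two items. State-dependent attention (GWT-4) would correspond to changing the salience scores depending on the current task.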

Computational higher-order theories (HOT)

Higher-order theories approach consciousness from a meta-cognitive perspective.

They argue that conscious experience isn’t just about having perceptions, thoughts, or feelings but also about being aware that you’re having these experiences.

This awareness is crucial and is partly why highly intelligent animals are sociable – we’re collectively aware of our existence and interdependencies.

HOT-1: Generative, top-down, or noisy perception modules

Instead of passively receiving information, the system interprets or generates perceptions.

Imagine a photo editing software that could not just modify a photo but understand why certain features should be adjusted.

HOT-2: Metacognitive monitoring

This means the system should have a ‘thought process’ about its ‘thought processes.’

It must be able to question its own decisions and perceptions like humans and some animals do.

HOT-3: Agency guided by a general belief-formation system

Agency here implies that the AI system is not just passively analyzing data – it’s making decisions based on its analysis.

Furthermore, these decisions continually update the system’s ‘beliefs’ or data models, much like how humans create progressive knowledge through curiosity and investigation.

HOT-4: Ability to hold preferences

This refers to the system’s ability to streamline complex data into simpler, more manageable forms, potentially allowing it to have subjective ‘preferences’ or ‘experiences.’

Attention schema theory (AST)

Attention schema theory posits that consciousness arises from the brain’s grasp of its own attentional state.

AST-1: Predictive model representing current state of attention

The AI system should be able to project what it will likely focus on next, indicating a sort of self-awareness about its own cognitive state.

Predictive processing (PP)

Predictive processing models describe the brain’s predictive capabilities.

It continually updates its models of the world and uses these to predict incoming sensory information.

PP-1: Input modules using predictive coding

This involves using past and current data to predict future states, enabling the system to ‘anticipate’ rather than just ‘react.’
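A stripped-down way to picture this is a loop that makes a prediction, compares it with what actually arrives, and nudges the prediction by the error. The sketch below is a simplified illustration under stated assumptions, not an implementation of predictive coding as neuroscientists model it: the function names and the `learning_rate` parameter are hypothetical.

```python
def predictive_step(prediction: float, observation: float, learning_rate: float = 0.5):
    """Compare prediction with observation and correct the prediction by the error."""
    error = observation - prediction
    updated = prediction + learning_rate * error
    return updated, error


def run(observations, initial_prediction: float = 0.0, learning_rate: float = 0.5):
    """Process observations in order, returning the final prediction and all errors."""
    prediction = initial_prediction
    errors = []
    for obs in observations:
        prediction, error = predictive_step(prediction, obs, learning_rate)
        errors.append(error)
    return prediction, errors


if __name__ == "__main__":
    final_prediction, errors = run([1.0, 1.0, 1.0])
    print(final_prediction, errors)
```

With a steady input, the prediction error shrinks on every step, which is the sense in which such a system ‘anticipates’ rather than merely ‘reacts.’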

Agency and embodiment (AE)

Agency and embodiment theories propose that being able to act and understand the effect of actions are critical indicators of consciousness.

Embodiment is characteristic of animals and other living organisms, which possess tightly linked nervous systems that constantly pass sensory information between organs and central processing systems.

AE-1: Agency

Agency refers to learning from feedback to adapt future actions, particularly when there are conflicting goals. Imagine a self-driving car that learns to optimize for both speed and safety.

AE-2: Embodiment

This deals with a system’s ability to understand how its actions affect the world around it and how those changes will, in turn, affect it.

Researchers are currently working on AI systems connected with advanced sensors, such as the PaLM-E multimodal AI system developed by Google, which was one of the systems researchers analyzed.

Consciousness in AI: Balancing the risks of under-attribution and over-attribution

Researchers and the general public are seeking clarity on how intelligent AI really is and whether it’s genuinely heading towards consciousness.

Most public AIs like ChatGPT are ‘black boxes,’ meaning we don’t entirely understand how they work – their inner mechanics are proprietary secrets.

The study leader, Robert Long, emphasized the need for an interdisciplinary approach to analyzing machine consciousness, warning against the “risk of mistaking human consciousness for consciousness in general.”

Colin Klein, a team member from the Australian National University, also highlighted the ethical implications of AI consciousness. He explained that it’s crucial to understand machine consciousness “to make sure that we don’t treat it unethically, and to ensure that we don’t allow it to treat us unethically.”

He further elaborated, “The idea is that if we can create these conscious AIs, we’ll treat them as slaves basically, and do all sorts of unethical things with them.”

“The other side is whether we worry about us, and what sort of control the AI will have over us; will it be able to manipulate us?”

So, what are the risks of underestimating or overestimating AI consciousness?

Underestimating machine consciousness

As advancements in AI continue to push boundaries, the ethical debates surrounding the technology are beginning to heat up.

Companies like OpenAI are already working toward artificial general intelligence (AGI), intending to surpass the capabilities of current AIs like ChatGPT and build flexible, multimodal systems.

If we accept that any entity capable of conscious suffering deserves moral consideration – as we do with many animals – then overlooking the possibility of conscious AI systems risks moral harm.

Moreover, we might underestimate their ability to harm us. Imagine the risks posed by an advanced conscious AI system that realizes humanity is mistreating it.

Overestimating machine consciousness

On the flip side of the debate is the risk of over-attributing consciousness to AI systems.

Humans tend to anthropomorphize AI, leading many to incorrectly attribute consciousness to the technology because it’s excellent at imitating us.

As the saying often attributed to Oscar Wilde goes, “Imitation is the sincerest form of flattery,” and imitation is something AI does exceptionally well.

Anthropomorphism becomes problematic as AI systems develop human-like characteristics, such as natural language capabilities and facial expressions.

Many criticized Google’s Blake Lemoine for allowing himself to be ‘conned’ by LaMDA’s human-like conversational skills when, in reality, it was synthesizing text from human conversations contained within its training data rather than anything of unique conscious origin.

Emotional needs can magnify the propensity for anthropomorphism. For instance, individuals might seek social interaction and emotional validation from AI systems, skewing their judgment concerning the consciousness of these entities.

The AI chatbot Replika – as the name suggests – is designed to replicate human interactions. It was implicated in a mentally ill man’s plot to assassinate Queen Elizabeth II, which led to his arrest and detention in a psychiatric facility.

Other AIs, such as Anthropic’s Claude and Inflection’s Pi, are designed to closely mimic human conversation to act as personal assistants.

DeepMind is also developing a life coach chatbot designed to provide direct personalized advice to users, an idea that has prompted concerns about whether humans want AI to meddle in their personal affairs.

Amid this debate, it’s crucial to remember that the technology remains young.

While current AI systems are not robustly conscious, the next few years will undoubtedly bring us closer and closer to realizing conscious artificial systems that equal or exceed the cognitive abilities of biological brains.