Amazon and other online retailers continue to be inundated with AI-generated books. The fiction may be passable, but some of the self-help and advice books could even be deadly.

The New York Mycological Society recently posted a public service announcement on X to warn of the potential consequences of suspected AI-generated books on mushroom foraging.

Edible mushrooms are notoriously difficult to tell apart from those that could make you violently ill or even kill you. Unless you prefer the trial-and-error approach, a reliable guidebook is essential.

A quick search on Amazon for books on “edible mushroom identification” delivers about 2,000 results, many of which are no doubt written by experts.

A number of the self-published titles bear the names of authors who can't be found anywhere outside Amazon. Many of these were also published only after AI writing tools like ChatGPT became available.

🚨: PSA Alert!

🔗: link in bio

@Amazon and other retail outlets have been inundated with AI foraging and identification books. Please only buy books of known authors and foragers, it can literally mean life or death. pic.twitter.com/FSqQLDhh42

— newyorkmyc (@newyorkmyc) August 27, 2023

When 404 Media ran a few suspicious books through an AI detector, the results suggested the books were not written by a human. When the outlet approached Amazon about the titles, the books were promptly pulled from its online store. You can see an archive of one of the listings here.

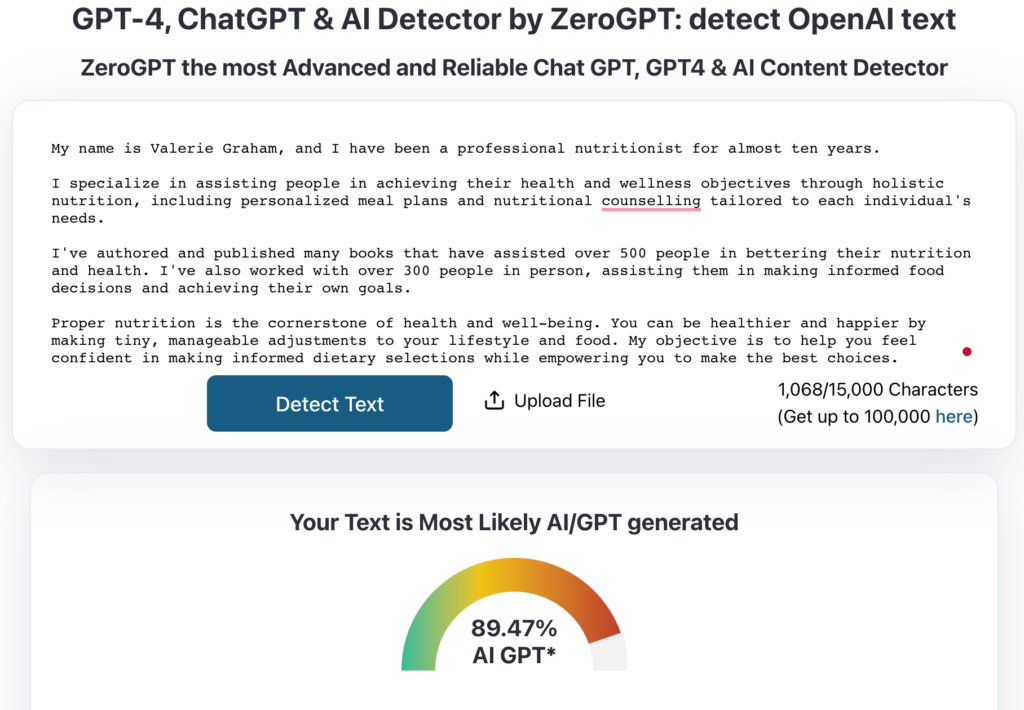

But many more mushroom books that look like they could have been written by AI remain on Amazon, such as this one by an author called Valerie Graham. Her bio page had no photo, and running the bio through ZeroGPT returned an 89% AI result.

Source: ZeroGPT

I’m not sure I’d trust “Valerie” to tell me if the mushroom I found in the woods was safe to eat.

AI tools like ChatGPT have guardrails against producing disinformation or dangerous advice, but when it comes to the subtle differences between delicious and deadly, you'd hardly trust their mushroom identification skills.

There's no screening process on Amazon for the veracity of the information in the books sold on its marketplace, so we're likely to keep seeing AI-authored titles like these pop up.

Earlier this year, we reported on an AI recipe planner that suggested bleach as an ingredient. Two weeks ago, an AI-generated article on MSN recommended an Ottawa food bank as a tourist destination.

It's obvious that AI output shouldn't be trusted without scrutiny. The trouble is that many people assume that if something is published, it must be true.

Visiting the food bank in Ottawa may be an underwhelming holiday experience, but eating a deadly mushroom is a different prospect altogether.