The Federal Trade Commission (FTC) is tasked with protecting consumers from fraud and scams, but ever-improving AI voice cloning is making this challenge more difficult.

While AI voice cloning has fueled numerous debates, there are legitimate use cases that make the technology worth pursuing.

Restoring a personal means of communication to someone who has lost their voice, or giving a voice to a familiar movie character long after the original voice artist has passed away, are just two examples. Last year, New York Mayor Eric Adams used an AI clone of his voice to communicate with city residents in multiple languages.

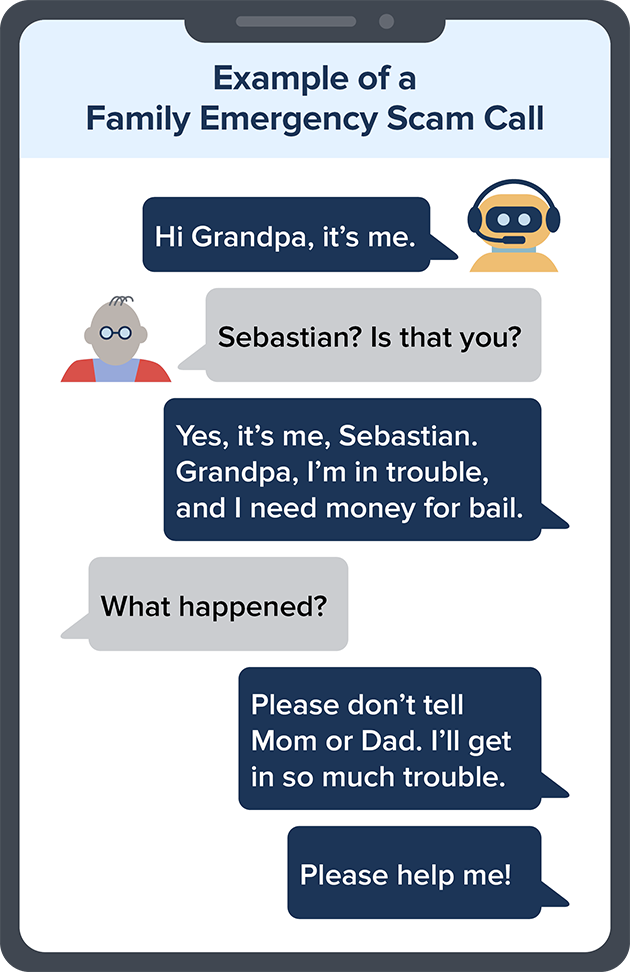

But the technology has also made it a lot easier for scammers and fraudsters to mislead unsuspecting people when they answer a telephone call. Last year, a McAfee report noted an uptick in AI voice fraud, and the FTC warned consumers about “family emergency scams,” where a caller pretends to be a family member in distress.

Short of hanging up and calling the person back on a number you already have for them, it’s really tough to tell an AI voice clone from a real person.

The FTC has issued a challenge to individuals and organizations to submit “breakthrough ideas aimed at preventing, monitoring, and evaluating malicious use of voice cloning technology.”

The challenge runs from January 2 to January 12, and the winning idea will receive a $25,000 reward. If you’ve got any bright ideas, you can submit them through the FTC’s challenge page.

Submissions need to address at least one of the following three intervention points identified by the FTC:

- Prevention or authentication: It must provide a way to limit the use or application of voice cloning software by unauthorized users.

- Real-time detection or monitoring: It must provide a way to detect cloned voices or the use of voice cloning technology.

- Post-use evaluation: It must provide a way to check whether an audio clip contains cloned voices.

Samuel Levine, Director of the FTC’s Bureau of Consumer Protection, said, “We will use every tool to prevent harm to the public stemming from abuses of voice cloning technology.”

In an indication of where the FTC may shift responsibility for these scams, Stephanie T. Nguyen, the FTC’s Chief Technology Officer, said, “The challenge is crafted in a way that ensures companies are responsible for the first- and second-order effects of the products they release.”

That may be tough, or even impossible. Even if reputable companies add a “watermark” to the audio their tools generate, freely available open-source voice cloning tools already exist that scammers can use instead.

If you’ve sent someone a voice note, left a message on an answering service, or posted a video of yourself on YouTube, then anyone with access to that recording could use it to clone your voice.

As generative AI improves, the best defense we have may be to become a lot more skeptical and not be too quick to trust our eyes and ears.