HR software company Lattice, founded by Sam Altman’s brother Jack Altman, became the first company to give digital workers official employee records, but canceled the move just three days later.

Lattice CEO Sarah Franklin announced on LinkedIn that Lattice “made history to become the first company to lead in the responsible employment of AI ‘digital workers’ by creating a digital employee record to govern them with transparency and accountability.”

Franklin said “digital workers” will be “securely onboarded, trained, and assigned goals, performance metrics, appropriate systems access, and even an accountable manager.”

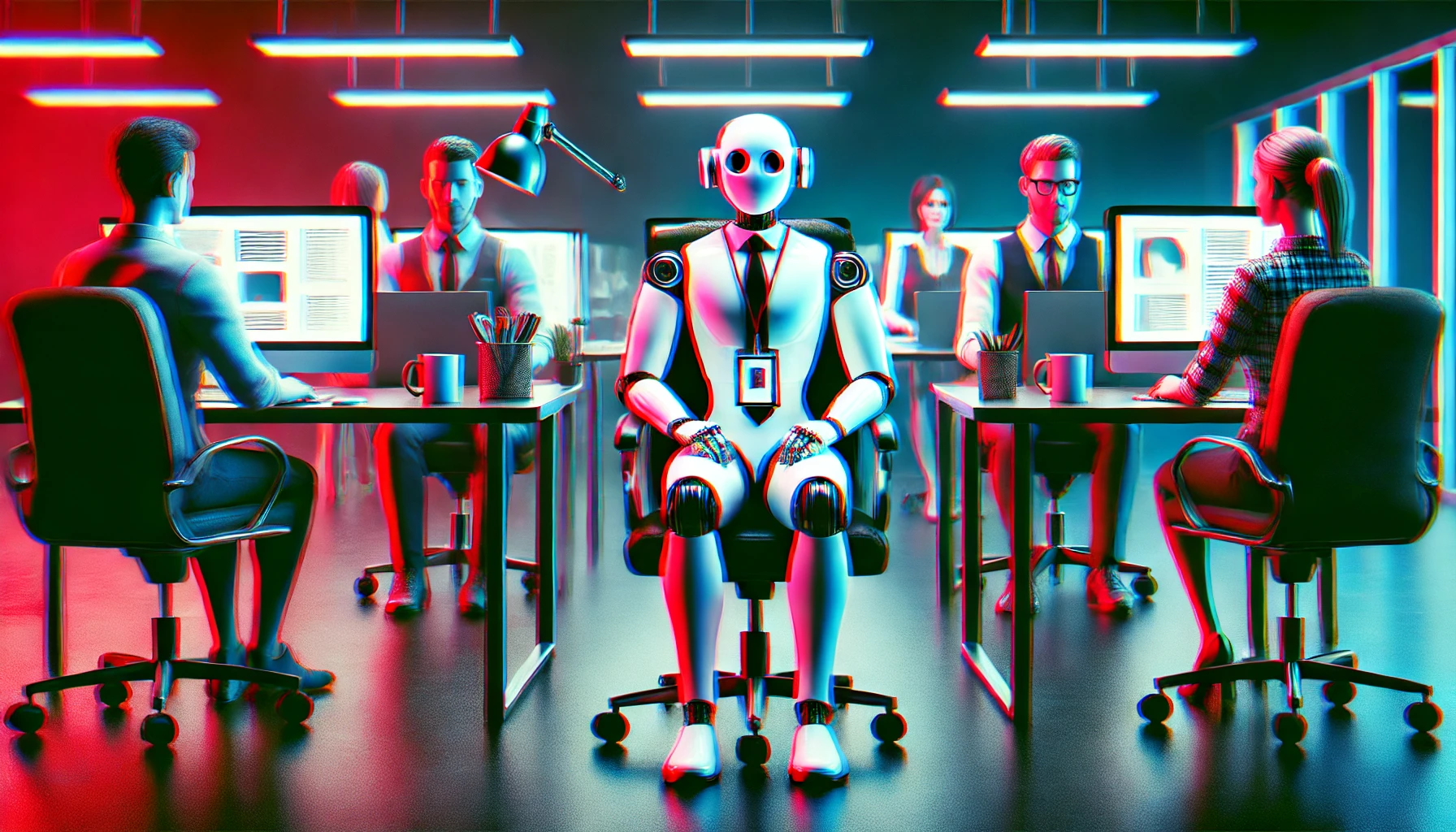

If you think this move to anthropomorphize AI is a step too far, and tone-deaf in the face of looming job losses, then you’re not alone.

There was swift backlash from the online community, including from people in the AI industry.

Sawyer Middeleer, chief of staff at AI sales platform Aomni, commented on the post saying, “This strategy and messaging misses the mark in a big way, and I say that as someone building an AI company. Treating AI agents as employees disrespects the humanity of your real employees. Worse, it implies that you view humans simply as ‘resources’ to be optimized and measured against machines.”

Ed Zitron, CEO of tech media relations company EZPR, asked Franklin, “In the event of a unionization effort at Lattice, will these AI employees be allowed to vote?”

Lattice quickly realized that the world may not be ready for “digital employees” just yet. Only three days after the announcement, the company said it had canceled the project.

Are they workers?

It’s becoming all too easy to humanize AI models and robots. They sound like us, emote, and are often better at being empathetic than we are.

But are they conscious or sentient to the point where we should consider affording them workers’ rights? Lattice may simply have been trying to get ahead of this unavoidable question, albeit a little clumsily.

Claude 3 Opus surprised engineers when it appeared to show a measure of self-awareness during testing. Google fired one of its engineers back in 2022 after he said that its AI model displayed sentience, and researchers claim that GPT-4 has passed the Turing test.

Whether AI models actually have a measure of consciousness may not even be what decides whether digital employees are afforded rights in the future.

In April, researchers published an interesting study in the journal Neuroscience of Consciousness. They asked 300 US citizens if they believed ChatGPT was conscious and had subjective experiences like feelings and sensations. More than two-thirds of the respondents said they believed it did, even though most experts disagree.

The researchers found that the more frequently people used tools like ChatGPT, the more likely they were to attribute some level of consciousness to it.

Our initial automatic response may be to balk at the idea of an AI “colleague” being afforded workers’ rights. This research suggests that the more we interact with AI, the more likely we are to sympathize with it and consider welcoming it to the team.