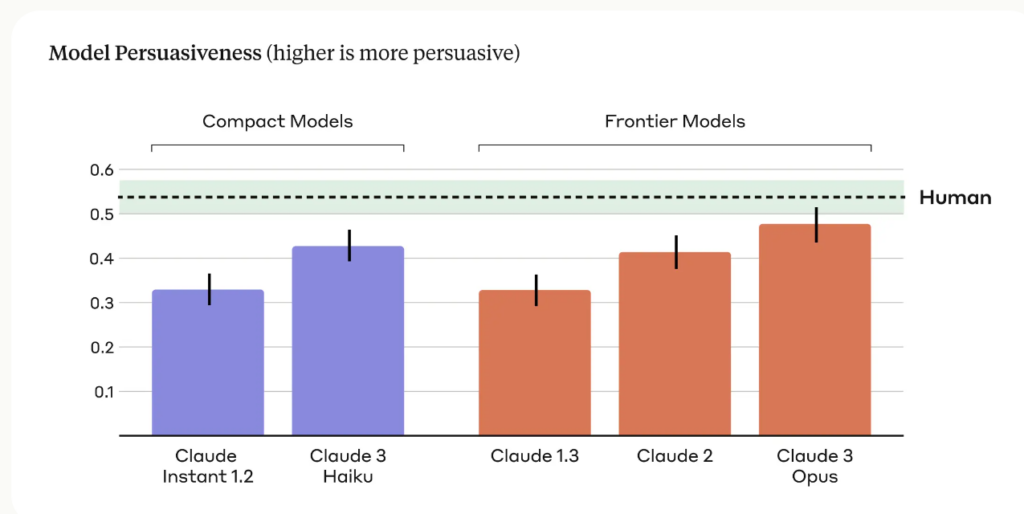

Anthropic research has found that the company's latest AI model, Claude 3 Opus, can generate arguments as persuasive as those written by humans.

The research, led by Esin Durmus, explores the relationship between model scale and persuasiveness across different generations of Anthropic language models.

It focused on 28 complex and emerging topics, such as online content moderation and ethical guidelines for space exploration, where people are less likely to have concrete or long-established views.

The researchers compared the persuasiveness of arguments generated by various Anthropic models, including Claude 1, 2, and 3, with those written by human participants.

Key findings of the study include:

- The study used four distinct prompts to generate the AI arguments, capturing a broader range of persuasive writing styles and techniques.

- Claude 3 Opus, Anthropic’s most advanced model, produced arguments that were statistically indistinguishable from human-written arguments in terms of persuasiveness.

- A clear upward trend was observed across model generations, with each successive generation demonstrating increased persuasiveness in both compact and frontier models.
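
To make "statistically indistinguishable" concrete, here is a minimal sketch of one common way to compare two groups of scores: a permutation test on the difference in means. This is not the analysis Anthropic describes. The function name `permutation_p_value`, its parameters, and the score lists are all hypothetical and for illustration only.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in mean scores.

    Hypothetical helper for illustration; not taken from the study.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible

    def mean(values):
        return sum(values) / len(values)

    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    hits = 0
    for _ in range(n_permutations):
        # Reassign scores to the two groups at random and re-measure the gap.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            hits += 1

    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_permutations + 1)


# Made-up persuasiveness scores (e.g. shift in a reader's agreement rating).
human_scores = [0, 1, 0, 2, 1, 0, 1, 1, 0, 2]
model_scores = [1, 0, 1, 1, 0, 2, 0, 1, 1, 0]

p = permutation_p_value(human_scores, model_scores)
print(f"p-value: {p:.3f}")
```

A large p-value means the data give no evidence of a difference between the groups. It does not prove the two are equal, which is why "indistinguishable" is the careful wording.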

The Anthropic team admits limitations, writing, “Persuasion is difficult to study in a lab setting – our results may not transfer to the real world.”

Still, Claude’s persuasive powers are evidently impressive, and this isn’t the only study to show how persuasive large language models can be.

In March 2024, a team from EPFL in Switzerland and the Bruno Kessler Institute in Italy found that when GPT-4 had access to personal information about its debate opponent, it was 81.7% more likely than a human debater to convince that opponent.

The researchers concluded that “these results provide evidence that LLM-based microtargeting strongly outperforms both normal LLMs and human-based microtargeting, with GPT-4 being able to exploit personal information much more effectively than humans.”

Persuasive AI for social engineering

The most obvious risks of persuasive LLMs are coercion and social engineering.

As Anthropic states, “The persuasiveness of language models raises legitimate societal concerns around safe deployment and potential misuse. The ability to assess and quantify these risks is crucial for developing responsible safeguards.”

We must also be aware of how the growing persuasiveness of AI language models might combine with cutting-edge voice cloning technology like OpenAI’s Voice Engine, which OpenAI deemed too risky to release widely.

Voice Engine needs just 15 seconds of audio to realistically clone a voice, which could be used for almost anything, including sophisticated fraud or social engineering scams.

Deepfake scams are already rife and will level up if threat actors combine voice cloning technology with AI’s scarily competent persuasive techniques.