The US Army is dabbling in integrating AI chatbots into its strategic planning, albeit within the confines of a war game simulation based on the popular video game StarCraft II.

The study, led by the US Army Research Laboratory, analyzes the battlefield strategies generated by OpenAI’s GPT-4 Turbo and GPT-4 Vision.

This is part of OpenAI’s collaboration with the Department of Defense (DOD), which follows the DOD’s establishment of a generative AI task force last year.

AI’s use on the battlefield is hotly debated. A similar recent study on AI wargaming found that LLMs like GPT-3.5 and GPT-4 tend to escalate diplomatic conflicts, sometimes to the point of simulated nuclear war.

This new research from the US Army used StarCraft II to simulate a battlefield scenario involving a limited number of military units.

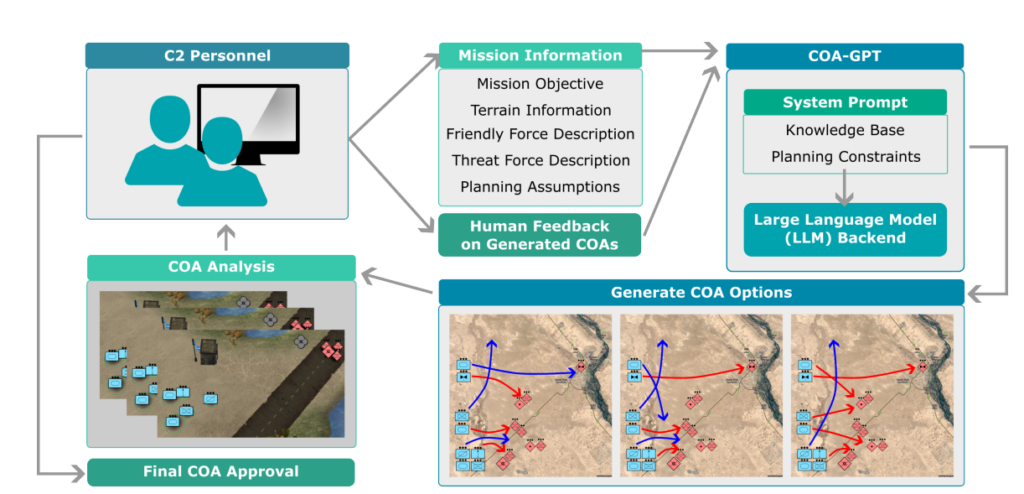

The researchers dubbed this system “COA-GPT,” with COA standing for the military term “Courses of Action,” meaning the candidate plans a commander can choose from to accomplish a mission.

COA-GPT assumed the role of a military commander’s assistant, tasked with devising strategies to obliterate enemy forces and capture strategic points.

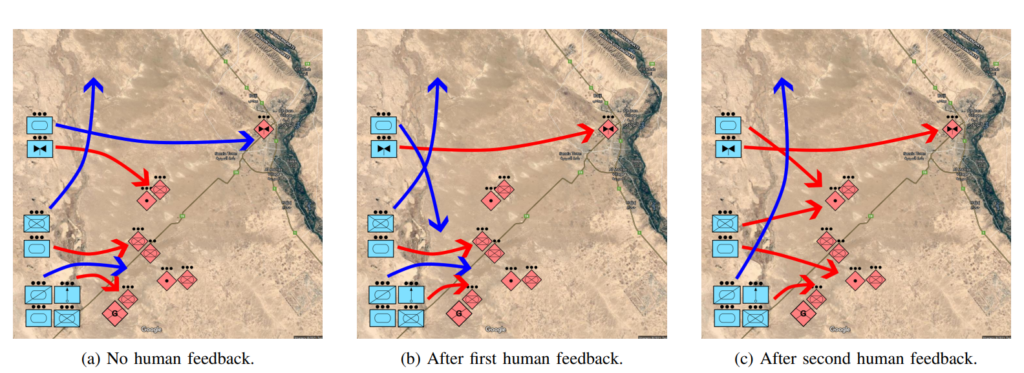

The researchers note that traditional COA development is notoriously slow and labor-intensive. COA-GPT, by contrast, generates COAs in seconds and can incorporate human feedback into the process.

COA-GPT outperforms other methods, but at a cost

COA-GPT outperformed existing methods, generating strategic COAs faster and adapting to real-time feedback.

However, there were flaws. Most notably, COA-GPT incurred higher friendly-force casualties in accomplishing its mission objectives.

The study states, “We observe that the COA-GPT and COA-GPT-V, even when enhanced with human feedback exhibits higher friendly force casualties compared to other baselines.”

Does this deter the researchers? Seemingly not.

The study says, “In conclusion, COA-GPT represents a transformative approach in military C2 operations, facilitating faster, more agile decision-making and maintaining a strategic edge in modern warfare.”

It’s worrying that an AI system that incurred more friendly casualties than the baselines is described as a “transformative approach.”

The DOD has already identified other avenues for exploring AI’s military uses, but concerns about the technology’s readiness and ethical implications loom.

For example, who is responsible when military AI applications go wrong? The developers? The person in charge? Or someone further down the chain?

AI warfare systems are already deployed in the conflicts in Ukraine and Palestine-Israel, but these questions remain largely untested.

Let’s hope it remains that way.