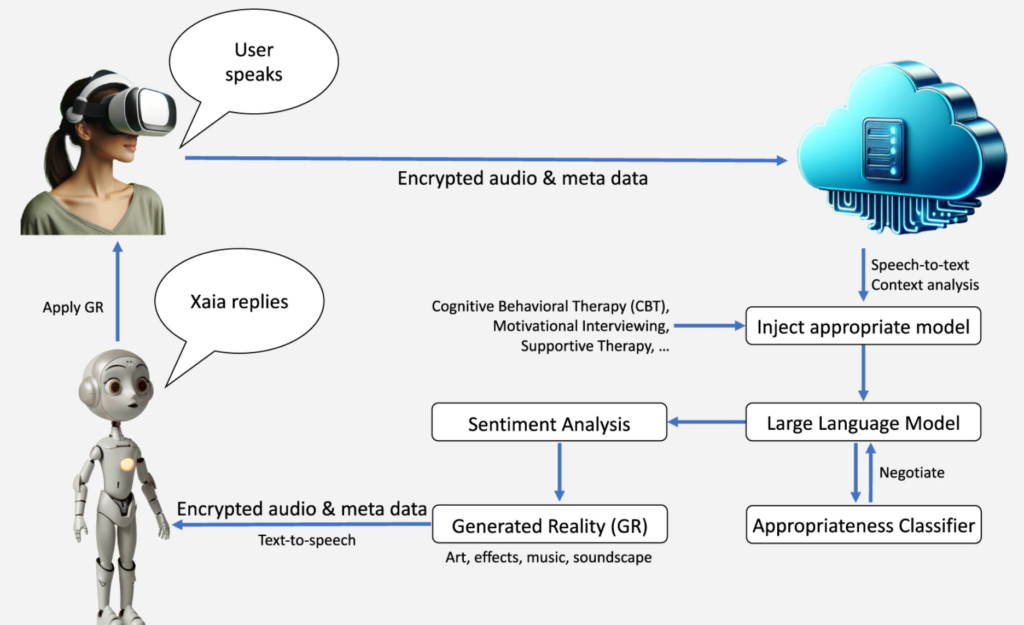

Researchers from Cedars-Sinai developed a virtual reality (VR) AI mental health support tool named the eXtended-reality Artificial Intelligence Assistant (XAIA).

The study, led by Brennan M. R. Spiegel and published in Nature’s npj Digital Medicine, used AI, spatial computing, and VR to immerse users in calming, nature-inspired settings where they engage in therapeutic conversations with an AI avatar.

The system used GPT-4 to offer immersive therapy sessions to 14 individuals experiencing mild to moderate anxiety or depression. XAIA can be accessed on the Apple Vision Pro VR headset.

Lead researcher Brennan Spiegel, MD, MSHS, wrote in a Cedars-Sinai blog: “Apple Vision Pro offers a gateway into Xaia’s world of immersive, interactive behavioral health support—making strides that I can only describe as a quantum leap beyond previous technologies.”

He continued, “With Xaia and the stunning display in Apple Vision Pro, we are able to leverage every pixel of that remarkable resolution and the full spectrum of vivid colors to craft a form of immersive therapy that’s engaging and deeply personal.”

To train the AI, Spiegel and his team incorporated transcriptions from cognitive behavioral therapy (CBT) sessions conducted by experienced therapists, focusing on empathy, validation, and effective communication.

The AI’s responses were further refined through iterative testing, which involved therapists role-playing various clinical scenarios. This led to continuous improvement in the system’s psychotherapeutic communication.

Participants discussed various topics with the AI, allowing researchers to document the AI’s application of psychotherapeutic techniques. By and large, XAIA was noted for its ability to express empathy, sympathy, and validation, enhancing the therapeutic experience.

For example, XAIA’s empathetic response to a participant’s experience of feeling left out was, “I’m sorry to hear that you felt rejected in such a definitive way, especially when you were pursuing what’s important to you. It must have been a tough experience.”

Researchers performed a qualitative thematic analysis of participant feedback, suggesting a general appreciation for the AI’s nonjudgmental nature and the quality of the VR environments.

Some said XAIA could offer a valuable alternative to traditional therapy, especially for those seeking anonymity or who are reluctant to engage in face-to-face sessions.

Others highlighted the importance of human interaction and the unique benefits of connecting with a human therapist.

The study also identified areas for improvement, such as the AI’s tendency to over-question participants or inadequately explore emotional responses to significant life events.

Brennan Spiegel elaborated on the tool’s mission, clarifying, “While this technology is not intended to replace psychologists — but rather augment them — we created XAIA with access in mind, ensuring the technology can provide meaningful mental health support across communities.”

The study offers a starting point for deeper exploration of immersive therapy environments, which could benefit people who are unable to access in-person therapy or who wish to remain private and anonymous in their discussions.

AI for analyzing therapy conversations

In addition to acting as the therapist, AI has been used to analyze the dynamics of real therapy conversations.

In a 2023 study, researchers used AI to analyze psychotherapy sessions, finding that certain speech patterns may help explain the bond between therapists and their patients.

The drive behind this research stems from a longstanding dilemma in psychotherapy: how do we accurately gauge and improve the therapeutic alliance?

Published in the journal iScience, the study showed how personal pronouns and speech hesitations signal the depth of the therapist-patient connection.

The therapeutic alliance is the essential relationship between therapists and their patients, a foundation critical for effective therapy.

Traditionally, understanding this relationship has been a subjective affair, relying on personal accounts and third-party observations, which, although valuable, might miss the fluid dynamics of actual therapy sessions.

Researchers from the Icahn School of Medicine at Mount Sinai saw an opportunity to employ machine learning to clarify what makes therapeutic communication work.

The study took place at clinics in New York City, involving 28 patients and 18 therapists engaging in a variety of therapy sessions. Before sessions kicked off, patients reflected on their past therapeutic relationships and attachment styles through online surveys.

Researchers applied natural language processing (NLP) and machine learning to session transcripts, focusing on the usage of pronouns like “I” and “we” and non-fluency markers like “um” and “like.”
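The kind of marker counting described above can be illustrated with a minimal Python sketch. This is not the study’s pipeline: the word lists, the `marker_rates` function, and the transcript format (a list of speaker and utterance pairs) are all assumptions made for illustration, and a real analysis would need to handle contractions such as “I’m” and distinguish “like” as a filler from “like” as a verb.

```python
import re
from collections import Counter

# Illustrative word lists (assumptions, not the study's actual feature set).
FIRST_PERSON = {"i", "we"}
NON_FLUENCY = {"um", "umm", "uh", "like"}

# Lowercase words, keeping apostrophes so "i'm" stays one token.
TOKEN_RE = re.compile(r"[a-z']+")


def marker_rates(transcript):
    """Return per-speaker rates of first-person pronouns and non-fluency markers.

    `transcript` is a list of (speaker, utterance) pairs. Each rate is the
    marker count divided by that speaker's total number of tokens.
    """
    totals = Counter()
    pronouns = Counter()
    fillers = Counter()
    for speaker, utterance in transcript:
        tokens = TOKEN_RE.findall(utterance.lower())
        totals[speaker] += len(tokens)
        pronouns[speaker] += sum(t in FIRST_PERSON for t in tokens)
        fillers[speaker] += sum(t in NON_FLUENCY for t in tokens)
    return {
        speaker: {
            "pronoun_rate": pronouns[speaker] / totals[speaker],
            "non_fluency_rate": fillers[speaker] / totals[speaker],
        }
        for speaker in totals
        if totals[speaker] > 0  # skip speakers with no recognizable tokens
    }


if __name__ == "__main__":
    # Made-up two-turn session, for demonstration only.
    session = [
        ("therapist", "How have we been doing since last week?"),
        ("patient", "Um, I think it is like, better. I slept more."),
    ]
    for speaker, rates in marker_rates(session).items():
        print(speaker, rates)
```

Per-speaker rates like these could then be compared against alliance ratings across sessions, though simple counts of this kind capture only part of what the researchers analyzed.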

The way therapists and patients wielded personal pronouns seemed to affect the alliance.

For instance, the study found that when therapists frequently used “we,” it didn’t always enhance the alliance as one might expect, especially in cases involving personality disorders. This counters the usual assumption that inclusive language automatically strengthens connections.

Moreover, either party’s overreliance on “I” was linked to lower alliance ratings, hinting at the potential pitfalls of too much self-focus in therapy sessions.

The authors wrote, “Our primary finding was that more frequent first-person pronoun usage in both therapists and patients (‘we,’ ‘i do,’ ‘i think,’ ‘when i’) characterized sessions with lower alliance ratings.”

An unexpected finding was that hesitations, often viewed as a negative marker in conversation, were associated with higher alliance ratings, suggesting that pauses could foster authenticity and engagement.

Previous research has found that pauses are a key part of genuinely thoughtful conversation.

In the researchers’ words: “We found that higher non-fluency in patients (e.g., ‘is like,’ ‘umm’), but not in therapists, characterized sessions with higher alliance ratings by patients.”

The researchers also noted that the study’s limited scope and observational nature mean these correlations should be interpreted with caution.

AI has also been used for speech analysis in other medical settings. For example, UCL and University of Oxford researchers developed a model to detect potential schizophrenia from speech patterns.