OpenAI announced that it will use the C2PA standard to add metadata to images generated using DALL-E 3.

The announcement comes as companies continue to look for ways to stop their generative AI products from being used to spread disinformation.

C2PA is an open technical standard for embedding metadata in an image, including its origin, edit history, and other information. OpenAI says that images generated with DALL-E 3 directly, or via ChatGPT or its API, will now include C2PA data. The change will reach mobile users by 12 February.

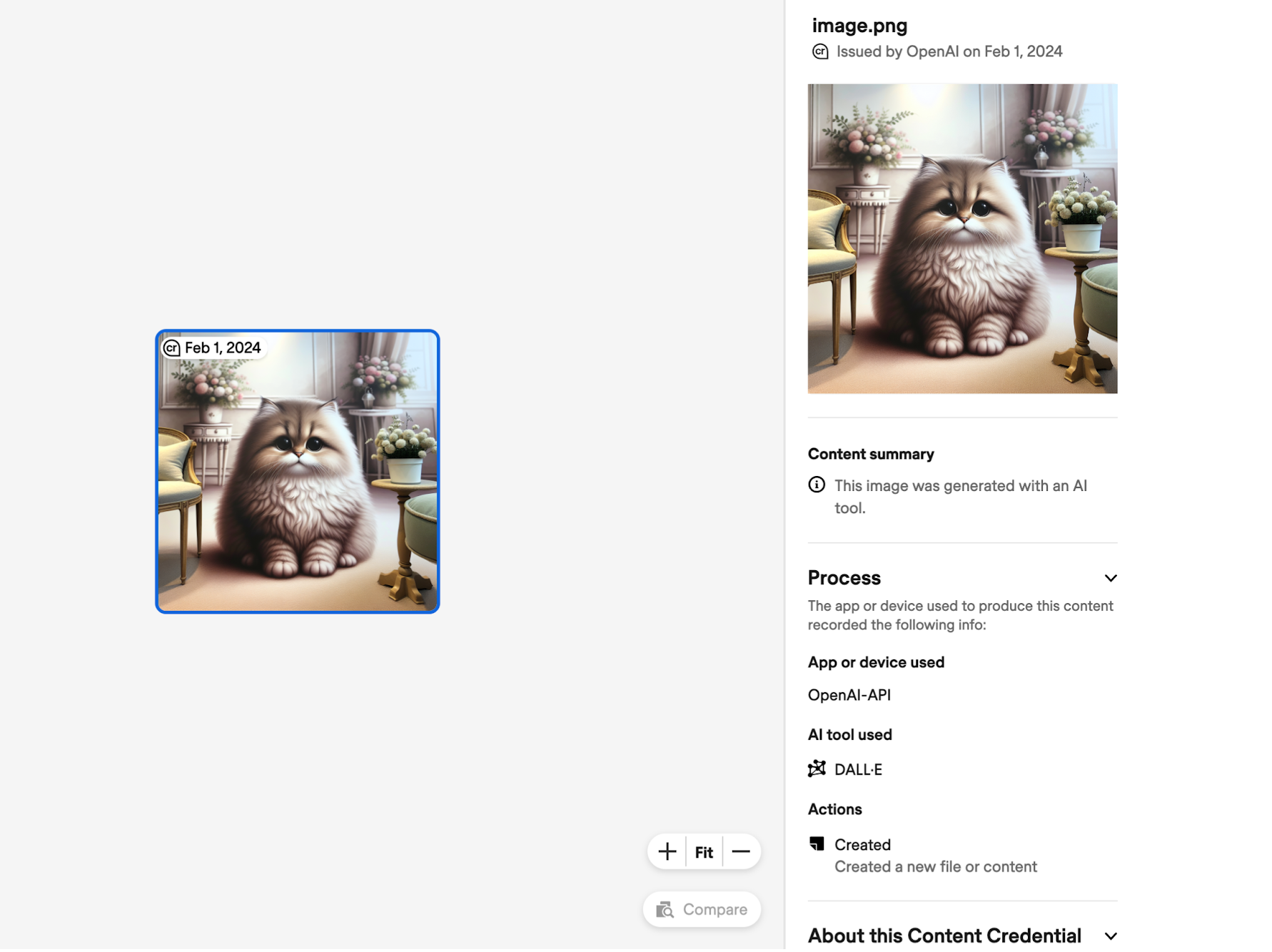

An image carrying C2PA metadata can be uploaded to a site such as Content Credentials Verify to check its provenance. Here’s an example of the information C2PA reveals about an image made with DALL-E via the API.
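To make the idea concrete: in a PNG file, metadata sits in named "chunks" alongside the pixel data. The minimal, standard-library-only Python sketch below lists a PNG's chunk types and reports whether a `caBX` chunk is present. Our reading of the C2PA specification is that this is where the manifest store goes in PNG files, but treat that as an assumption. The sketch only detects that a manifest is present. It does not parse the manifest or verify its signature, which is the job of tools like Content Credentials Verify. The function names are hypothetical.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk_types(data: bytes) -> list[str]:
    """Return the chunk types of a PNG file in order (CRCs are not checked)."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        types.append(ctype.decode("latin-1"))
        pos += 12 + length  # skip header (8), payload (length) and CRC (4)
        if ctype == b"IEND":
            break
    return types


def has_c2pa_manifest(data: bytes) -> bool:
    # Assumption: C2PA embeds its manifest store in a PNG chunk of type "caBX".
    return "caBX" in png_chunk_types(data)
```

For example, `has_c2pa_manifest(open("image.png", "rb").read())` (with `image.png` standing in for any downloaded PNG) would return `True` only while the file still carries that chunk.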

Social media platforms could also use this data to flag and label images as AI-generated. Meta recently announced that it would step up its efforts to do so.

Adding C2PA data increases file size. OpenAI gives these example estimates:

- 3.1MB → 3.2MB for PNG through API (3% increase)

- 287k → 302k for WebP through API (5% increase)

- 287k → 381k for WebP through ChatGPT (32% increase)
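Each percentage is simply (after - before) / before × 100. A quick Python check on OpenAI's quoted figures is below. The variable and function names are our own, and since the sizes themselves are rounded, the results are approximate.

```python
# (before, after) file sizes as quoted by OpenAI; units cancel in the ratio.
estimates = {
    "PNG via API": (3.1, 3.2),       # MB
    "WebP via API": (287, 302),      # KB
    "WebP via ChatGPT": (287, 381),  # KB
}


def percent_increase(before: float, after: float) -> float:
    """Relative growth from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


for label, (before, after) in estimates.items():
    print(f"{label}: {percent_increase(before, after):.1f}%")
```

This prints 3.2%, 5.2% and 32.8%, so OpenAI's whole-number percentages appear to be rounded down.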

OpenAI acknowledges that “C2PA is not a silver bullet to address issues of provenance.” The metadata is easily removed, often unintentionally.

Taking a screenshot of an image discards its C2PA data. Even converting an image from PNG to JPG will destroy most metadata, and most social media platforms strip metadata from images when they’re uploaded.
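Part of the reason the data is so fragile is that, in a PNG, the manifest is just one more optional chunk. Our reading of the C2PA specification is that it lives in a chunk of type `caBX`, but treat that as an assumption. Any tool that copies only the chunks needed to draw the picture leaves the manifest behind. The minimal sketch below illustrates this by rewriting a PNG so that only its critical chunks (`IHDR`, `PLTE`, `IDAT`, `IEND`) remain. The image looks identical, but the provenance data is gone. Screenshots and format conversions go further still, because they re-encode the pixels entirely. The helper names and the tiny 1x1 test image are hypothetical, and this does not claim to mirror how any particular platform processes uploads.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Critical chunks a decoder needs to display the image; everything else is ancillary.
CRITICAL = {b"IHDR", b"PLTE", b"IDAT", b"IEND"}


def make_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, payload, CRC-32 over type + payload."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


def strip_ancillary_chunks(data: bytes) -> bytes:
    """Rewrite a PNG keeping only critical chunks; pixel data is copied unchanged."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    out = [PNG_SIGNATURE]
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        chunk_end = pos + 12 + length  # header (8) + payload + CRC (4)
        if ctype in CRITICAL:
            out.append(data[pos:chunk_end])  # copied verbatim, CRC included
        pos = chunk_end
        if ctype == b"IEND":
            break
    return b"".join(out)


if __name__ == "__main__":
    # A 1x1 8-bit grayscale image with a stand-in "caBX" chunk (hypothetical data).
    header = make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    pixels = make_chunk(b"IDAT", zlib.compress(b"\x00\x80"))  # filter byte + 1 pixel
    end = make_chunk(b"IEND", b"")
    manifest = make_chunk(b"caBX", b"stand-in manifest bytes")
    original = PNG_SIGNATURE + header + manifest + pixels + end
    stripped = strip_ancillary_chunks(original)
    print(stripped == PNG_SIGNATURE + header + pixels + end)  # True
```

Nothing in the stripped file is corrupted or marked as edited. The provenance chunk is simply absent, which is exactly the "easily removed, often unintentionally" problem OpenAI acknowledges.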

OpenAI says it hopes that adding C2PA to DALL-E images, and encouraging users to recognize such signals, will help increase “the trustworthiness of digital information.”

It may do so to a limited degree, but because C2PA data is so easy to remove, it offers little protection against someone who deliberately sets out to mislead with an image. If anything, it may just slow the deepfakers down a little.

Google’s SynthID digital watermark seems a far more robust solution, but OpenAI is unlikely to adopt a tool created by a competitor.