NVIDIA has released Chat with RTX as a tech demo of how AI chatbots can be run locally on Windows PCs using its RTX GPUs.

The standard way to use an AI chatbot is through a web platform like ChatGPT or by sending queries via an API, with inference running on cloud servers. The drawbacks are cost, latency, and privacy concerns as personal or corporate data travels back and forth.

NVIDIA’s RTX range of GPUs now makes it possible to run a large language model (LLM) locally on your Windows PC, even when you’re not connected to the internet.

Chat with RTX lets users create a personalized chatbot using either Mistral or Llama 2. It uses retrieval-augmented generation (RAG) and NVIDIA’s inference-optimizing TensorRT-LLM software.

You can point Chat with RTX at a folder on your PC and then ask it questions about the files in that folder. It supports various file formats, including .txt, .pdf, .doc/.docx, and .xml.

Because the LLM analyzes locally stored files and inference happens on your own machine, responses are fast and none of your data travels over potentially unsecured networks.
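To make the RAG idea more concrete, here is a minimal Python sketch of the retrieval step: find the passages in your local files that best match a question, then prepend them to the prompt before it reaches the model. This is not how Chat with RTX is implemented. Real RAG pipelines typically use learned vector embeddings, whereas this toy version assumes plain .txt files and scores word overlap with cosine similarity. All function names (`load_txt_folder`, `chunk`, `retrieve`, `build_prompt`) are hypothetical, and the sketch stops at building the augmented prompt rather than calling an LLM.

```python
import math
import re
from collections import Counter
from pathlib import Path


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def load_txt_folder(folder: str) -> dict[str, str]:
    """Hypothetical loader: read every .txt file in a folder into {filename: text}."""
    return {p.name: p.read_text(encoding="utf-8", errors="ignore")
            for p in Path(folder).glob("*.txt")}


def chunk(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(docs: dict[str, str], query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k (filename, chunk) pairs whose words best overlap the query."""
    q = Counter(tokenize(query))
    scored = []
    for name, text in docs.items():
        for piece in chunk(text):
            scored.append((cosine(q, Counter(tokenize(piece))), name, piece))
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(name, piece) for _, name, piece in scored[:k]]


def build_prompt(docs: dict[str, str], query: str, k: int = 2) -> str:
    """Augment the question with retrieved context; a local LLM would receive this string."""
    context = "\n".join(f"[{name}] {piece}" for name, piece in retrieve(docs, query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = {
        "notes.txt": "The quarterly budget review is scheduled for March.",
        "recipes.txt": "Whisk the eggs and fold in the flour.",
    }
    print(retrieve(docs, "When is the budget review?", k=1))
    # [('notes.txt', 'The quarterly budget review is scheduled for March.')]
```

Because retrieval and generation both run on the same machine in this setup, nothing in `docs` has to leave the PC; only the final prompt is handed to the locally hosted model.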

You can also give it a YouTube video URL and ask it questions about the video. That requires internet access, but it’s a great way to get answers without having to watch a long video.

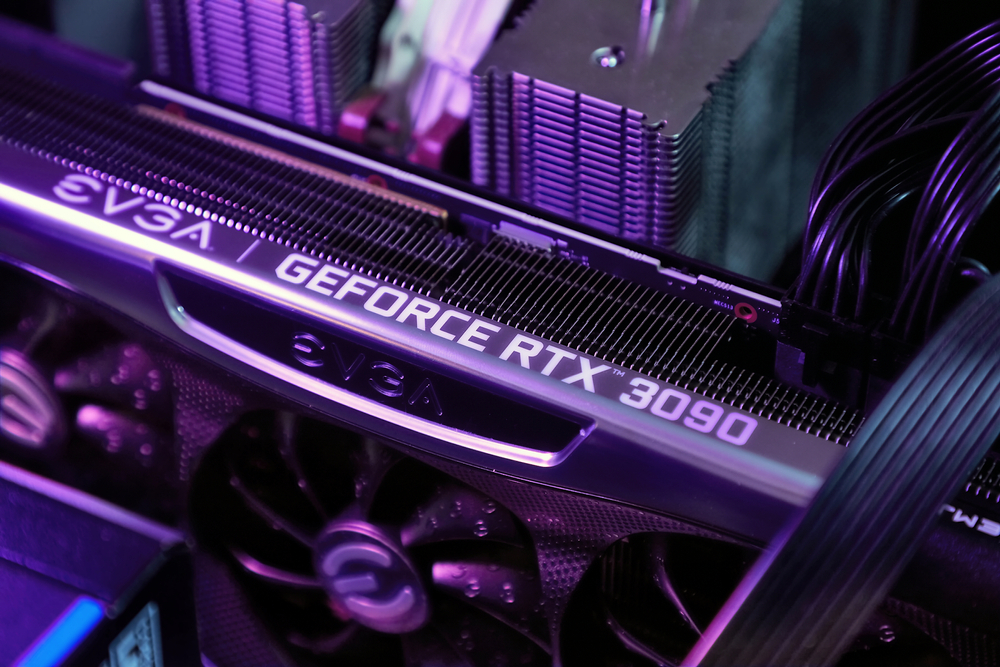

You can download Chat with RTX for free, but you’ll need to be running Windows 10 or 11 on a PC with a GeForce RTX 30 Series GPU or newer and at least 8GB of VRAM.

Chat with RTX is a demo rather than a finished product. It’s a little buggy and doesn’t remember context, so you can’t ask it follow-up questions. But it’s a nice example of how we’re likely to use LLMs in the future.

Running an AI chatbot locally, with zero API call costs and very little latency, is likely how most users will eventually interact with LLMs. The open-source approach taken by companies like Meta means on-device AI could drive adoption of their free models over proprietary ones like GPT.

That said, mobile and laptop users will have to wait a while before the computing power of an RTX GPU fits into smaller devices.