The Institute for Strategic Dialogue (ISD) analyzed an online influence campaign orchestrated by a Chinese network to disrupt the US presidential election.

This operation, dubbed ‘Spamouflage’ because of its deceptive tactics, has been linked to the Chinese Communist Party (CCP).

The campaign uses AI and a network of social media accounts to stoke debate on divisive US issues such as drug abuse, homelessness, and gun violence.

Around half of the world’s population lives in countries holding elections this year, fueling widespread anxiety that AI deep fakes could amplify misinformation and sway voters.

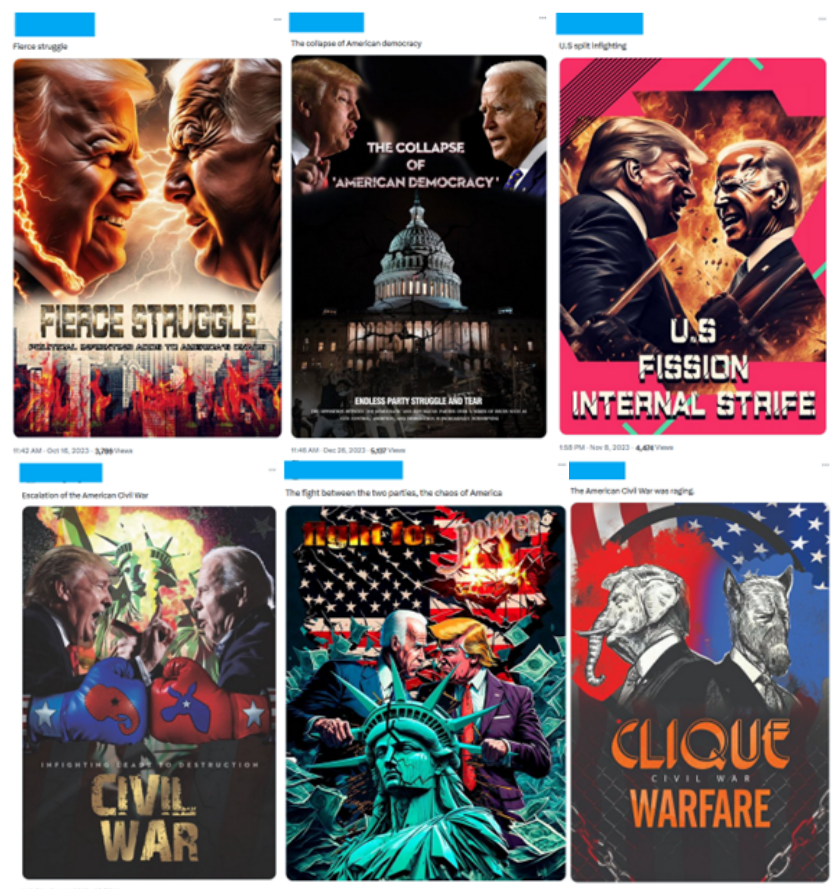

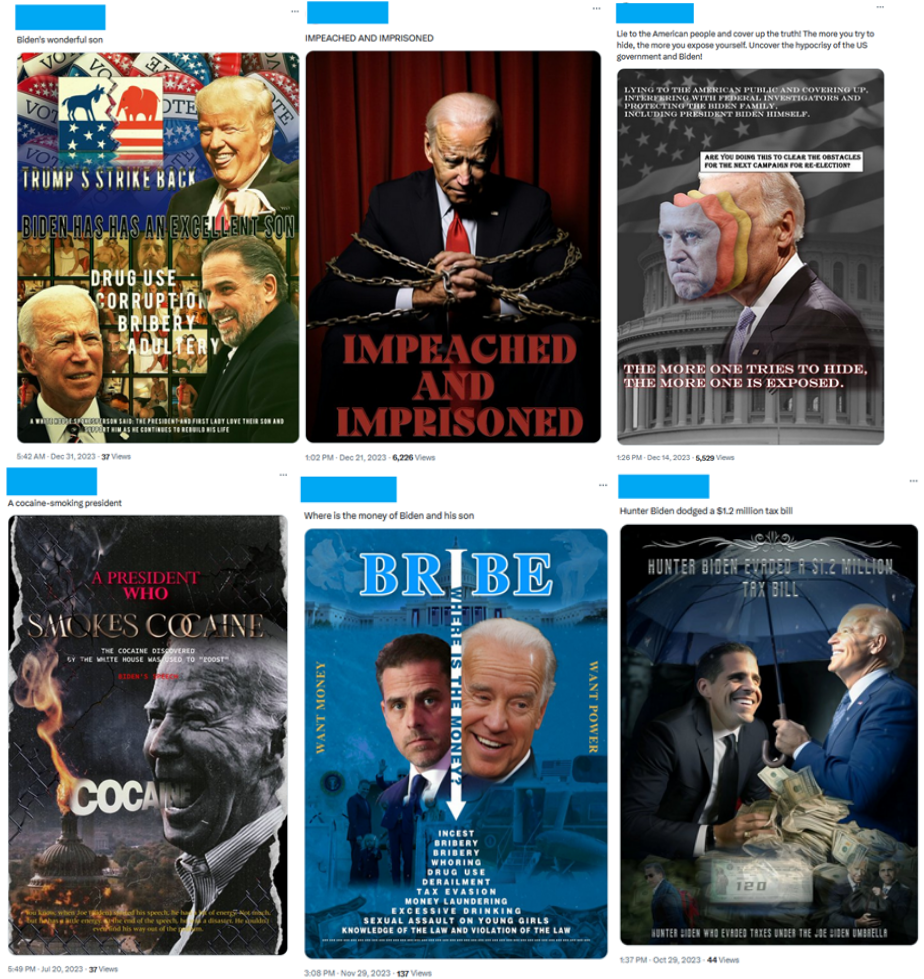

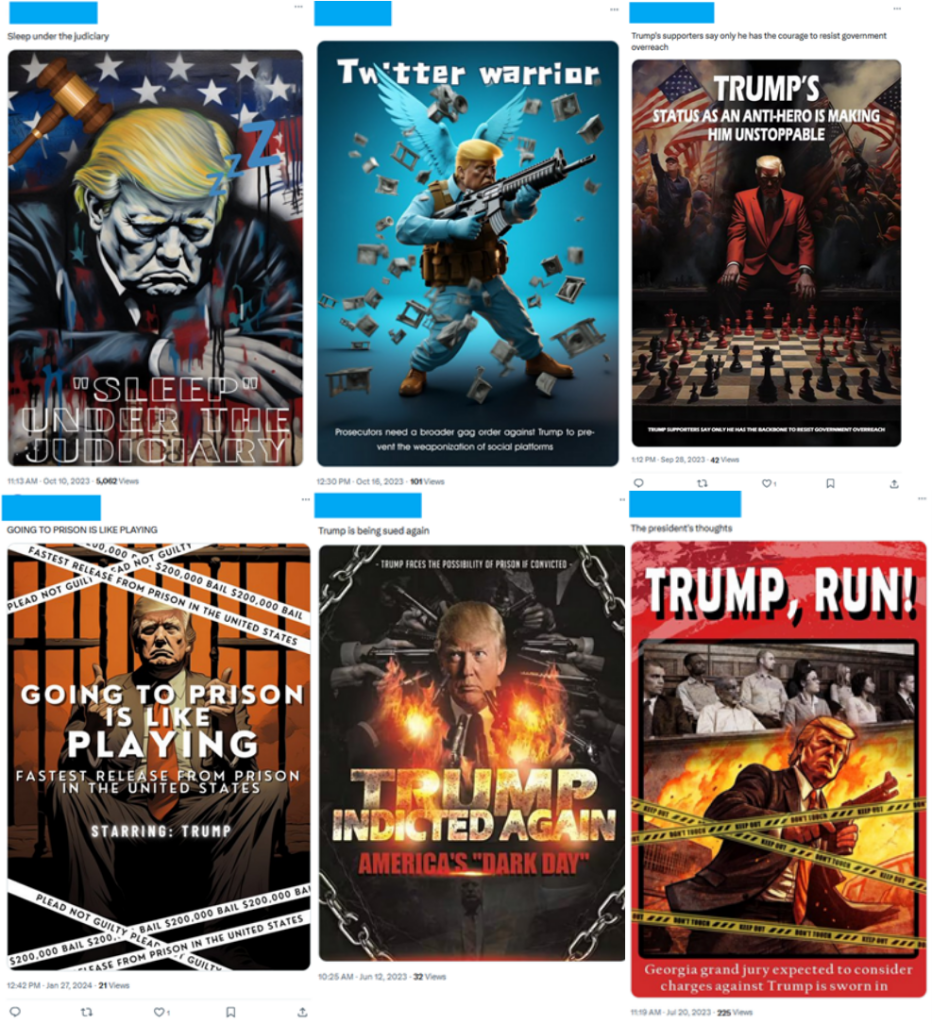

Notably, the ‘Spamouflage’ campaign utilizes AI-generated and manipulated images of political figures like Joe Biden and Donald Trump to accentuate and exploit political divisions.

The ISD’s report states: “The online campaign used artificial intelligence (AI) and a network of social media accounts in an apparent bid to malign the US image as a nation rife with drug abuse, homelessness, and gun violence.”

Elise Thomas of ISD notes in the report: “Among the narratives propagated are claims involving drug use by President Biden to enhance his public speaking performance and allegations of corruption.”

This operation, believed to be Chinese in origin, has been active since at least 2017.

In April 2023, the US Department of Justice charged 40 employees of the Chinese Ministry of Public Security’s 912 Special Projects Working Group, alleging their involvement in what is likely the ‘Spamouflage’ campaign.

The focus of ‘Spamouflage’ has now pivoted to the contest between Biden and Trump. Many of the images are evidently AI-generated, reflecting a common tactic among threat actors, who use the technology to create visually striking, high-quality deep fakes.

OpenAI and Microsoft recently worked together to disrupt threat actors using their AI technologies for propaganda and deep fake purposes.

However, deep fake images continue to slip past content detection systems and reach millions of people.

Narratives shaping the ‘Spamouflage’ campaign

The ISD, established in 2006 as a non-profit, non-partisan organization with the purpose of tackling misinformation, identified several key narratives among the content:

Divisiveness of the election: This narrative portrays the election as a catalyst for further division that will worsen America’s existing problems. Images often show Biden and Trump in confrontational poses, underscoring the deep-seated political divisions being exploited.

Negative focus on Biden: The campaign appears to disproportionately target President Biden with negative narratives, including accusations of corruption and drug use. This could be a strategic choice or simply reflect his status as the incumbent.

Ambiguous stance on Trump: Interestingly, the content related to Trump often carries an ambiguous tone that could be construed as positive.

Narratives on national decay: A significant portion of the content focuses on painting a grim picture of the US, touching on urban decay, the opioid crisis, and gun violence to foster a sense of chaos.

Questioning election integrity: Although this is not a primary focus, some content casts doubt on the integrity of the election.

Abortion policy: Abortion appears in some images, with content suggesting that Biden has failed to protect abortion rights, juxtaposed with claims that Trump seeks a federal abortion ban.

Spamouflage could have international influence

The ‘Spamouflage’ network was primarily active in the US, but India Today’s OSINT team discovered evidence of the campaign’s activities in India. It’s impossible to know how many of these kinds of campaigns are currently active worldwide.

Meta responded swiftly to the campaign, removing thousands of linked accounts. X, however, was criticized for its sluggish response.

Despite the extensive efforts of the ‘Spamouflage’ campaign, its effectiveness in terms of real engagement from social media users remains questionable.

The ISD report suggests that most of the content generated by this network fails to resonate with audiences, leading to minimal interaction.

It has to be said that most of the posts are downright ridiculous. You can see why they’d be immediately dismissed.

However, research has shown that deep fake imagery has a tangible effect on human decision-making, so it is too soon to write off the campaign’s impact.

In any case, the ‘Spamouflage’ campaign again reminds us of AI’s role in a rising tide of sophisticated misinformation.