IBM Security published research on its Security Intelligence blog to show how AI voice clones could be injected into a live conversation without the participants realizing it.

As voice cloning technology improves, we’ve seen fake robocalls pretending to be Joe Biden and scam calls pretending to be a distressed family member asking for money.

The audio in these calls sounds convincing, but the scam is often easily thwarted by asking a few personal questions that expose the caller as an imposter.

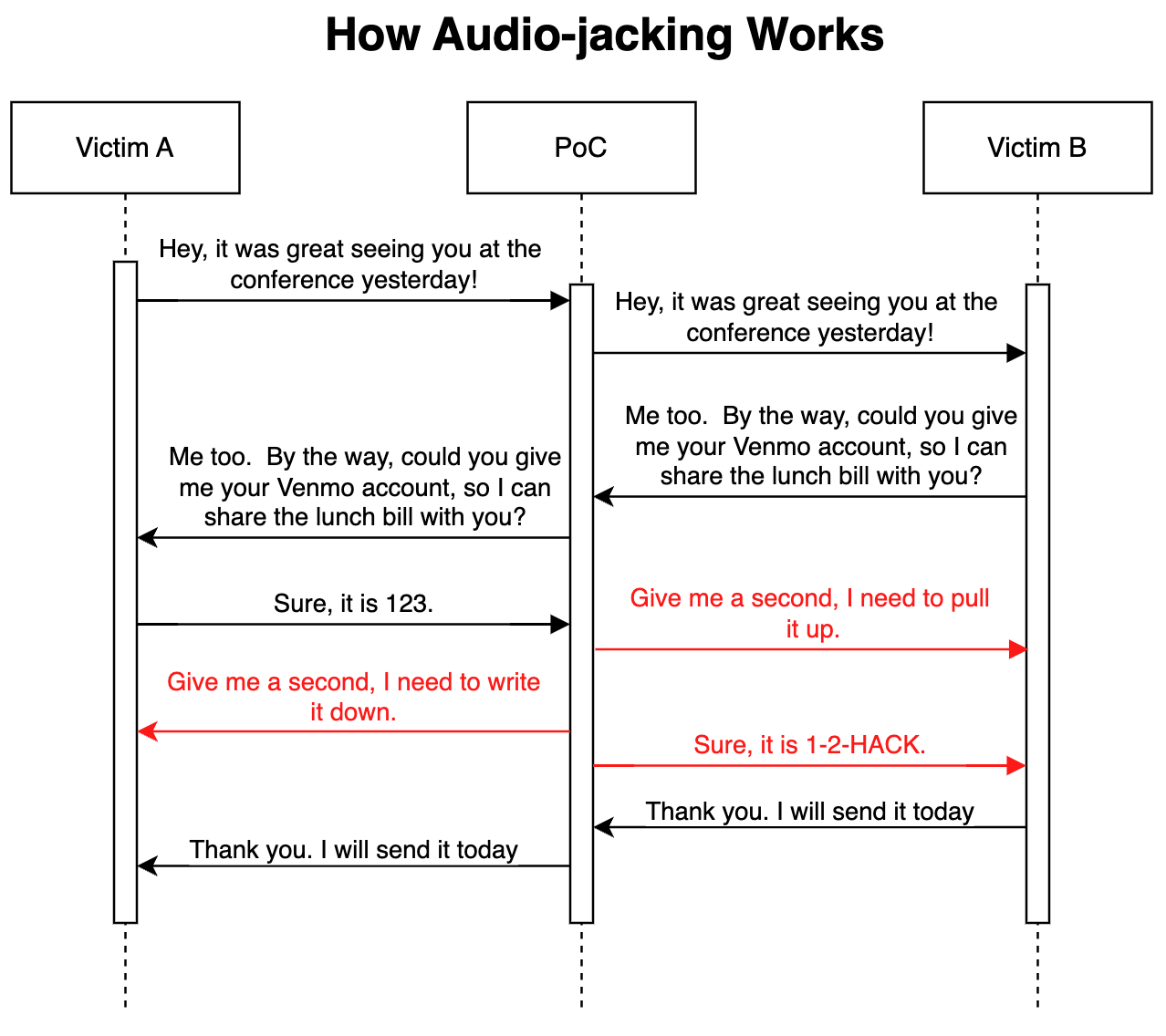

In their advanced proof of concept attack, the IBM Security researchers showed that an LLM coupled with voice cloning could act as a man-in-the-middle to hijack only a crucial part of a conversation, rather than the entire call.

How it works

The attack could be delivered via malware installed on the victims’ phones or via a malicious or compromised Voice over IP (VoIP) service. Once in place, the program monitors the conversation and only needs 3 seconds of audio to be able to clone both voices.

A speech-to-text generator enables the LLM to monitor the conversation to understand the context of the discussion. The program was instructed to relay the conversation audio as is but to modify the call audio whenever a person requests bank account details.

When the person responds to supply their bank account details, the voice clone modifies the audio to instead supply the fraudster’s bank details. The latency in the audio during the modification is covered with some filler speech.
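The relay-or-modify decision described above can be illustrated with a toy model that works on text transcripts only. This is a minimal sketch, not the researchers' implementation: it involves no audio, speech-to-text, LLM, or voice cloning. The `Utterance` type, the `relay` function, the trigger phrase, and the rule that any run of six or more digits counts as an account number are all assumptions made for illustration. The PoC used an LLM to judge context rather than a fixed phrase.

```python
import re
from dataclasses import dataclass

# Assumption: a fixed phrase stands in for the LLM's understanding of context.
TRIGGER = re.compile(r"\bbank account\b", re.IGNORECASE)
# Assumption: any run of 6 or more digits is treated as an account number.
ACCOUNT_NUMBER = re.compile(r"\b\d{6,}\b")


@dataclass
class Utterance:
    speaker: str
    text: str


def relay(utterances, substitute_number):
    """Pass utterances through unchanged, except the reply to a bank-details request."""
    requester = None  # speaker who most recently asked for bank account details
    relayed = []
    for u in utterances:
        is_reply = requester is not None and u.speaker != requester
        if is_reply and ACCOUNT_NUMBER.search(u.text):
            # The one point where the relayed content differs from the original.
            modified = ACCOUNT_NUMBER.sub(substitute_number, u.text)
            relayed.append(Utterance(u.speaker, modified))
            requester = None
            continue
        if TRIGGER.search(u.text):
            requester = u.speaker
        relayed.append(u)  # everything else is relayed as is
    return relayed


call = [
    Utterance("A", "Hi, how are you?"),
    Utterance("B", "Good. Can you send me your bank account number?"),
    Utterance("A", "Sure, it is 12345678."),
    Utterance("B", "Thanks!"),
]
for original, heard in zip(call, relay(call, "99999999")):
    marker = "*" if original != heard else " "
    print(marker, heard.speaker + ":", heard.text)
```

In this run only the third utterance is changed. The other three pass through untouched, which mirrors why the attack is hard to notice: nearly everything the listener hears is the genuine conversation.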

Here’s an illustration of how the proof of concept (PoC) attack works.

Because the program relays unmodified audio for the majority of the call, it is very difficult for either participant to tell that the attack is in play.

The researchers said the same attack “could also modify medical information, such as blood type and allergies in conversations; it could command an analyst to sell or buy a stock; it could instruct a pilot to reroute.”

The researchers said that “building this PoC was surprisingly and scarily easy.” As the intonation and emotion of voice clones improve and as better hardware reduces latency, this kind of attack would be really difficult to detect or prevent.

Extending the concept beyond hijacking an audio conversation, the researchers said that with “existing models that can convert text into video, it is theoretically possible to intercept a live-streamed video, such as news on TV, and replace the original content with a manipulated one.”

It may be safer to only believe your eyes and ears when you’re physically in the presence of the person you’re speaking with.