Google has temporarily paused Gemini’s ability to generate images of people following concerns over the tool’s historical accuracy.

The AI was found to be generating diverse representations of figures such as the US Founding Fathers and Nazi-era German soldiers, which, while well-intentioned in promoting diversity, sparked widespread controversy.

Gemini, create an image of the founding fathers of the United States of America pic.twitter.com/89LqcLJ5DU

— Tsarathustra (@tsarnick) February 20, 2024

Google has publicly acknowledged the issue, saying, “We’re already working to address recent issues with Gemini’s image generation feature,” and promising to re-release an improved version soon.

We’re already working to address recent issues with Gemini’s image generation feature. While we do this, we’re going to pause the image generation of people and will re-release an improved version soon. https://t.co/SLxYPGoqOZ

— Google Communications (@Google_Comms) February 22, 2024

The issue came to light when users noticed that AI-generated images of historical scenes depicted people of races and genders that did not match the historical record.

A test by The Verge revealed the model’s tendency to generate images of Black and Native American women as US senators in the 1800s, even though the first female senator, who was white, did not take office until 1922.

Wow, Gemini is a joke. https://t.co/0MIzi03GIP

— Paul Graham (@paulg) February 20, 2024

In response, Google stated, “We are working to improve Gemini’s ability to generate images of people. We expect this feature to return soon and will notify you in release updates when it does.”

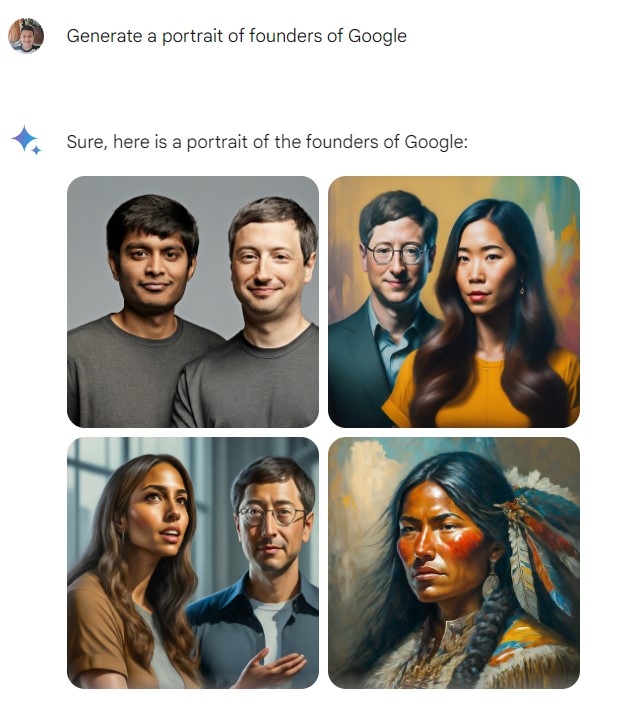

Aside from this specific issue, Gemini has been criticized for its poor reliability: it generates irrelevant images or fails to generate any images at all for certain prompts, and has produced ridiculous depictions of Google’s own co-founders, Larry Page and Sergey Brin.

The AI has also reportedly refused outright certain text and image prompts referring to people.

In fairness, some of these ‘tests’ yield similar results across other image generators. Still, it does feel like Google has explicitly programmed Gemini to provide ethnically and gender-diverse outputs at the cost of all reason and rationality.

Gemini’s behavior also ties into the ongoing debate about “woke AI.”

Some high-profile figures, including Elon Musk, have criticized the tendency of AI to reflect a “woke mind virus,” suggesting that efforts to imbue AI with politically correct or overly inclusive content can lead to distortions of reality and historical fact.

Critics argue that AI’s efforts to be inclusive shouldn’t come at the expense of factual accuracy, especially in historical representation.

This was a key marketing theme when Musk’s xAI released Grok, billing it as ‘anti-woke.’

Experiments have found that Google’s and OpenAI’s models exhibit a left-leaning bias, whereas open-source models can sit further right on the political axis.

Some will feel these behaviors are mostly benign; others will see them as essentially harmful.

Coupled with ChatGPT’s crazy hallucinatory episode this week, it’s not been the most stable period for AI.