Google has released two models from its family of lightweight, open models called Gemma.

While Google’s Gemini models are proprietary, or closed, the Gemma models have been released as “open models” and made freely available to developers.

Gemma comes in two sizes, 2B and 7B parameters, each with pre-trained and instruction-tuned variants. Google is releasing the model weights along with a suite of tools that developers can use to adapt the models to their needs.

Google says the Gemma models were built using the same technology that powers its flagship Gemini models. Several companies have released 7B models in an effort to deliver an LLM that retains usable functionality while being small enough to potentially run locally instead of in the cloud.

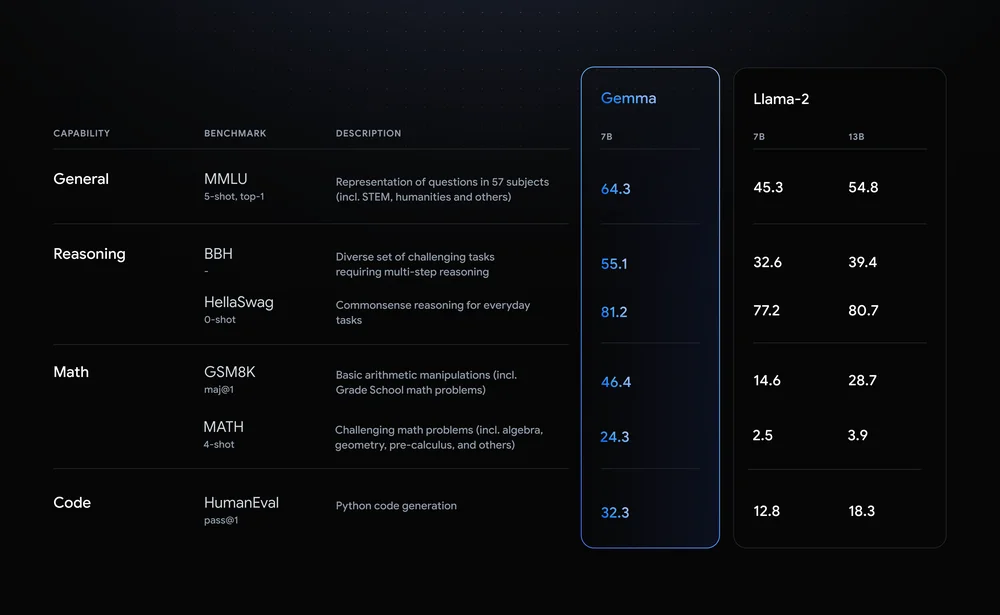

Llama-2-7B and Mistral-7B are notable contenders in this space, but Google says “Gemma surpasses significantly larger models on key benchmarks,” and offered this benchmark comparison as evidence.

The benchmark results show Gemma beating even the larger 13B version of Llama 2 in all four capability categories.

The really exciting thing about Gemma is the prospect of running it locally. Google has partnered with NVIDIA to optimize Gemma for NVIDIA GPUs. If you have a PC with one of NVIDIA’s RTX GPUs, you can run Gemma on your device.

NVIDIA says it has an installed base of more than 100 million RTX GPUs. That reach makes Gemma an attractive option for developers who are trying to decide which lightweight model to use as the basis for their products.

NVIDIA will also be adding Gemma support to Chat with RTX, its platform that makes it easy to run LLMs on RTX PCs.

Gemma isn’t technically open source, but only the usage restrictions in its license agreement keep it from earning that label. Critics of open models point to the inherent difficulty of keeping them aligned, but Google says it performed extensive red-teaming to make Gemma safe.

Google says it used “extensive fine-tuning and reinforcement learning from human feedback (RLHF) to align our instruction-tuned models with responsible behaviors.” It also released a Responsible Generative AI Toolkit to help developers keep Gemma aligned after fine-tuning.

For many applications, customizable lightweight models like Gemma may offer developers more utility than larger ones like GPT-4 or Gemini Pro. Running LLMs locally, without paying for cloud compute or API calls, is becoming more accessible every day.

With Gemma openly available to developers, it will be interesting to see the range of AI-powered applications that could soon be running on our PCs.