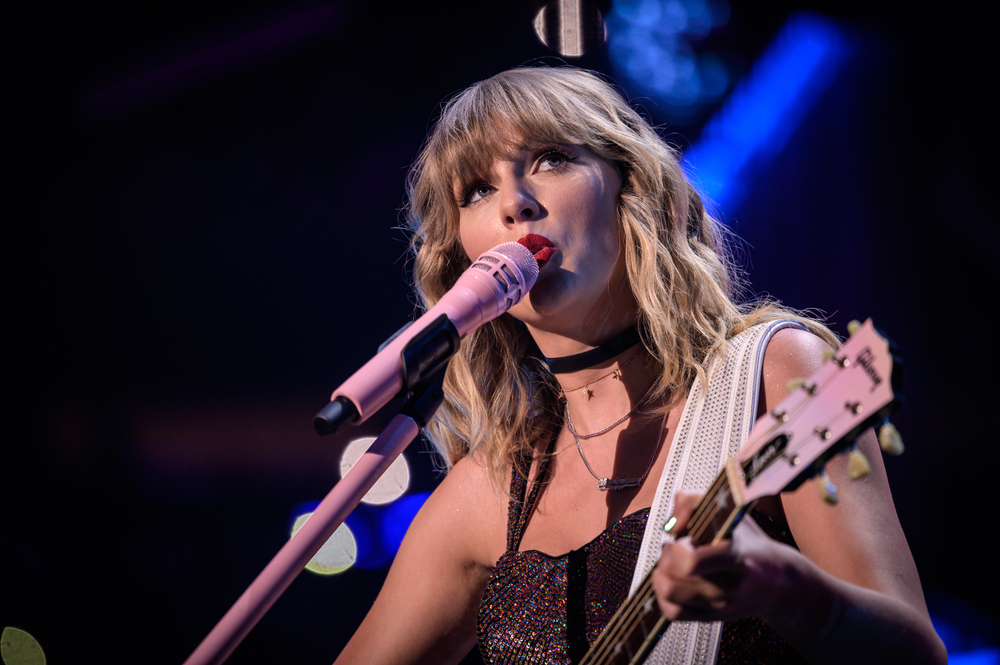

Explicit AI-generated deep fake images of pop star Taylor Swift were recently circulated on social media, igniting widespread uproar.

Despite strict platform rules against such content, the nonconsensual images, which depicted Swift in sexually explicit positions, stayed live for 19 hours and amassed over 27 million views and 260,000 likes before the posting account was suspended.

The incident adds to mounting questions about how effective social media policies are against deep fake misinformation.

Can it be stopped? Seemingly not. So how do we combat it? Well, AI detection services (similar to fact checkers) and ‘Community Notes,’ attached to posts on X, are two possible solutions, but both have their flaws.

The explicit images of Taylor Swift were circulated primarily on the social media platform X but also found their way onto other social media platforms, including Facebook, showing how controversial AI content spreads like wildfire.

A spokesperson from Meta responded to the incident, stating, “This content violates our policies, and we’re removing it from our platforms and taking action against accounts that posted it. We’re continuing to monitor and if we identify any additional violating content we’ll remove it and take appropriate action.”

Millions of users took to Reddit to discuss the ramifications and impacts of this and other recent viral deep fake incidents, including a photo of the Eiffel Tower on fire.

There’s a TikTok video doing the rounds, viewed millions of times, claiming the Eiffel Tower is on fire. The Eiffel Tower is not on fire. pic.twitter.com/IxlsKOqOsI

— Alistair Coleman (@alistaircoleman) January 22, 2024

While many would perceive these images as quite obviously fake, we cannot assume awareness among the wider public.

One commenter on Reddit said of the Taylor Swift incident, “Now I know how many people don’t know what deepfakes are,” implying low awareness of the issue.

Industry experts also weighed in, with ex-Stability AI executive Ed Newton-Rex arguing that tech companies’ gung-ho attitudes and poor engagement from decision-makers contribute to the problem.

Explicit, nonconsensual AI deepfakes are the result of a whole range of failings.

– The ‘ship-as-fast-as-possible’ culture of generative AI, no matter the consequences

– Willful ignorance inside AI companies as to what their models are used for

– A total disregard for Trust &…

— Ed Newton-Rex (@ednewtonrex) January 26, 2024

Ben Decker from Memetica, a digital investigations agency, commented on the lack of control over AI’s impacts: “This is a prime example of the ways in which AI is being unleashed for a lot of nefarious reasons without enough guardrails in place to protect the public square.”

He also pointed out the shortcomings in social media companies’ content monitoring strategies.

Reports suggest that Taylor Swift is now considering legal action against the deep fake porn site hosting the images.

There have been numerous similar incidents of explicit deep fakes, mostly involving women and children, sometimes with the intent of extorting victims, a practice known as “sextortion.”

AI images of child sex abuse are the darkest, most concerning impact of image generation technology. A US man was recently sentenced to 40 years in prison for possessing such images.

In the wake of the Swift incident and others like it, there have been increased calls for legislation to address this form of AI misuse.

US Representative Joe Morelle described the spread of the Swift deepfakes as “appalling,” advocating for urgent legal action. He noted, “What’s happened to Taylor Swift is nothing new,” underscoring the disproportionate impact of such content on women.

Deep fake misinformation was also widely discussed at the World Economic Forum in Davos, amid rising fears that manipulated content could influence stock markets, elections, and public opinion.

Efforts to combat deep fakes are underway, with startups and tech giants alike developing detection technologies.

Intel, for example, launched a product that it says can detect fake videos with 96% accuracy.

However, the challenge remains largely insurmountable for now, with the technology rapidly evolving and spreading across the internet.