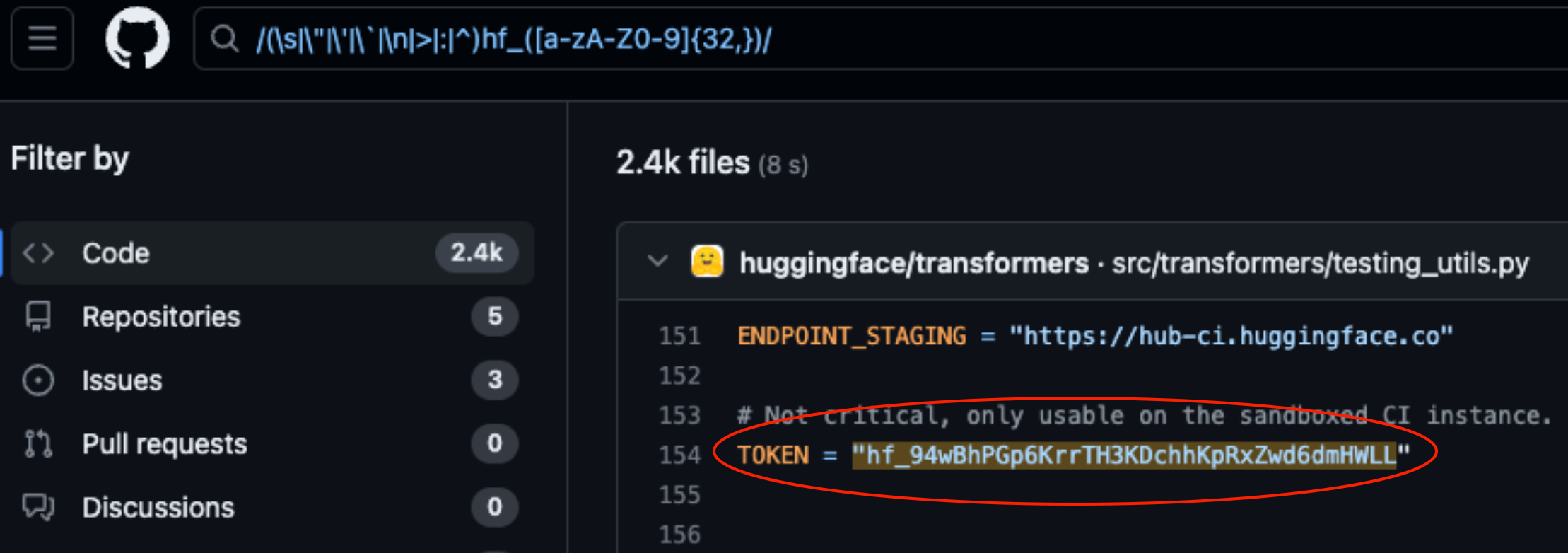

Lasso Security uncovered security vulnerabilities on HuggingFace and GitHub after finding 1681 exposed API tokens hardcoded into code stored on the platforms.

HuggingFace and GitHub are two of the most popular platforms where developers can host repositories that provide access to their AI models and code. Think of these repositories as folders in the cloud that are managed by the organizations that own them.

HuggingFace and GitHub make it easy for users to interact with hundreds of thousands of AI models and datasets via APIs. They also allow the organizations that own the models and datasets to use API access to read, create, modify, and delete repositories or files.

The permissions associated with your API token determine the level of access you have. With a bit of digging, Lasso was able to find a lot of tokens in code stored in repositories on the platforms.

Of the 1681 valid tokens they found, 655 users’ tokens had write permissions, 77 of which had full account permissions.
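To give a sense of how little digging it takes, here is a minimal sketch of a scan you could run over your own code before publishing it. This is not Lasso's method, which isn't detailed here. The pattern assumes a HuggingFace token looks like `hf_` followed by 34 letters or digits, and the function names are made up for illustration.

```python
import re
from pathlib import Path

# Assumption: a HuggingFace user token is "hf_" followed by 34 alphanumerics.
# Adjust the pattern if your tokens look different.
TOKEN_PATTERN = re.compile(r"\bhf_[A-Za-z0-9]{34}\b")


def find_token_candidates(text: str) -> list[str]:
    """Return every substring of text that matches the assumed token format."""
    return TOKEN_PATTERN.findall(text)


def scan_directory(root: str) -> dict[str, list[str]]:
    """Map each .py file under root to the token-like strings found in it."""
    hits: dict[str, list[str]] = {}
    for path in Path(root).rglob("*.py"):
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # skip files that cannot be read
        found = find_token_candidates(text)
        if found:
            hits[str(path)] = found
    return hits
```

Calling `scan_directory(".")` from the root of a project lists the Python files that contain token-like strings. A match only means the string has the right shape, not that the token is valid.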

Why is this a big deal?

Think of an API token like a key to your front door. It may be convenient to leave the key under your doormat, but if someone finds it then they gain access to your house.

When developers write a piece of code that needs to interact with their AI model or dataset, they sometimes get a little lazy. They may hardcode the tokens into their code instead of using more secure ways to manage them, such as environment variables or a secrets manager.
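One of the simplest alternatives to hardcoding is to read the token from an environment variable at runtime, so it never ends up in the repository. Here is a minimal sketch of that idea. The variable name `HF_TOKEN` and the helper `load_api_token` are assumptions for illustration, so use whatever name your setup expects.

```python
import os


def load_api_token(env_var: str = "HF_TOKEN") -> str:
    """Return the API token stored in the given environment variable.

    The default name HF_TOKEN is an assumption for this example.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail loudly rather than falling back to a hardcoded value.
        raise RuntimeError(
            f"Set the {env_var} environment variable; "
            "do not hardcode the token in source files."
        )
    return token
```

You would set the variable in your shell or deployment environment, then call `load_api_token()` wherever the code needs the token. The key stays out from under the doormat because it never appears in the code you push.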

Some of the tokens Lasso found gave them full read and write permissions to Meta’s Llama 2, BigScience Workshop, and EleutherAI. These organizations all have AI models that have been downloaded millions of times.

If Lasso had been the bad guys, they could have modified the models or datasets in the exposed repositories. Imagine if someone added some sneaky code to Meta’s repository and then millions of people downloaded it.

When Meta, Google, Microsoft, and others heard of the exposed API tokens, they quickly revoked them.

Model theft, training data poisoning, and combining third-party datasets and pre-trained models are all big risks to AI companies. Developers casually leaving API tokens exposed in code only makes it easier for bad actors to take advantage of these weaknesses.

You’ve got to wonder if Lasso’s engineers were the first to find these vulnerabilities.

If cybercriminals had found these tokens, they certainly would have kept very quiet while they opened the front door.