A recent experiment found that humans can correctly identify AI-generated human faces just 48.2% of the time.

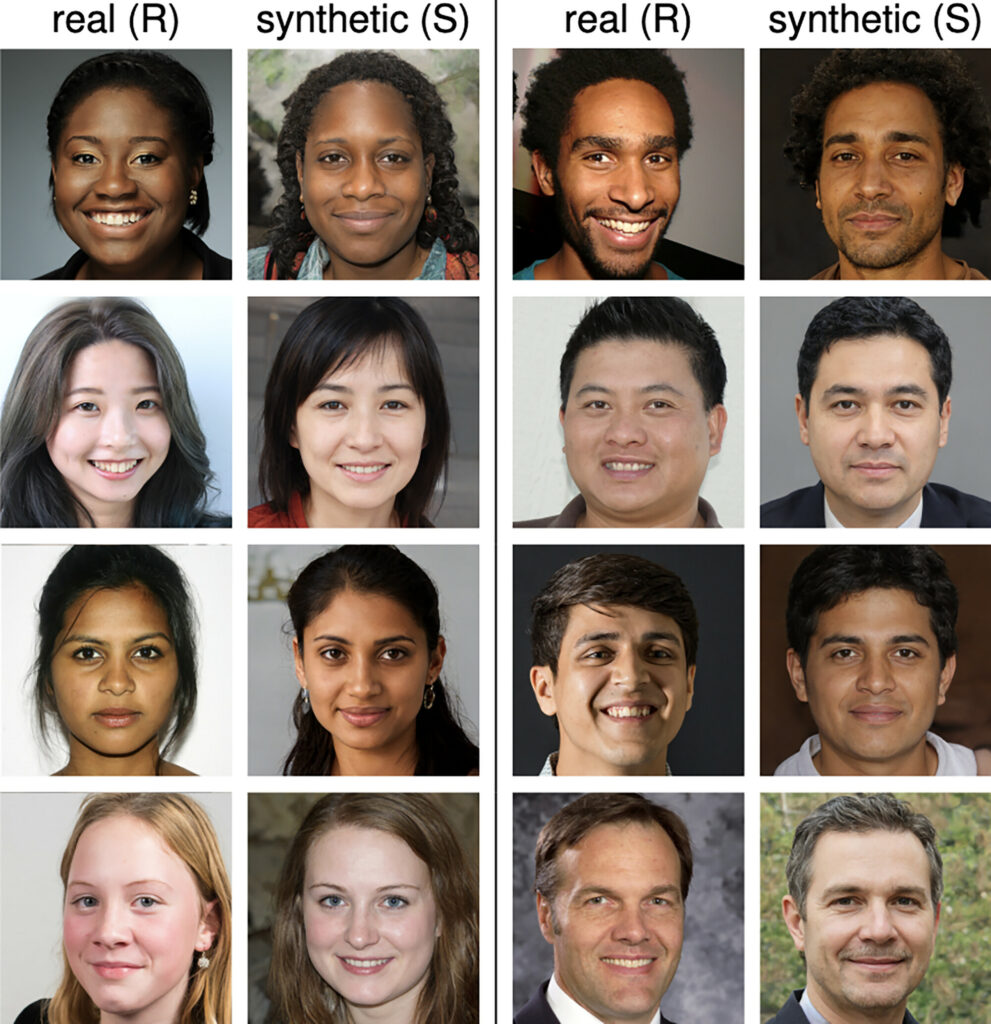

The study involved 315 participants tasked with differentiating between real and AI-generated faces. The faces were synthesized using StyleGAN2, a state-of-the-art engine capable of creating diverse and realistic human likenesses.

The results were both fascinating and slightly unnerving: participants correctly identified AI-generated faces only about 48.2% of the time, slightly worse than a coin flip.

This suggests that, to the average person, AI deep fake faces are virtually indistinguishable from those of real human beings.

You can see for yourself how lifelike these faces are by visiting this-person-does-not-exist.com, which displays realistic AI-generated images of virtually anyone, at any age or from any background. And this tool is now a few years old.

Delving deeper, the study also explored whether certain races and genders were more challenging to classify correctly.

It was observed that white faces, particularly male, were the most difficult for participants to correctly identify as real or synthetic.

According to the study, this may stem from the overrepresentation of white faces in the AI’s training dataset, which leads to more realistic white synthetic faces.

Even more intriguing was the discovery that participants rated synthetic faces as more trustworthy than real ones, albeit by a modest margin. This could hint at a subconscious preference for the average features that AI tends to generate; the study notes that previous research has found average faces to be perceived as more trustworthy.

A subtle increase in the likelihood of someone interpreting an AI image as more trustworthy than a real one is very worrying once scaled up to population levels.

The brain’s interpretation of AI-generated faces is complex

A second recent study tasked participants with identifying whether a face was real or not while engaged in a task designed to distract them.

They could not consciously differentiate between the two, but things became more confusing when their brain activity was measured using an electroencephalogram (EEG).

Approximately 170 milliseconds after participants viewed the faces, their brains’ electrical activity differed depending on whether they were looking at real or synthetic images.

It seemed like the unconscious mind ‘knew’ when an image could be AI-generated better than the conscious mind. But despite this, participants couldn’t consciously label AI faces with any robust degree of confidence.

We could speculate that there’s a conscious layer of interpretation where we give AI-generated faces ‘the benefit of the doubt’ even when we’re unsure.

After all, we’re inherently wired to identify and trust human faces, so it’s exceptionally difficult to detach oneself from that and isolate inconsistencies that may reveal an AI-generated image.

It’s almost as if initial suspicions give way to a more affirmative confirmation that the person is, in fact, real when they’re not – a form of AI-instigated apology.

Whatever the explanation for this quirk, AI-generated deep fakes are one of the technology’s priority risks, and a recent scandal hit a New Jersey school.

Deep fake scams are a regular occurrence, and there are palpable worries that fake AI-generated content could affect voting behaviors.