During her visit to the UK for the AI Safety Summit, US Vice President Kamala Harris announced that 30 countries had joined the US in endorsing its proposed guardrails for the military use of AI.

The announcement of the “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy” was posted to the US Department of State website on 1 November, with additional details of the framework published on 9 November.

In her statement regarding the framework, Harris said, “To provide order and stability in the midst of global technological change, I firmly believe that we must be guided by a common set of understandings among nations.”

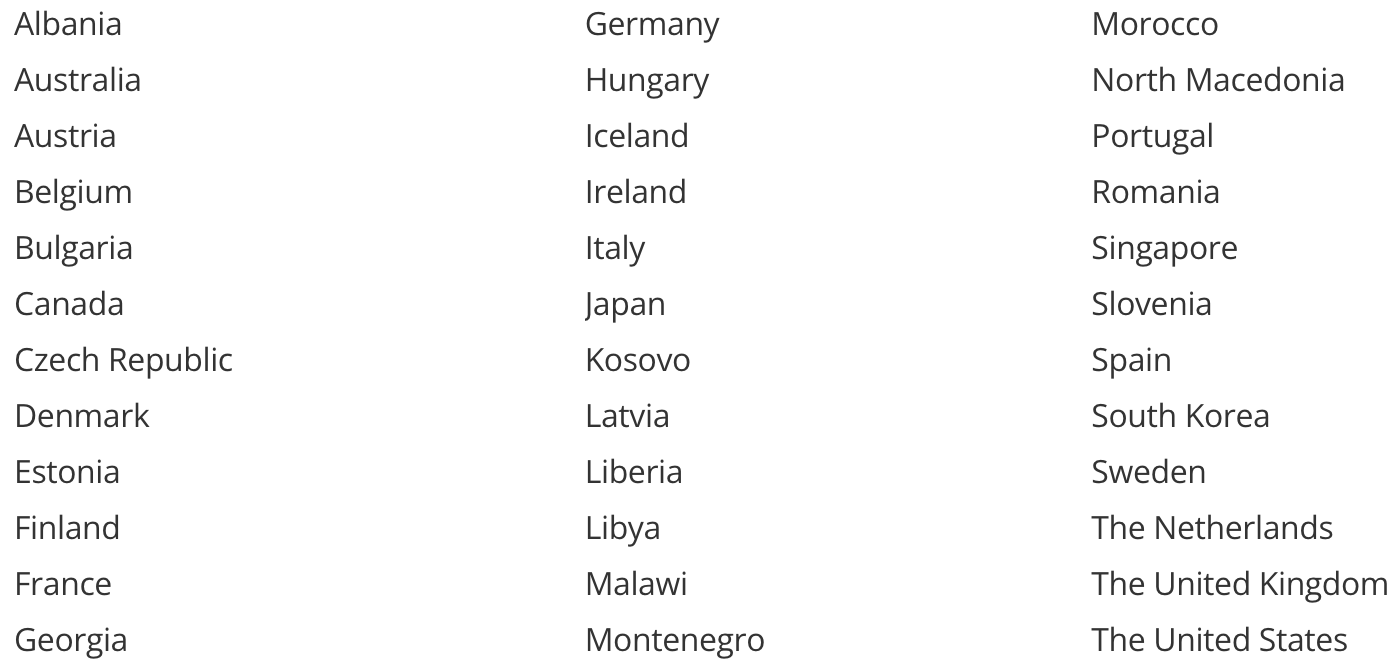

These are the countries that pledged their support for the initiative.

Notably absent from this list are China and Russia. China did, however, endorse the Bletchley Declaration, pledging its in-principle support for the safe development of AI in general.

Russia and China’s decision not to endorse the military AI declaration may have more to do with who authored the document than with their views on its substance.

Also missing from the list are Israel and Ukraine, two countries currently receiving billions in military support from some of the endorsing states.

Because both are US allies, their decision not to endorse the pledge is more likely explained by the fact that each is currently using AI in the conflict it is fighting.

Israel uses AI in various military applications, including its Iron Dome system. Ukraine has courted controversy with autonomous AI drones that target soldiers and military equipment in its conflict with Russia.

What are they committing to?

The full details of what the signatories are agreeing to are published on the US Department of State website.

Here’s the short version, which sacrifices some substance for brevity:

- Make sure your military adopts and uses these AI principles.

- Your military AI use must comply with international law and protect civilians.

- Top brass must oversee the development and deployment of military AI.

- Proactively minimize unintended bias in military AI capabilities.

- Be careful when you make or use AI weapons.

- Make sure your AI defense tech is transparent and auditable.

- Train those who use or approve the use of military AI capabilities so they don’t blindly trust the AI when it gets it wrong.

- Clearly define what you plan to do with your military AI tech and design it so it does only that.

- Keep testing military AI capabilities that self-learn or are continuously updated to make sure they remain safe.

- Have an off-switch in case your AI weapon misbehaves.

These are laudable ideals, but it is doubtful whether defense organizations have the technological, or even logistical, means to live up to them.

Will they be enforceable? Signing the pledge confirms in-principle endorsement but carries no legal obligation.

Signing a document that says “We’ll be careful when using AI in our military” also implies that the country fully intends to use AI as a weapon, albeit in a “responsible and ethical” way.

At least these countries acknowledge the potential of AI to amplify the harm that conventional weapons already cause. Has this framework made the world a little safer, or just shone a spotlight on what inevitably lies ahead?