MedARC develops foundational AI models for medicine, and their latest model, MindEye, can tell what you’ve been looking at.

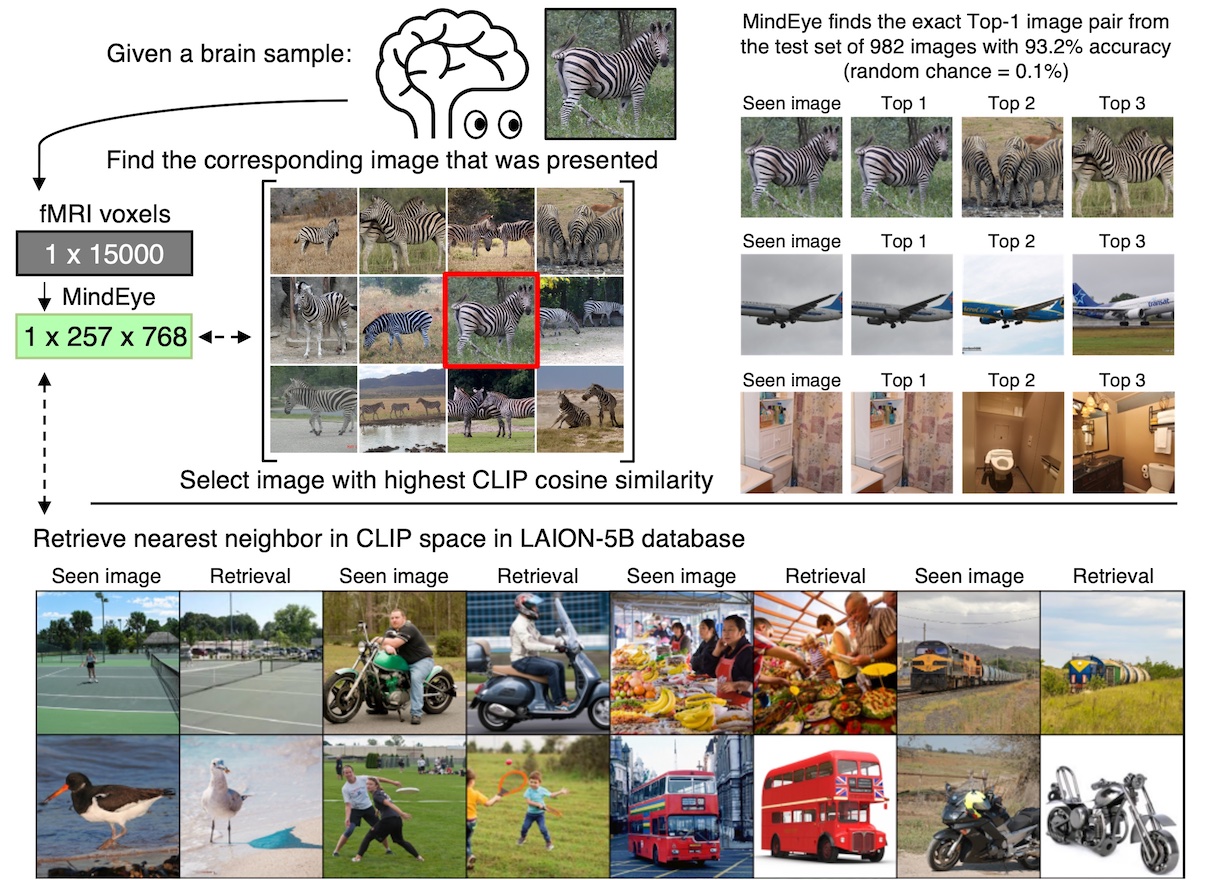

MindEye is an fMRI-to-image AI model that maps functional magnetic resonance imaging (fMRI) recordings of brain activity to OpenAI’s CLIP image space.
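To make the idea of mapping brain activity into an embedding space more concrete, here is a minimal sketch. It is not MedARC's method: it swaps in plain ridge regression as a stand-in, and the voxel counts, embedding size, and data are all synthetic and hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to keep the toy example small.
n_train, n_test, n_voxels, embed_dim = 400, 50, 200, 32

# Synthetic stand-ins: random "voxel" patterns and "image embeddings"
# related by a hidden linear map plus noise. Real fMRI data is far messier.
true_map = rng.normal(size=(n_voxels, embed_dim))
voxels = rng.normal(size=(n_train + n_test, n_voxels))
noise = 0.1 * rng.normal(size=(n_train + n_test, embed_dim))
embeddings = voxels @ true_map + noise

X_train, X_test = voxels[:n_train], voxels[n_train:]
Y_train, Y_test = embeddings[:n_train], embeddings[n_train:]


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: solve (X^T X + alpha I) W = X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def mean_cosine(a, b):
    """Average cosine similarity between matching rows of a and b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return float(np.mean(np.sum(a * b, axis=1)))


# Learn the voxel-to-embedding map, then predict embeddings for unseen scans.
weights = fit_ridge(X_train, Y_train)
predicted = X_test @ weights
score = mean_cosine(predicted, Y_test)
print(f"mean cosine similarity on held-out scans: {score:.3f}")
```

The point of the sketch is only the shape of the problem: scans go in, vectors in an image-embedding space come out, and quality can be judged by how close the predicted vectors are to the real ones.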

The fMRI scans they used came from the Natural Scenes Dataset (NSD). The NSD consists of whole-brain, high-resolution fMRI scans recorded from 8 healthy adult subjects while they viewed thousands of color natural scenes over the course of 30–40 scan sessions.

MindEye can analyze an fMRI scan and then retrieve the exact original image the person was looking at from the list of test images. Even if the images are very similar, like different photos of zebras, MindEye still identifies the correct one 93.2% of the time.

It can even identify similar images from a huge image database of billions of images like the LAION-5B database.
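The retrieval step described above can be pictured as a nearest-neighbor search in embedding space. The sketch below is an illustration under stated assumptions, not MindEye's actual code: the embeddings are random vectors, and the dimension, pool size, and `retrieve` helper are hypothetical.

```python
import numpy as np


def retrieve(predicted, candidates):
    """Return the index of the candidate most similar to `predicted`,
    along with the cosine similarity to every candidate."""
    pred = predicted / np.linalg.norm(predicted)
    cands = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    sims = cands @ pred
    return int(np.argmax(sims)), sims


rng = np.random.default_rng(0)
dim = 768        # hypothetical embedding size
pool_size = 300  # arbitrary number of candidate images

# Random vectors stand in for the image embeddings of the candidate pool.
image_embeddings = rng.normal(size=(pool_size, dim))

# Simulate a brain-derived embedding: the true image's vector plus noise.
true_index = 42
brain_embedding = image_embeddings[true_index] + 0.5 * rng.normal(size=dim)

best, sims = retrieve(brain_embedding, image_embeddings)
print("retrieved index:", best)
```

Searching a database with billions of entries would need an approximate nearest-neighbor index rather than this brute-force comparison, but the underlying idea of ranking candidates by similarity is the same.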

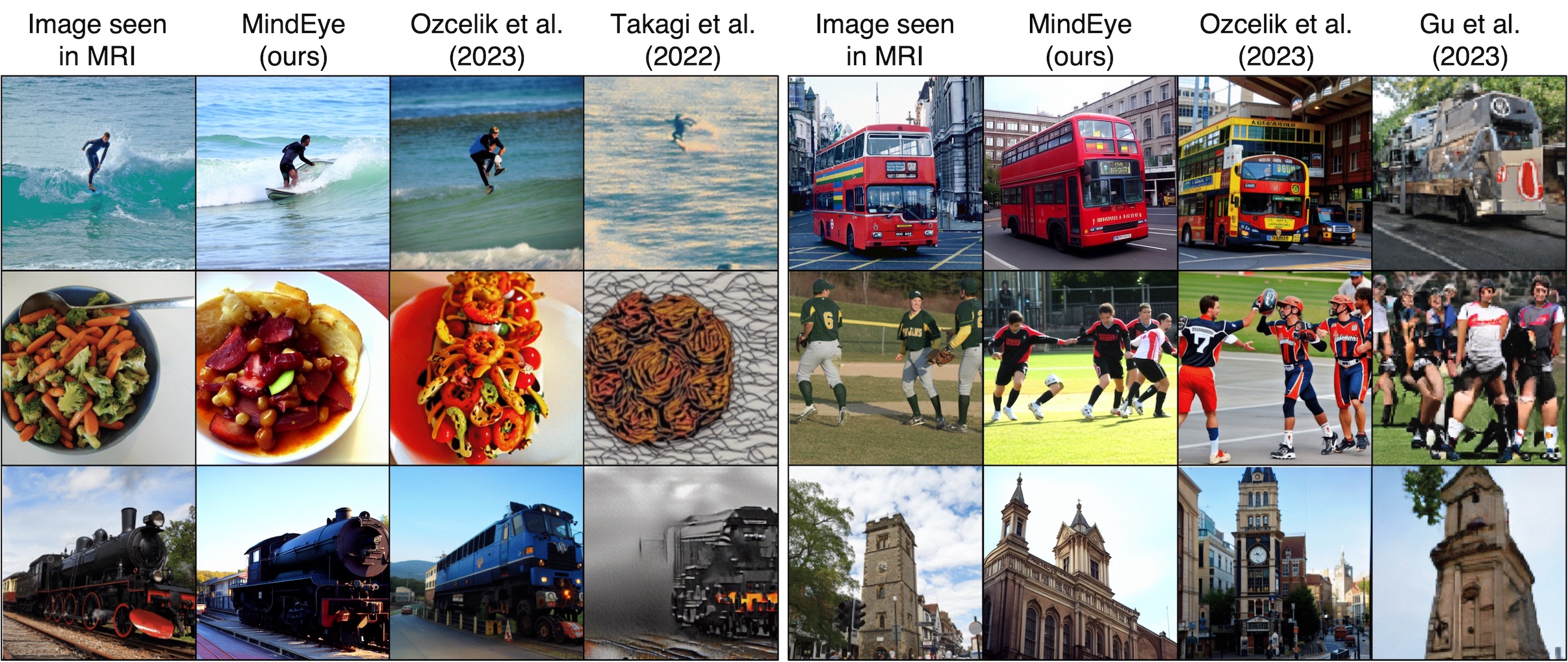

Once MindEye translates the fMRI scans to the CLIP image space, the resulting embeddings can be fed into a pretrained image generation model such as Stable Diffusion. MedARC used Versatile Diffusion to attempt to recreate the original image the subject was looking at.

MindEye doesn’t get this part 100% right but it’s still really impressive. Here are their results compared to the results of previous studies.

Potential and questions

Saying that MindEye can know what you’ve been looking at is a bit of an oversimplification. To get the fMRI data, the subjects had to spend about 40 hours lying in an MRI machine, and the set of images the model trained on was limited.

Even so, being able to gain insight into how a person perceives visual stimuli will be of great interest to neuroscientists.

Showing an image to a patient, scanning their brain, and then recreating their perception of the image could help with clinical diagnosis. The research paper explained that “patients suffering from major depressive disorder might produce reconstructions where emotionally negative aspects of images are more salient.”

The research could also help clinicians communicate with patients suffering from locked-in syndrome (pseudocoma).

For the full benefit of these applications to be realized, we’ll need to wait for better brain-computer interfaces or wearables that don’t require a person to lie in an MRI machine for hours.

MedARC acknowledges that their research is also cause for caution. “The ability to accurately reconstruct perception from brain activity prompts questions about broader societal impacts,” noted their research paper.

If effective methods that don’t require an MRI machine were eventually developed, it could become possible to read a person’s mind and know what they looked at.

The advances in applying AI to neuroscience are fascinating and could well help mental health clinicians. But they also raise a host of ethical and privacy concerns about whether we can continue to keep our thoughts to ourselves.