Researchers from the University of Chicago have developed a new tool that lets artists “poison” AI models if AI companies include their images in training datasets without consent.

Companies like OpenAI and Meta have faced criticism and lawsuits over their wholesale scraping of online content to train their models. For text-to-image generators like DALL-E or Midjourney to be effective, they need to be trained on large amounts of visual data.

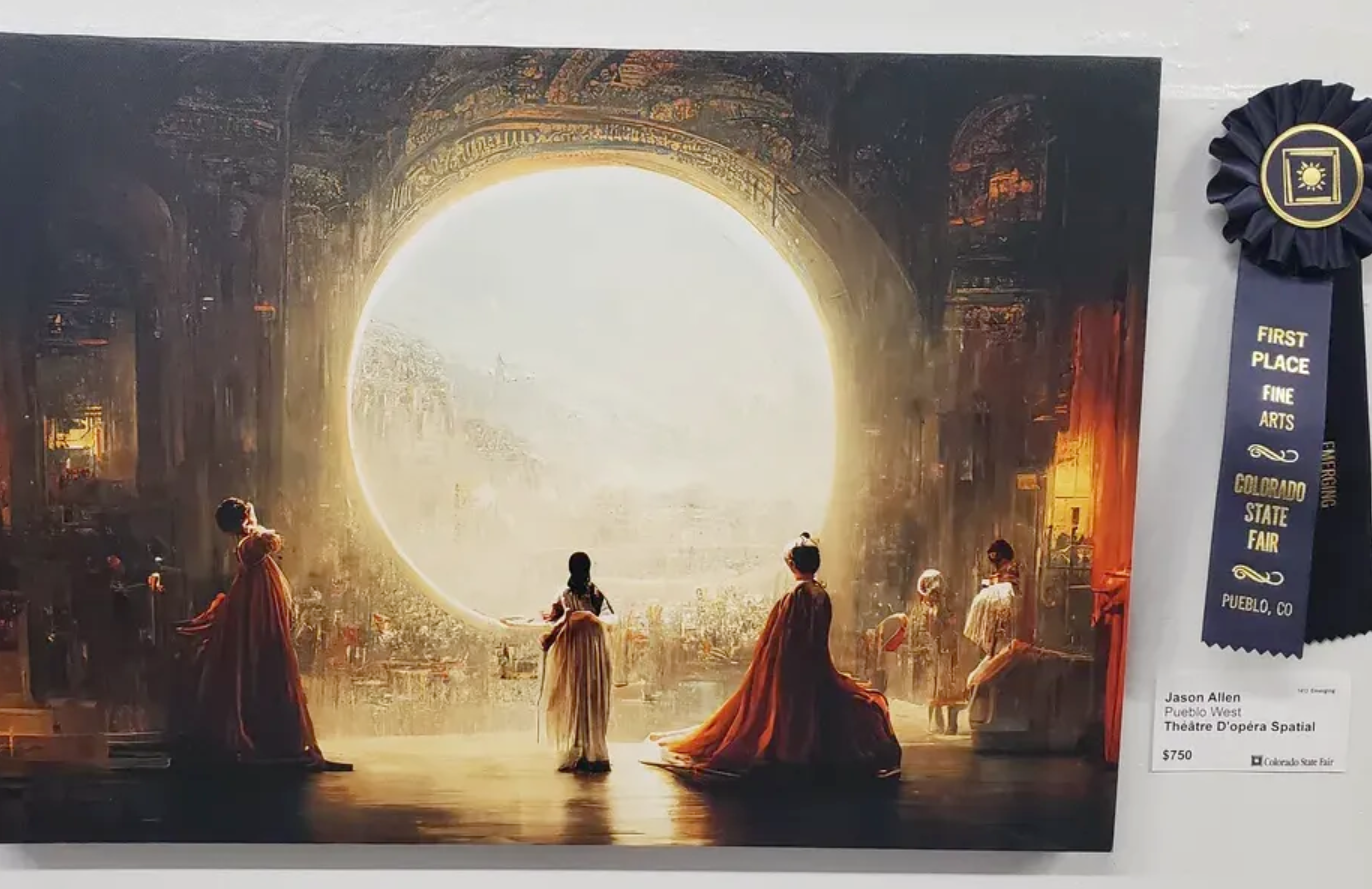

A number of artists have complained that these image generators can now produce images in their style after the companies behind them scraped their art without consent.

The researchers created Nightshade, a tool that makes subtle pixel-level changes to an image, invisible to the human eye, that render the image “poisonous” to AI models.

Generative AI relies on properly labeled data. If an AI model is trained on thousands of images of cats that are all labeled “cat”, it learns what a cat should look like and can generate one when you ask for a picture of a cat.

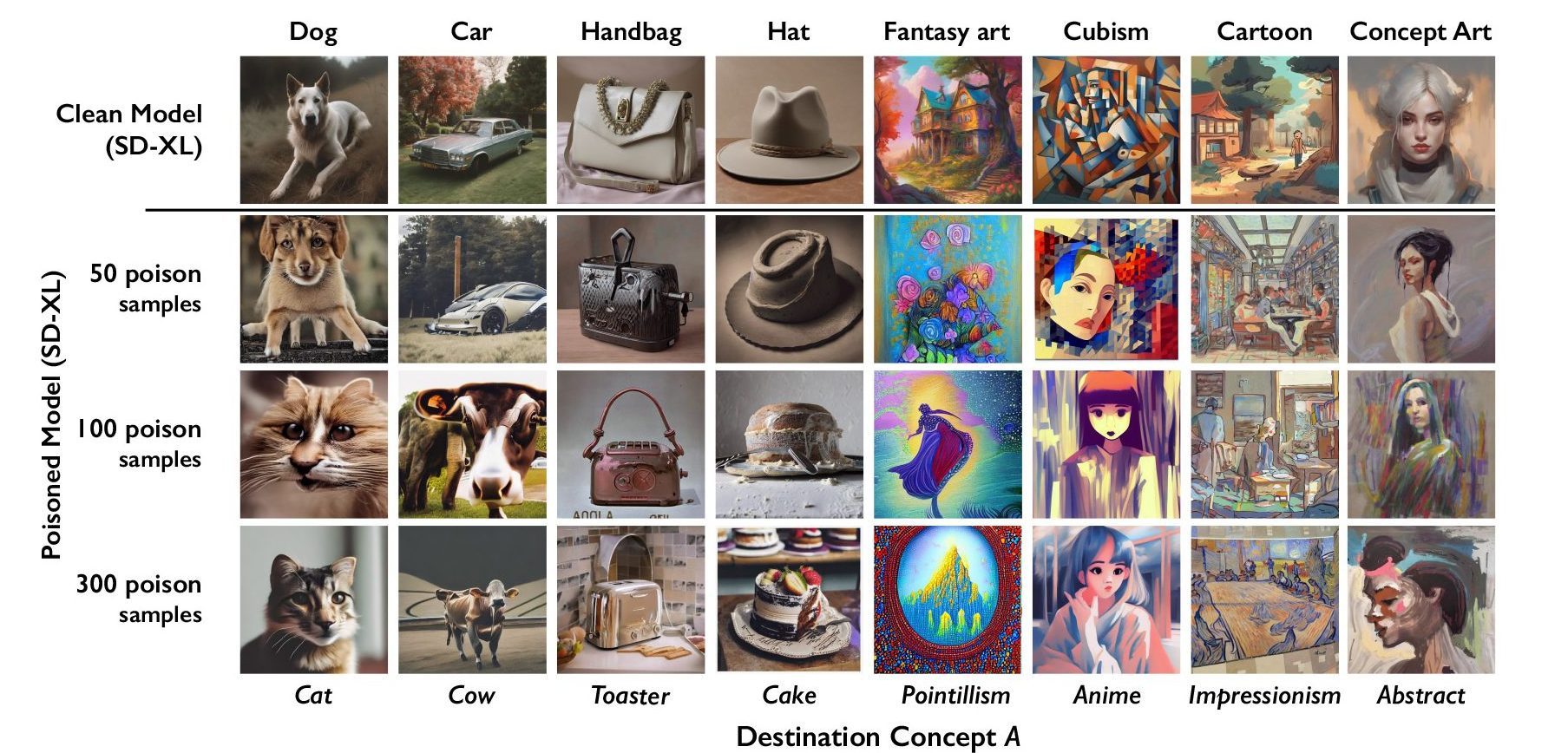

Nightshade’s changes cause a model to misinterpret what an image shows. For example, a model trained on an image of a castle poisoned by Nightshade might learn it as an old truck. The researchers found that as few as 100 poisoned images targeting a single prompt were enough to corrupt a model.
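To make the castle-and-truck example a little more concrete, here is a deliberately crude toy sketch of data poisoning. It is not Nightshade’s algorithm: it assumes a hypothetical 2-D “feature space” where a model “learns” a concept as the average of the features of every image captioned with that word. Poisoned samples are simply images captioned “castle” whose features look like a truck to the model. The names `CASTLE_CENTER`, `TRUCK_CENTER`, `train_castle` and the sample counts are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 2-D feature space: real images of each concept cluster around a center.
CASTLE_CENTER = np.array([0.0, 0.0])
TRUCK_CENTER = np.array([10.0, 0.0])


def sample_images(center, n):
    """Simulate n image feature vectors scattered around a concept center."""
    return center + rng.normal(scale=1.0, size=(n, 2))


def learned_concept(features):
    """Toy 'training': a concept is the mean of all features captioned with it."""
    return features.mean(axis=0)


def nearest(point):
    """Report which real concept cluster a learned concept ends up closest to."""
    d_castle = np.linalg.norm(point - CASTLE_CENTER)
    d_truck = np.linalg.norm(point - TRUCK_CENTER)
    return "castle" if d_castle < d_truck else "truck"


def train_castle(n_clean, n_poison):
    """Learn 'castle' from clean castle images plus poisoned ones."""
    clean = sample_images(CASTLE_CENTER, n_clean)
    # Poisoned samples: captioned "castle", but their features resemble a truck.
    poison = sample_images(TRUCK_CENTER, n_poison)
    return learned_concept(np.vstack([clean, poison]))


for n_poison in (0, 25, 300):
    concept = train_castle(n_clean=100, n_poison=n_poison)
    print(f"{n_poison:>3} poisoned images -> learned 'castle' looks like: {nearest(concept)}")
```

With these settings, the learned “castle” concept stays near the real castle cluster with 0 or 25 poisoned images and drifts closer to the truck cluster with 300. The toy only shows the basic idea of mismatched image-caption pairs pulling a concept off course; it does not model the optimized perturbations Nightshade uses or why so few images suffice against real models.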

Nightshade is bad news for AI image generators

A model trained on poisoned data could be made to think that images of cakes were hats, or that toasters were handbags. The corruption is not limited to the specific word Nightshade targets; it also spreads to related concepts. Corrupting the “dog” label, for example, would also affect terms like “puppy”.

If enough artists begin using Nightshade, it could make companies a lot more careful about getting consent before grabbing images.

The research team will incorporate Nightshade into Glaze, another tool they developed. Glaze makes subtle changes to an image so that AI models misread its style. For example, an artist who wants to protect the style of their work can use Glaze to make a model see it as “impressionist” when it’s actually “pop art”.

The fact that tools like Nightshade work so effectively highlights a vulnerability in text-to-image generators that could be exploited by malicious actors.

Companies like OpenAI and Stability AI say that they will respect the “do not scrape” opt-outs that websites can add to their robots.txt files. If images poisoned by Nightshade aren’t scraped, the models remain unaffected.

However, malicious actors could poison a large number of images and make them available for scraping with the intention of damaging AI models. Countering that would likely require better detection of mislabeled or poisoned images, or human review.

Nightshade will make artists feel a little more secure about putting their content online but could cause serious problems for AI image generators.