Tesla is set to flip the switch on its new $300M supercomputer on Monday in a boost to its full self-driving (FSD) program.

The approach Tesla is taking with its self-driving technology requires an enormous amount of computing power.

Elon Musk gave a live demo of Tesla’s FSD V12 a few days ago, and while it performed well, there were a few glitches. The system is still in beta and is an ambitious take on self-driving because it relies purely on cameras, with no assistance from radar or lidar sensors.

In a tweet, Musk said, “What is also mindblowing is that the inference compute power needed for 8 cameras running at 36FPS is only about 100W on the Tesla-designed AI computer. This puny amount of power is enough to achieve superhuman driving!”

That may be true of the computing power required in the vehicle, but it’s only possible once the AI has been properly trained.
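Musk’s in-car figures are easy to sanity-check with a little arithmetic. The Python sketch below is purely illustrative (it is not Tesla code): it multiplies out the total frame rate and divides the quoted 100W by it, assuming that 100W is a steady average draw spread evenly across every frame.

```python
# Back-of-the-envelope check of the in-car inference figures from Musk's tweet.
# Assumption: the quoted 100W is a constant average draw shared evenly by all frames.
NUM_CAMERAS = 8
FPS_PER_CAMERA = 36
POWER_WATTS = 100.0


def frames_per_second(cameras: int, fps: int) -> int:
    """Total camera frames the onboard computer must process each second."""
    return cameras * fps


def joules_per_frame(power_watts: float, total_fps: int) -> float:
    """Energy budget per frame: one watt is one joule per second."""
    return power_watts / total_fps


total_fps = frames_per_second(NUM_CAMERAS, FPS_PER_CAMERA)
energy_per_frame = joules_per_frame(POWER_WATTS, total_fps)

print(f"{total_fps} frames/s, about {energy_per_frame:.3f} J per frame")
# prints "288 frames/s, about 0.347 J per frame"
```

At roughly a third of a joule per frame, the in-car budget really is small; the expensive part, as Musk goes on to explain, is training.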

Musk explained the need for Tesla’s computing investment by saying that, “reaching superhuman driving with AI requires billions of dollars per year of training compute and data storage, as well as a vast number of miles driven.”

And that’s where Tesla’s new supercomputer comes in. The machine is built from 10,000 Nvidia H100 compute GPUs, which makes it one of the most powerful supercomputers in the world.

Tesla currently has 4 million cars on the road capable of adding to its training dataset, with 10 million expected within a few years. That’s a lot of video to process.

Tim Zaman, one of Tesla’s AI Engineering managers, said, “Due to real-world video training, we may have the largest training datasets in the world, hot tier cache capacity beyond 200PB - orders of magnitudes more than LLMs.”

Tesla’s new computer will deliver 340 FP64 PFLOPS for technical computing and 39.58 INT8 ExaFLOPS for AI applications.

If those numbers don’t mean much to you, consider that they exceed the figures for Leonardo, which is fourth on the list of the world’s top supercomputers.
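Dividing the cluster totals by the GPU count shows what each H100 contributes. This is a minimal Python sketch that assumes the quoted totals are simple sums of per-GPU peak figures across exactly 10,000 GPUs. The per-GPU results appear to line up with Nvidia’s published H100 SXM peak specs (34 TFLOPS of FP64 and 3,958 INT8 TOPS, the INT8 figure being quoted with sparsity), which suggests the cluster figures are theoretical peaks rather than measured benchmark results.

```python
# Per-GPU share of the quoted cluster figures.
# Assumption: totals are plain sums of per-GPU peaks over exactly 10,000 GPUs.
NUM_GPUS = 10_000
CLUSTER_FP64_PFLOPS = 340.0   # quoted FP64 figure, in petaFLOPS
CLUSTER_INT8_EXAOPS = 39.58   # quoted INT8 figure, in "exaFLOPS" (integer ops/s)

TERA = 1e12
PETA = 1e15
EXA = 1e18

# Convert each total to operations per second, split evenly, express in tera-units.
fp64_per_gpu_tflops = CLUSTER_FP64_PFLOPS * PETA / NUM_GPUS / TERA
int8_per_gpu_tops = CLUSTER_INT8_EXAOPS * EXA / NUM_GPUS / TERA

print(f"FP64 per GPU: {fp64_per_gpu_tflops:.1f} TFLOPS")
print(f"INT8 per GPU: {int8_per_gpu_tops:,.0f} TOPS")
# prints "FP64 per GPU: 34.0 TFLOPS" and "INT8 per GPU: 3,958 TOPS"
```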

Nvidia can’t keep up

Nvidia’s H100 GPUs train AI models up to 9 times faster than the A100 processors Tesla has relied on until now. Even so, that isn’t enough for Tesla’s needs.

So why doesn’t Tesla just buy more GPUs? Because Nvidia can’t produce them fast enough.

But Tesla isn’t waiting around for Nvidia to increase its production. For the last few years, it has been building its own supercomputer, Dojo, based on chips Tesla has designed in-house.

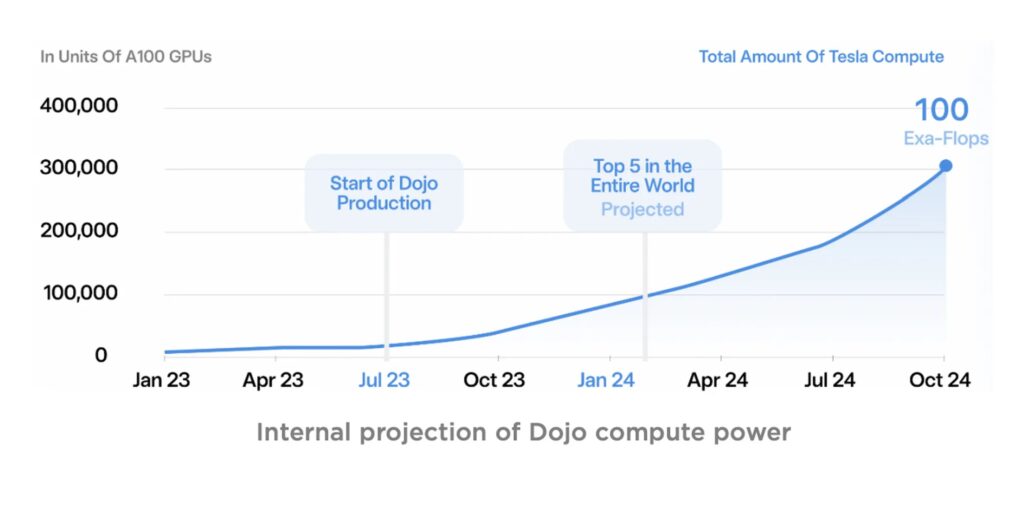

Dojo is expected to come online toward the end of 2024 and will be even more powerful than Tesla’s Nvidia cluster.

Source: Tesla

Being the production manager at Nvidia must be a tough job right now, given the insatiable demand for the company’s GPUs.

Interestingly, Musk was quoted as saying, “Frankly…if they [Nvidia] could deliver us enough GPUs, we might not need Dojo.”

We tend to think of Tesla as an electric car company, but it increasingly looks like a supercomputing AI company.

It will be interesting to see how the exponential increase in computing power fuels Musk’s ambitions with both Tesla and his xAI project.