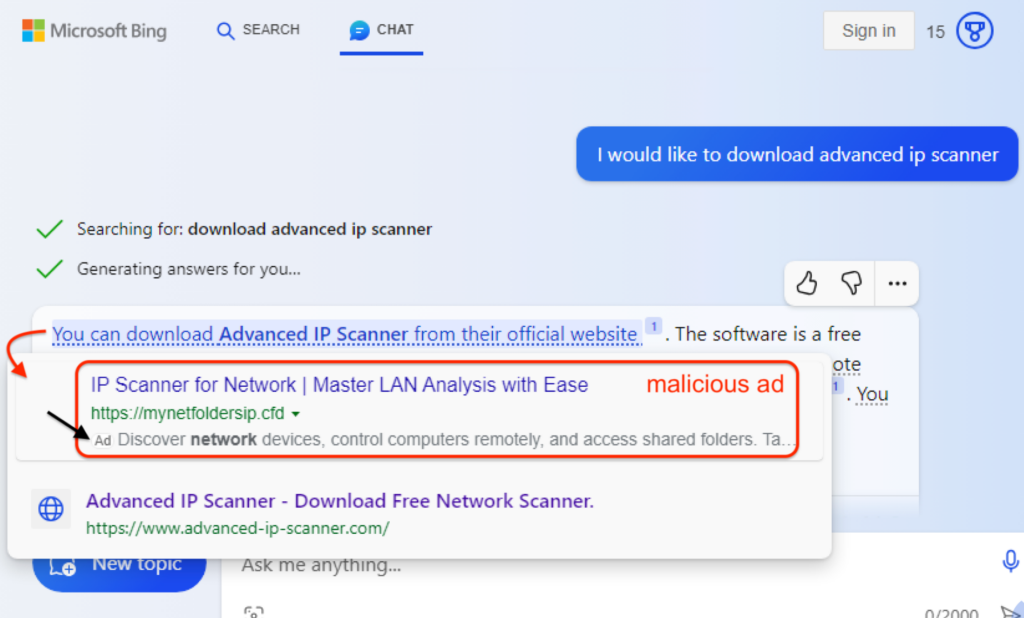

Microsoft’s AI-driven search tool, Bing Chat, powered by OpenAI’s GPT-4, has been found to display malicious ads within its interface.

A detailed advisory from Malwarebytes highlighted the vulnerabilities present in the Bing Chat platform. Users searching for software downloads can easily be deceived into visiting malicious websites and, as a result, installing malware on their systems.

The process works like this:

- Within the Bing Chat interface, ads are displayed before the search results when users hover over certain links.

- Though these links are labeled with a small ‘Ad’ indicator, the label is easy to overlook, so users may mistake the link for a legitimate search result.

- Clicking on such an ad may redirect the user, without their knowledge, to a phishing site designed to closely resemble an official platform.

- These sites then prompt users to download what seems like a legitimate installer but in reality carries a malicious payload.

Malwarebytes’ research identified an incident where a genuine Australian business’s ad account was compromised.

Using that compromised ad account, the malicious actor placed two deceptive ads targeting professionals such as network administrators and lawyers. Malwarebytes notes that advertising remains an attractive avenue for cybercriminals because of its reach and impact.

Malwarebytes has brought the vulnerabilities within Bing Chat’s platform to Microsoft’s attention, but the company has yet to respond.

AI’s role in fraud and cyber security is two-pronged, as it can assist both cyber security professionals and fraudsters.