In what is bound to be called “Pint Gate”, an opposition Labour MP shared a manipulated image of British Prime Minister Rishi Sunak pouring a sub-standard pint.

The incident has politicians pointing to it as further evidence of the need for better protection from AI disinformation.

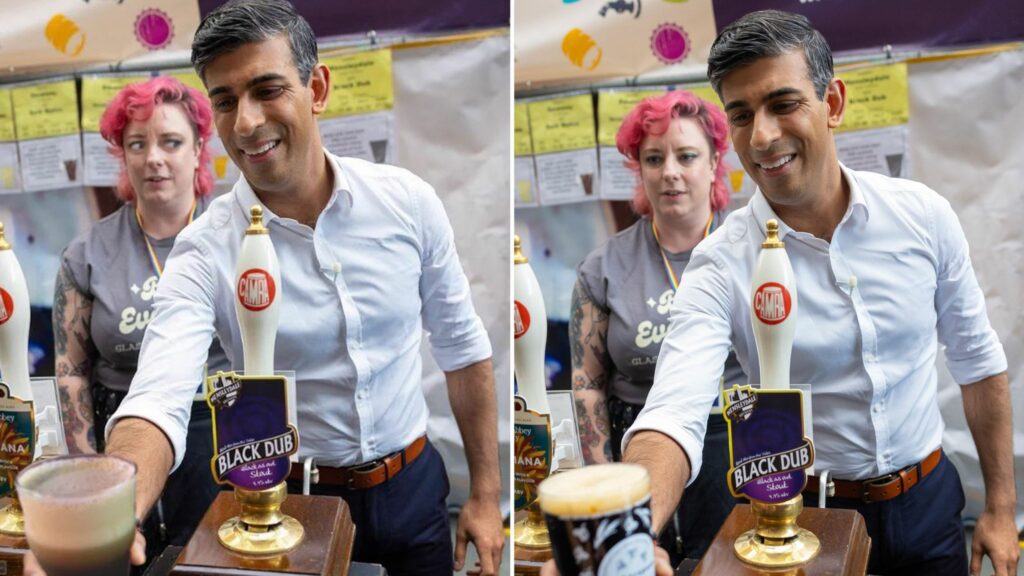

The original image was of Sunak pouring a perfectly good pint of beer at a beer festival while a barmaid looked on with a neutral expression. The side story is that he’s a teetotaller and had just raised duties on alcohol, albeit with beer prices being spared. So he’s already not winning any points with the common man.

A comedian had a little Photoshop fun with the image, making it look like Sunak had poured a lousy pint while the barmaid looked on with appropriate derision. The photo gained some traction on X, and opposition Labour MP Karl Turner eventually shared it, believing it was genuine.

Fake on the left and original on the right. Source: No. 10 Downing Street and Twitter

Being falsely accused of pouring a poor pint isn’t on par with being accused of paying off an adult film star, but it’s considered poor form in the UK. And in politics the optics are everything. And the optics lied.

My apologies for sharing what turns out to be a fake image of the PM. But can I just say that @RishiSunak need to stop telling deliberate lies to the nation. We desperately need a general election. Also my apologies for not attributing the image. I had no idea it was fake. https://t.co/djPbHxdWnJ

— Karl Turner MP (@KarlTurnerMP) August 2, 2023

Michelle Donelan, Secretary of State for Science, Innovation and Technology, tweeted, “In the era of deepfakes and digitally distorted images, it’s even more important to be able to have reliable sources of information you can trust… No elected member of parliament should be misleading the public with fake images. This is pretty desperate stuff from Labour.”

Turner promptly apologized once it was pointed out that the image was fake, while other politicians asked a valid question: how was he supposed to know?

Labour MP Darren Jones came to Turner’s defense and asked Donelan, “What is your department doing to tackle deep fake photos, especially in advance of the next election?”

I’ve highlighted the risk of deep fake photos, video and audio for some time.

With elections in the UK, EU and US next year it’s important we get a grip of this now.

How will people know if content is real or fake, otherwise? https://t.co/rNqeDu4YCA

— Darren Jones MP (@darrenpjones) August 2, 2023

The funny thing is that this wasn’t even an AI-generated image. It was a crudely Photoshopped one, made for satire rather than political subterfuge. But the credulity of people in general, and even of politicians like Turner, shows how vulnerable society is to misinformation such as fake images.

With US elections on the horizon, it’s not just the Brits who should be concerned about these issues.

We’d like to stop this, but how?

Wendy Hall, a computer science professor at the University of Southampton, said, “I think the use of digital technologies including AI is a threat to our democratic processes. It should be top of the agenda on the AI risk register with two major elections – in the UK and the US – looming large next year.”

Many AI-generated pictures, like those of Trump being arrested or of an explosion at the Pentagon, may simply have been created out of curiosity about what AI can produce. But what happens when, not if, people use these images, voices, and videos to intentionally influence political outcomes?

And when real evidence of wrongdoing comes to light, how do you begin to argue with someone who says, “Oh, that’s probably just an AI fake”?

In response to Darren Jones’s question, the Department for Science, Innovation and Technology said that it was “committed to ensuring that people have access to accurate information.”

It further stated that its new Online Safety Bill was intentionally kept “tech-neutral to keep pace with emerging technology.” In other words, they’re saying they’d love to make sure people can spot a fake, but AI is moving so fast that they have no idea how to do that.