As AI continues to embed itself in recruitment processes, it’s clear that the technology’s promise of efficiency comes with its own risks.

According to 2022 data, some 55% of companies were already using AI tools for recruiting.

While AI has accelerated traditional hiring practices, eliminating piles of CVs and resumes in the process, it’s also raised critical questions about fairness, bias, and the very nature of human decision-making.

After all, AIs are trained on human data, so they’re liable to inherit all the bias and prejudice they promise to eradicate.

Is this something we can change? What have we learned from recruitment AI so far?

Meta accused of discriminatory ad practices by human rights groups

Some 79% of job seekers reportedly use social media to look for jobs, and evidence suggests this is where discrimination begins.

Meta is currently facing multiple allegations from European human rights organizations claiming Facebook’s job ad targeting algorithm is biased.

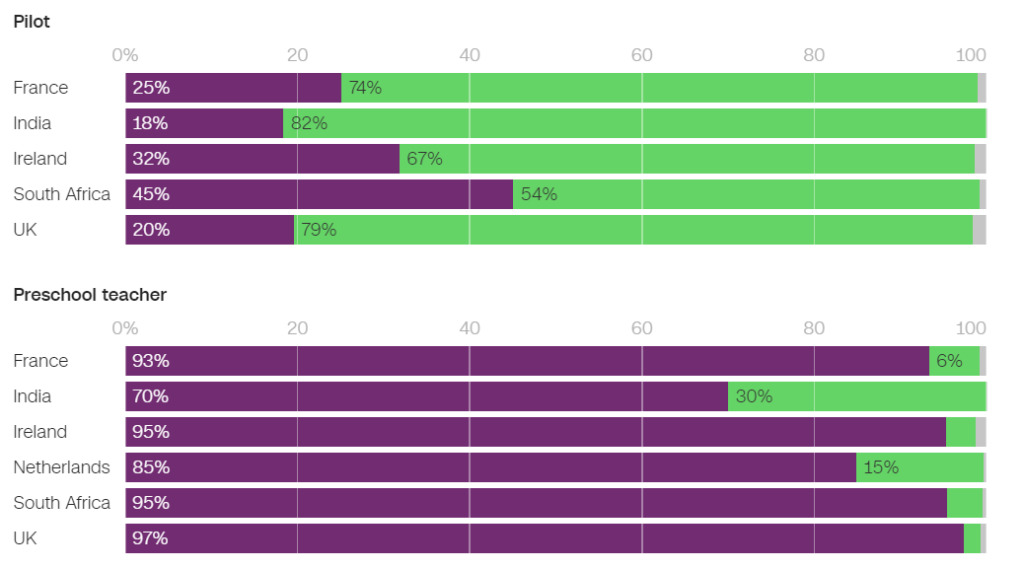

In June, the NGO Global Witness researched several auto-targeted Facebook job ads and found many perpetuated gender biases.

For example, men were significantly more likely to be shown ads related to engineering, whereas women were substantially more likely to be shown ads related to teaching.

In France, 93% of the users shown a preschool teacher job ad and 86% of those shown a psychologist job ad were women. Conversely, women made up only 25% of those shown a pilot job ad and a mere 6% of those shown a mechanic job ad.

In the Netherlands, 85% of the users shown a teacher job ad and 96% of those shown a receptionist job ad were women. Similar results were observed in many other countries, including the UK, India, and South Africa.
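To make the scale of the skew concrete, here is a minimal sketch that measures how far each ad's audience sits from an even split. It reads each reported figure as the share of women among the users shown the ad. The 50% parity baseline and the names `WOMEN_SHARE`, `skew_from_parity`, and `skew_report` are assumptions made for illustration, not part of Global Witness's methodology.

```python
# Share of women among users shown each job ad, as reported in the article.
WOMEN_SHARE = {
    ("France", "preschool teacher"): 0.93,
    ("France", "psychologist"): 0.86,
    ("France", "pilot"): 0.25,
    ("France", "mechanic"): 0.06,
    ("Netherlands", "teacher"): 0.85,
    ("Netherlands", "receptionist"): 0.96,
}


def skew_from_parity(women_share, parity=0.5):
    """Signed gap, in percentage points, between the observed share and parity.

    The 50% default is an illustrative assumption, not an official benchmark.
    """
    return round((women_share - parity) * 100, 1)


def skew_report(shares, parity=0.5):
    """Return (country, job, gap) rows, most male-skewed first."""
    rows = [
        (country, job, skew_from_parity(share, parity))
        for (country, job), share in shares.items()
    ]
    return sorted(rows, key=lambda row: row[2])


for country, job, gap in skew_report(WOMEN_SHARE):
    print(f"{country:12} {job:18} {gap:+.1f} pts")
```

A negative gap means the ad was shown mostly to men, a positive gap mostly to women. A fairer baseline might be the gender split of qualified candidates in each field rather than a flat 50%.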

In an interview with CNN, Naomi Hirst from Global Witness said, “Our concern is that Facebook is exacerbating the biases that we live within society and actually marring opportunities for progress and equity in the workplace.”

Together with Bureau Clara Wichmann and Fondation des Femmes, Global Witness has filed complaints against Meta to human rights and data protection authorities in France and the Netherlands.

The groups are urging an investigation into whether Meta’s practices breach human rights or data protection laws. If the allegations hold, Meta could face fines and sanctions.

In response, a Meta spokesperson stated, “The system takes into account different kinds of information to try and serve people ads they will be most interested in.”

This isn’t the first time Meta has faced such criticism – the company was hit with multiple lawsuits in 2019 and committed to changing its ad delivery system to avoid bias based on protected characteristics like gender and race.

Pat de Brún, from Amnesty International, responded to Global Witness’s findings with a scathing assessment of Facebook. “Research consistently shows how Facebook’s algorithms deliver deeply unequal outcomes and often reinforce marginalization and discrimination,” de Brún told CNN.

Amazon scraps secret AI recruiting tool that showed bias against women

Amazon developed an AI recruitment tool between 2014 and 2017 before realizing the tool was biased when screening candidates for software developer jobs and other technical posts. By 2018, Amazon had dropped the tool completely.

The AI system penalized resumes containing the words “women” and “women’s” and downgraded graduates of all-women’s colleges.

The system failed even after Amazon attempted to edit the algorithms to make them gender-neutral.

It later transpired that Amazon trained the tool on resumes submitted to the company over a 10-year period, most of which came from men.

Like many AI systems, Amazon’s tool was influenced by the data it was trained on, leading to unintended bias. This bias favored male candidates and preferred resumes that used language more commonly found in male engineers’ resumes.
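The mechanism can be illustrated with a toy scorer. The sketch below is not Amazon's system. It is a simple smoothed log-odds model, and the resumes in `HISTORY` are invented for illustration. It shows how a word that appears only in historically rejected resumes picks up a negative weight, even though nobody wrote a rule about gender.

```python
import math
from collections import Counter

# Hypothetical resumes as (text, was_hired). Invented data that mimics a
# history in which most successful applicants were men.
HISTORY = [
    ("java backend chess club captain", True),
    ("python systems executed migration", True),
    ("java distributed systems", True),
    ("python backend captured requirements", True),
    ("python backend women's chess club captain", False),
    ("java systems women's coding society", False),
]


def token_log_odds(history, smoothing=1.0):
    """Smoothed log-odds of each token appearing in hired vs. rejected resumes."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        # Count each token once per resume.
        (hired if was_hired else rejected).update(set(text.split()))
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    vocab = set(hired) | set(rejected)
    return {
        tok: math.log((hired[tok] + smoothing) / (n_hired + 2 * smoothing))
        - math.log((rejected[tok] + smoothing) / (n_rejected + 2 * smoothing))
        for tok in vocab
    }


def score(resume, weights):
    """Sum the learned weights of the distinct tokens in a resume."""
    return sum(weights.get(tok, 0.0) for tok in set(resume.split()))


weights = token_log_odds(HISTORY)
print("weight for women's:", round(weights["women's"], 2))
print("without:", round(score("python backend chess club captain", weights), 2))
print("with:   ", round(score("python backend women's chess club captain", weights), 2))
```

Two otherwise identical resumes receive different scores purely because one mentions “women’s”. Deleting that one word from the vocabulary would not necessarily help, since other terms that correlate with gender in the training data can carry the same signal.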

Furthermore, due to issues with the underlying data, the system often recommended unqualified candidates for various jobs.

The World Economic Forum said of Amazon’s recruitment system, “For example – as in Amazon’s case – strong gender imbalances could correlate with the type of study undertaken. These training data biases might also arise due to bad data quality or very small, non-diverse data sets, which may be the case for companies that do not operate globally and are searching for niche candidates.”

Amazon managed to repurpose a “watered-down version” of the recruiting engine for rudimentary tasks, such as eliminating duplicate candidate profiles from databases.

The company also established a new team to give automated employment screening another attempt, this time with a focus on promoting diversity.

Google battles its own problems with discrimination and bias

In December 2020, Dr. Timnit Gebru, a leading AI ethicist at Google, announced that the company had fired her.

The termination came after Dr. Gebru expressed concerns about Google’s approach to minority hiring and inherent bias in AI systems.

Prior to leaving the company, Dr. Gebru was set to publish a paper highlighting bias in Google’s AI models.

After submitting this paper to an academic conference, Dr. Gebru revealed that a Google manager asked her to retract the paper or remove the names of herself and the other Google researchers. When she refused to comply, Google accepted a resignation she had only conditionally proposed, effectively terminating her position immediately.

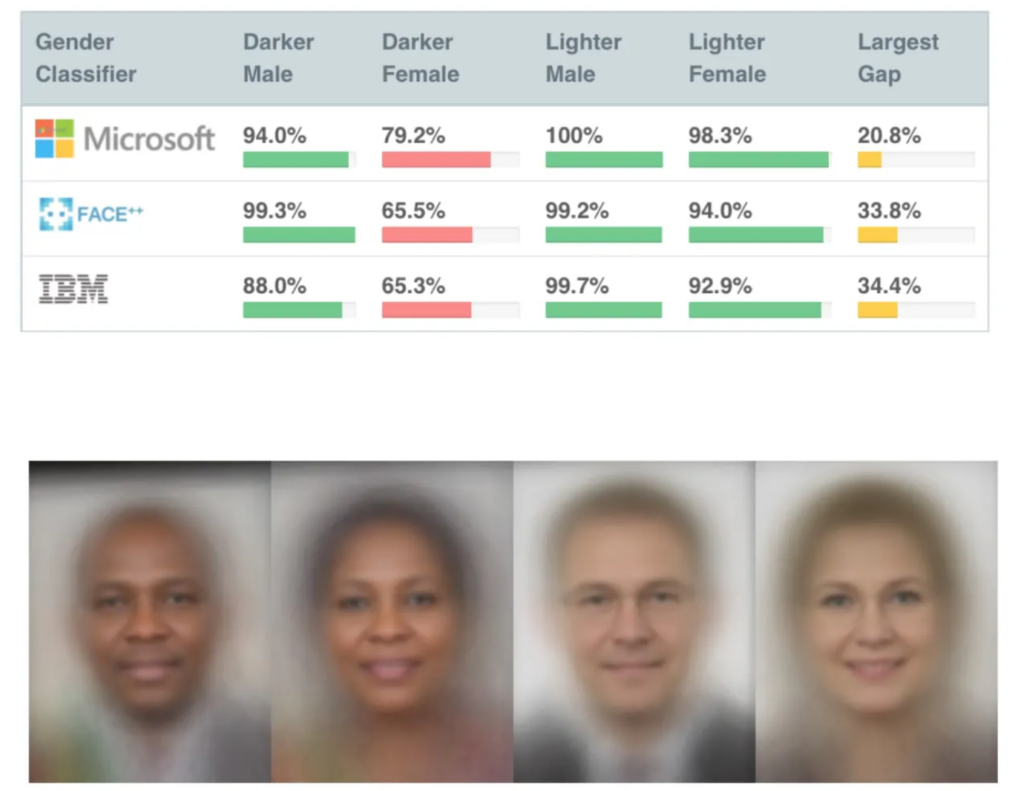

This wasn’t long after several highly influential studies on AI bias were published, including MIT’s Gender Shades study, co-authored by Dr. Gebru, which has become one of the most widely cited papers in the field.

The Gender Shades study uncovered bias in commercial facial analysis AIs, which misclassified the gender of darker-skinned women up to roughly 35% of the time, compared to under 1% for lighter-skinned men.
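Gaps like this only become visible when error rates are reported per group rather than as a single average. The following is a minimal sketch of that kind of disaggregated evaluation. The records, group labels, and the function name `error_rate_by_group` are hypothetical and do not reproduce the study's data or code.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the error rate per group.

    records: iterable of (group, predicted_label, actual_label) tuples.
    """
    totals, errors = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical classifier outputs: 20 samples per group.
RECORDS = (
    [("group_a", "f", "f")] * 19 + [("group_a", "m", "f")] * 1
    + [("group_b", "f", "f")] * 13 + [("group_b", "m", "f")] * 7
)

rates = error_rate_by_group(RECORDS)
overall = sum(pred != actual for _, pred, actual in RECORDS) / len(RECORDS)

print("overall error rate:", overall)
for group, rate in sorted(rates.items()):
    print(group, rate)
```

In this made-up data the overall error rate looks moderate, yet one group is misclassified seven times as often as the other. An aggregate accuracy figure alone would hide that.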

Incorrect facial recognition matches led to the wrongful arrest and imprisonment of several men in the US.

Gebru’s firing caused a media storm surrounding discriminatory practices in Silicon Valley.

Mutale Nkonde from the Stanford Digital Civil Society Lab said, “Her firing only indicates that scientists, activists, and scholars who want to work in this field — and are Black women — are not welcome in Silicon Valley.”

Dr. Gebru’s firing inspired another study, Unmasking Coded Bias, which found that black people were significantly more likely than anyone else to be underestimated by recruitment AI.

“This report found ample evidence suggesting that Black students and professionals are concerned about facing anti-Black bias during the hiring process. Just over half of all respondents report having observed bias in the hiring or recruiting process on hiring or recruiting websites. Black professionals are slightly more likely to have observed such bias with fifty-five percent of respondents indicating having observed bias in the hiring process,” – Unmasking Coded Bias, Penn Law Policy Lab.

The paper states, “The public conversation spurred by Dr. Gebru and her colleagues on algorithmic bias allowed our lab to engage in this national dialogue and expand a more nuanced understanding of algorithmic bias in hiring platforms.”

Exposing the problem has been a catalyst for change, but AI still has a long way to go before it can be trusted with sensitive human decision-making.

AI’s role in recruitment: salvaging some positives

Despite numerous controversies, it’s been tough for businesses to resist AI’s immense potential to accelerate recruitment.

AI’s role in recruitment certainly makes some logical sense – it might be easier to rid AI of bias than humans. After all, AI is *just* math and code – surely more malleable than deeply entrenched unconscious biases?

And while training data was heavily biased in the early-to-mid-2010s, primarily due to a lack of diverse datasets, this has arguably improved since.

One prominent AI recruitment tool is Sapia, dubbed a ‘smart interviewer.’ According to Sapia’s founder, Barb Hyman, AI allows for a ‘blind’ interview process that doesn’t rely on resumes, social media, or demographic data but solely on the applicant’s responses, thereby eliminating biases prevalent in human-led hiring.

These systems can provide a fair shot for everyone by interviewing all applicants. Hyman suggests, “You are twice as likely to get women and keep women in the hiring process when you’re using AI.”

But even the very process of interviewing someone with AI raises questions.

Natural language processing (NLP) models are generally trained primarily on text written by native English speakers, so they tend to handle non-native English poorly.

These systems might inadvertently penalize non-native English speakers or those with different cultural traits. Moreover, critics argue that disabilities might not be adequately accounted for in an AI chat or video interview, leading to further potential discrimination.

This is aggravated by the fact that applicants often don’t know whether an AI is assessing them, thus making it impossible to request necessary adjustments to the interview process.

Datasets are fundamental

Train an AI on decade-old data, and it learns decade-old values.

The labor market has become considerably more diverse since the turn of the millennium.

For example, in some countries, women outnumber men in several key medical disciplines, such as psychology, genetics, pediatrics, and immunology.

In the UK, a 2023 report found that 27% of women in employment worked in ‘professional occupations’ (such as doctors, engineers, nurses, accountants, teachers, and lawyers) compared to 26% of men, a share that has risen steadily over the past 5 to 10 years.

Such transitions have accelerated in the last 2 to 5 years, and many datasets are older and simply don’t reflect these recent shifts. Similar issues apply to race and disability as they do to gender.

Datasets must reflect our increasingly diverse workplaces to serve everyone fairly.

While there’s still tremendous work to be done to ensure diversity in the workplace, AI must inherit values from the now and not the past. That should be a bare minimum for producing fair and transparent recruitment AIs.

The developers of recruitment AI will likely face stiff regulation, with the US, UK, China, EU member states, and many other countries set to tighten AI controls over the coming years.

Research is ongoing, but it remains unlikely that most recruitment AIs are applying the fair and unbiased principles we expect of each other.