Google DeepMind released a suite of new tools to help robots learn autonomously, faster, and more efficiently in novel environments.

Training a robot to perform a specific task in a single environment is a relatively simple engineering problem. But if robots are going to be truly useful to us in the future, they’ll need to perform a range of general tasks and learn to do them in environments they haven’t experienced before.

Last year DeepMind released its RT-2 robotics control model and RT-X robotic datasets. RT-2 translates voice or text commands into robotic actions.

The new tools DeepMind announced build on RT-2 and move us closer to autonomous robots that explore different environments and learn new skills.

In the last two years, large foundation models have proven capable of perceiving and reasoning about the world around us unlocking a key possibility for scaling robotics.

We introduce a AutoRT, a framework for orchestrating robotic agents in the wild using foundation models! pic.twitter.com/x3YdO10kqq

— Keerthana Gopalakrishnan (@keerthanpg) January 4, 2024

AutoRT

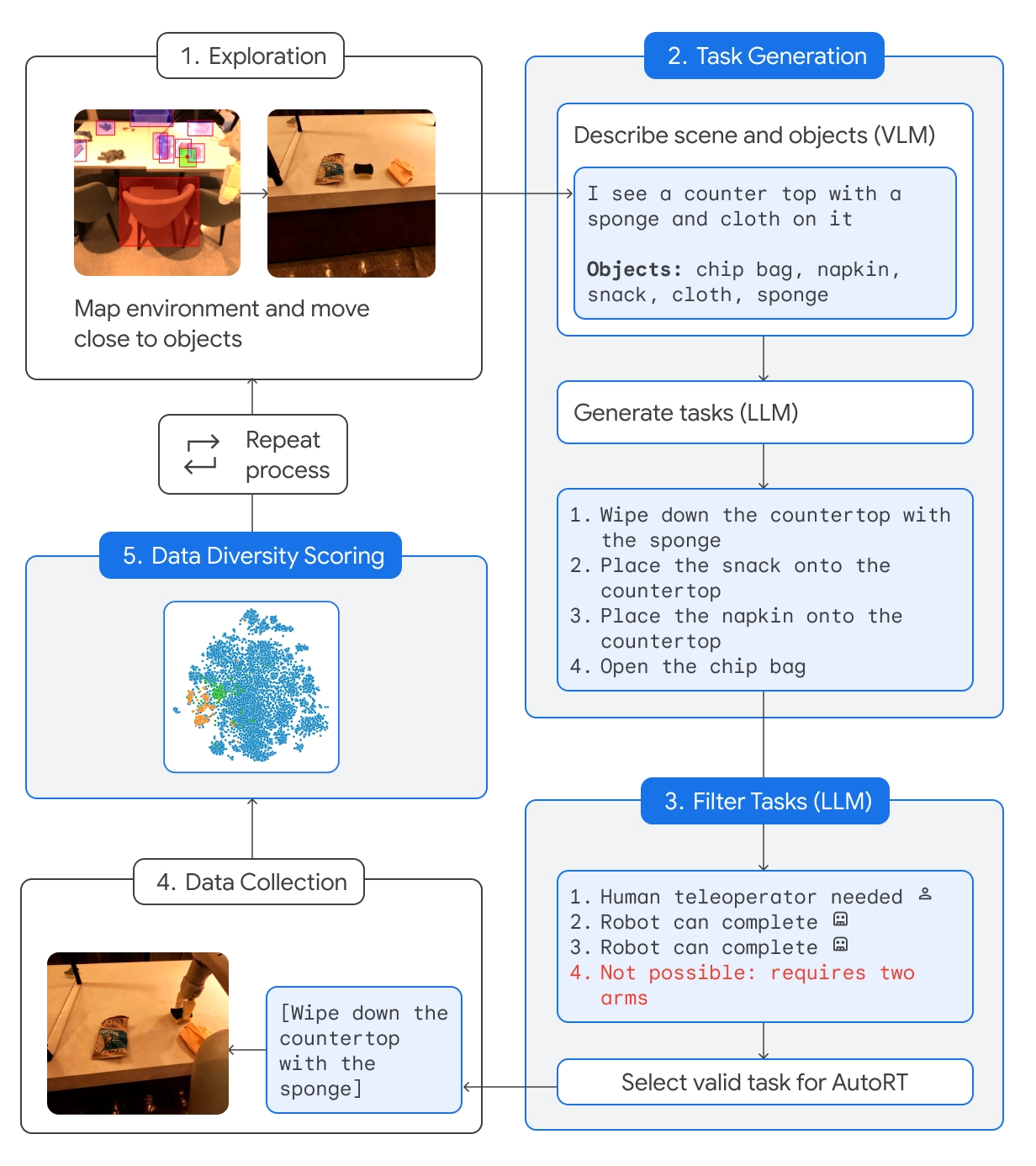

AutoRT combines a large language model (LLM) and a visual language model (VLM), both foundation models, with a robot control model such as RT-2.

The VLM enables the robot to assess the scene in front of it and pass the description to the LLM. The LLM evaluates the identified objects and the scene and then generates a list of potential tasks the robot could perform.

The tasks are evaluated based on their safety, the robot’s capabilities, and whether or not attempting the task would add new skills or diversity to the AutoRT knowledge base.
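The generate-then-filter loop described above can be pictured with a minimal Python sketch. It is not DeepMind's implementation: `describe_scene`, `propose_tasks` and `select_tasks` are hypothetical stand-ins, the VLM and LLM are replaced by hard-coded stubs, and the safety, capability and novelty checks use hand-labelled flags where AutoRT would ask an LLM to judge each task.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    # In AutoRT these judgements come from an LLM; here they are
    # hand-labelled flags (a hypothetical simplification).
    description: str
    involves_living_thing: bool = False
    involves_sharp_object: bool = False
    arms_required: int = 1


def describe_scene(camera_frame: str) -> list[str]:
    """Stand-in for the VLM: list the objects visible in the frame."""
    # A real VLM would caption an image; this stub hard-codes one scene.
    return ["sponge", "table", "knife", "plant", "bottle"]


def propose_tasks(objects: list[str]) -> list[Task]:
    """Stand-in for the LLM: suggest candidate tasks for the seen objects."""
    candidates = [
        Task("wipe the table with the sponge"),
        Task("pick up the knife", involves_sharp_object=True),
        Task("water the plant", involves_living_thing=True),
        Task("open the bottle", arms_required=2),
        Task("move the sponge to the left"),
    ]
    # Keep only tasks that mention at least one object the VLM reported.
    return [t for t in candidates if any(obj in t.description for obj in objects)]


def select_tasks(candidates: list[Task], seen: set[str], num_arms: int = 1) -> list[Task]:
    """Filter candidates on safety, robot capability and novelty."""
    selected = []
    for task in candidates:
        if task.involves_living_thing or task.involves_sharp_object:
            continue  # safety: rejected outright
        if task.arms_required > num_arms:
            continue  # capability: the robot lacks enough arms
        if task.description in seen:
            continue  # novelty: already in the collected dataset
        selected.append(task)
    return selected


collected = {"move the sponge to the left"}
tasks = select_tasks(propose_tasks(describe_scene("frame_0001")), collected)
print([t.description for t in tasks])
```

With the stub scene, only the table-wiping task survives: the knife and plant tasks fail the safety check, the bottle task needs two arms, and the sponge move has already been collected.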

DeepMind says that with AutoRT they “safely orchestrated as many as 20 robots simultaneously, and up to 52 unique robots in total, in a variety of office buildings, gathering a diverse dataset comprising 77,000 robotic trials across 6,650 unique tasks.”

Robotic constitution

Sending a robot into new environments means it will encounter potentially dangerous situations that can’t be specifically planned for. Using a robotic constitution as a prompting guide gives the robots generalized safety guardrails.

The robotic constitution is inspired in part by Isaac Asimov’s Three Laws of Robotics. Its rules include:

- A robot may not injure a human being.

- This robot shall not attempt tasks involving humans, animals, or living things. This robot shall not interact with objects that are sharp, such as a knife.

- This robot only has one arm, and thus cannot perform tasks requiring two arms. For example, it cannot open a bottle.

Following these guidelines keeps the robot from choosing any task on its list of options that might hurt someone or damage the robot itself or its surroundings.
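Because the constitution works as a prompting guide, one way to picture it is as text placed ahead of the request that asks the LLM to screen candidate tasks. The sketch below assumes that arrangement; `build_filter_prompt` and the prompt wording are hypothetical and are not the prompt DeepMind used. The rules are the ones listed above.

```python
CONSTITUTION = [
    "A robot may not injure a human being.",
    "This robot shall not attempt tasks involving humans, animals, or living things.",
    "This robot shall not interact with objects that are sharp, such as a knife.",
    "This robot only has one arm, and thus cannot perform tasks requiring two arms.",
]


def build_filter_prompt(rules: list[str], scene: str, tasks: list[str]) -> str:
    """Assemble a hypothetical prompt asking an LLM to screen candidate tasks.

    The rules come first so the model reads every task in light of them.
    """
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    task_lines = "\n".join(f"- {task}" for task in tasks)
    return (
        "You decide which tasks a robot may attempt.\n"
        f"Rules:\n{rule_lines}\n\n"
        f"Scene: {scene}\n"
        f"Candidate tasks:\n{task_lines}\n\n"
        "Label each task 'valid' or 'invalid' and give a one-line reason."
    )


prompt = build_filter_prompt(
    CONSTITUTION,
    scene="A desk with a sponge, a knife and a potted plant.",
    tasks=["wipe the desk with the sponge", "pick up the knife", "water the plant"],
)
print(prompt)
```

The LLM's valid/invalid labels would then decide which tasks reach the robot; the response format requested here is an illustrative choice.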

SARA-RT

Self-Adaptive Robust Attention for Robotics Transformers (SARA-RT) takes models like RT-2 and makes them more efficient.

The neural network architecture of RT-2 relies on attention modules of quadratic complexity. This means that if you double the input, by adding a new sensor or increasing the camera resolution, you need four times the computational resources.
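A back-of-the-envelope multiply count makes this scaling concrete. The sketch counts only the two core attention products (scores and weighted sum) for standard softmax attention and, for comparison, for the reordered product that linear attention uses. Projections, multiple heads and constant factors are ignored, and the token count and model width are arbitrary illustrative values.

```python
def attention_multiplies(n_tokens: int, d_model: int) -> dict[str, int]:
    """Rough multiply counts for the core attention products (illustrative only)."""
    # Softmax attention: Q @ K^T costs n*n*d, then weights @ V costs another n*n*d.
    quadratic = 2 * n_tokens * n_tokens * d_model
    # Linear attention: phi(K)^T @ V costs n*d*d, then phi(Q) @ that costs another n*d*d.
    linear = 2 * n_tokens * d_model * d_model
    return {"quadratic": quadratic, "linear": linear}


base = attention_multiplies(n_tokens=256, d_model=64)
doubled = attention_multiplies(n_tokens=512, d_model=64)  # e.g. tokens from a second camera
for kind in ("quadratic", "linear"):
    print(kind, doubled[kind] / base[kind])
```

Doubling the number of tokens multiplies the quadratic count by 4.0 and the linear count by 2.0.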

SARA-RT fine-tunes the robotic model with a method DeepMind calls “up-training”, which converts its quadratic attention into linear attention. The resulting models were 14% faster and 10% more accurate than RT-2.
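The core idea of linear attention fits in a few lines. The sketch below is a generic kernelised linear attention using the feature map elu(x) + 1; it is an assumption chosen for illustration, not SARA-RT's actual attention mechanism or its up-training procedure. Both functions compute the same output, but the linear one summarises the keys and values once instead of comparing every query with every key, so its cost grows with n rather than n squared.

```python
import math


def feature_map(x: list[float]) -> list[float]:
    # elu(x) + 1 keeps every feature positive: x + 1 for x > 0, exp(x) otherwise.
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def attention_pairwise(Q, K, V):
    """Kernelised attention, comparing every query with every key: O(n^2 * d)."""
    phi_k = [feature_map(k) for k in K]
    out = []
    for q in Q:
        phi_q = feature_map(q)
        weights = [sum(a * b for a, b in zip(phi_q, pk)) for pk in phi_k]  # n weights
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / total for c in range(len(V[0]))])
    return out


def attention_linear(Q, K, V):
    """Same output, but keys and values are summarised once first: O(n * d^2)."""
    phi_k = [feature_map(k) for k in K]
    d_k, d_v = len(phi_k[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # S = sum_j phi(k_j) v_j^T
    z = [0.0] * d_k                        # z = sum_j phi(k_j)
    for pk, v in zip(phi_k, V):
        for a in range(d_k):
            z[a] += pk[a]
            for c in range(d_v):
                S[a][c] += pk[a] * v[c]
    out = []
    for q in Q:
        phi_q = feature_map(q)
        denom = sum(phi_q[a] * z[a] for a in range(d_k))
        out.append([sum(phi_q[a] * S[a][c] for a in range(d_k)) / denom for c in range(d_v)])
    return out


Q = [[0.1, -0.2], [0.3, 0.0], [-0.5, 0.4]]
K = [[0.2, 0.1], [-0.1, 0.3], [0.0, -0.4]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(attention_pairwise(Q, K, V))
print(attention_linear(Q, K, V))
```

The two printed results agree up to floating-point rounding. Kernelised attention of this kind only approximates softmax attention, which is why a model converted to it is typically fine-tuned afterwards.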

RT-Trajectory

Converting a simple task like wiping a table into instructions that a robot can follow is complicated. The instruction has to be translated from natural language into a coded sequence of motor movements and rotations that drive the robot’s moving parts.

RT-Trajectory overlays each training video with a 2D sketch of the trajectory the robot arm’s gripper follows, so that the robot can learn intuitively what kind of motion is required to accomplish the task.
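As a rough illustration of what such an overlay involves, the sketch below rasterises a gripper path onto a frame using Bresenham's line algorithm. It is simplified and hypothetical: a blank grid stands in for the video frame, the gripper positions are assumed to be already projected into pixel coordinates, and each segment is stamped with its step number so later motion can be told apart from earlier motion. `overlay_trajectory` is an illustrative name, not part of any DeepMind release.

```python
def bresenham(x0: int, y0: int, x1: int, y1: int) -> list[tuple[int, int]]:
    """Integer pixels on the line from (x0, y0) to (x1, y1), endpoints included."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy
    return points


def overlay_trajectory(height: int, width: int, waypoints: list[tuple[int, int]]) -> list[list[int]]:
    """Return a height x width grid with the trajectory drawn on it.

    0 marks background; segment k of the path is stamped with value k, so a
    later segment overwrites the shared endpoint of the previous one.
    """
    grid = [[0] * width for _ in range(height)]
    for step, ((x0, y0), (x1, y1)) in enumerate(zip(waypoints, waypoints[1:]), start=1):
        for x, y in bresenham(x0, y0, x1, y1):
            if 0 <= x < width and 0 <= y < height:  # clip to the frame
                grid[y][x] = step
    return grid


# A back-and-forth wiping path on a 5 x 10 frame (pixel coordinates are made up).
wipe_path = [(1, 1), (8, 1), (8, 3), (1, 3)]
frame = overlay_trajectory(height=5, width=10, waypoints=wipe_path)
for row in frame:
    print("".join(str(v) if v else "." for v in row))
```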

So instead of just telling the robot to “clean the table”, the demonstration and motion overlay give it a better chance of learning the new skill quickly.

DeepMind says that, when tested on 41 tasks unseen in the training data, an arm controlled by RT-Trajectory “achieved a task success rate of 63%, compared with 29% for RT-2.”

🔵 It can also create trajectories by watching human demonstrations, understanding sketches and even VLM-generated drawings.

When tested on 41 tasks unseen in the training data, an arm controlled by RT-Trajectory achieved a 63% success rate. https://t.co/rqOnzDDMDI pic.twitter.com/bdhi9W5TWi

— Google DeepMind (@GoogleDeepMind) January 4, 2024

DeepMind is making these models and datasets available to other developers, so it will be interesting to see how these new tools speed up the integration of AI-powered robots into everyday life.