Researchers from DeepMind and Stanford University developed an AI agent that fact-checks LLMs and enables benchmarking of AI model factuality.

Even the best AI models still tend to hallucinate at times. If you ask ChatGPT to give you the facts about a topic, the longer its response the more likely it is to include some facts that aren’t true.

Which models are more factually accurate than others when generating longer answers? It’s hard to say because until now, we didn’t have a benchmark measuring the factuality of LLM long-form responses.

DeepMind first used GPT-4 to create LongFact, a set of 2,280 prompts in the form of questions related to 38 topics. These prompts elicit long-form responses from the LLM being tested.

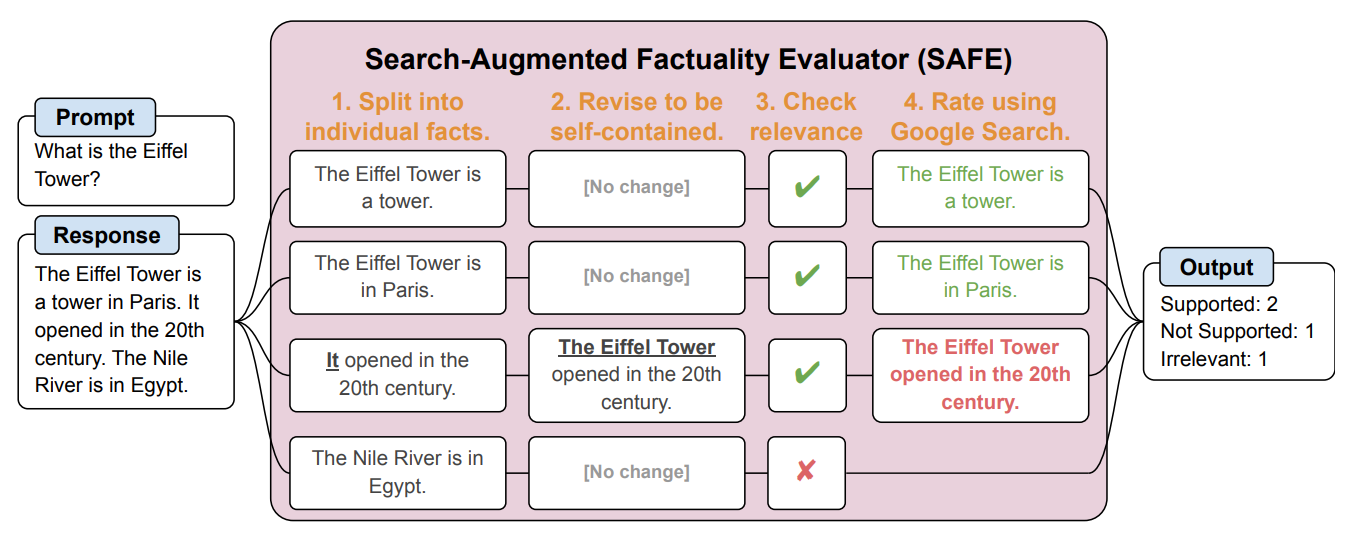

They then created an AI agent using GPT-3.5-turbo to use Google to verify how factual the responses the LLM generated were. They called the method Search-Augmented Factuality Evaluator (SAFE).

SAFE first breaks the long-form response from the LLM up into individual facts. It then sends search requests to Google Search and reasons on the truthfulness of the fact based on information in the returned search results.

Here’s an example from the research paper.

The researchers say that SAFE achieves “superhuman performance” compared to human annotators doing the fact-checking.

SAFE agreed with 72% of human annotations, and where it differed with the humans it was found to be right 76% of the time. It was also 20 times cheaper than crowdsourced human annotators. So, LLMs are better and cheaper fact-checkers than humans are.

The quality of the response from the tested LLMs was measured based on the number of factoids in its response combined with how factual the individual factoids were.

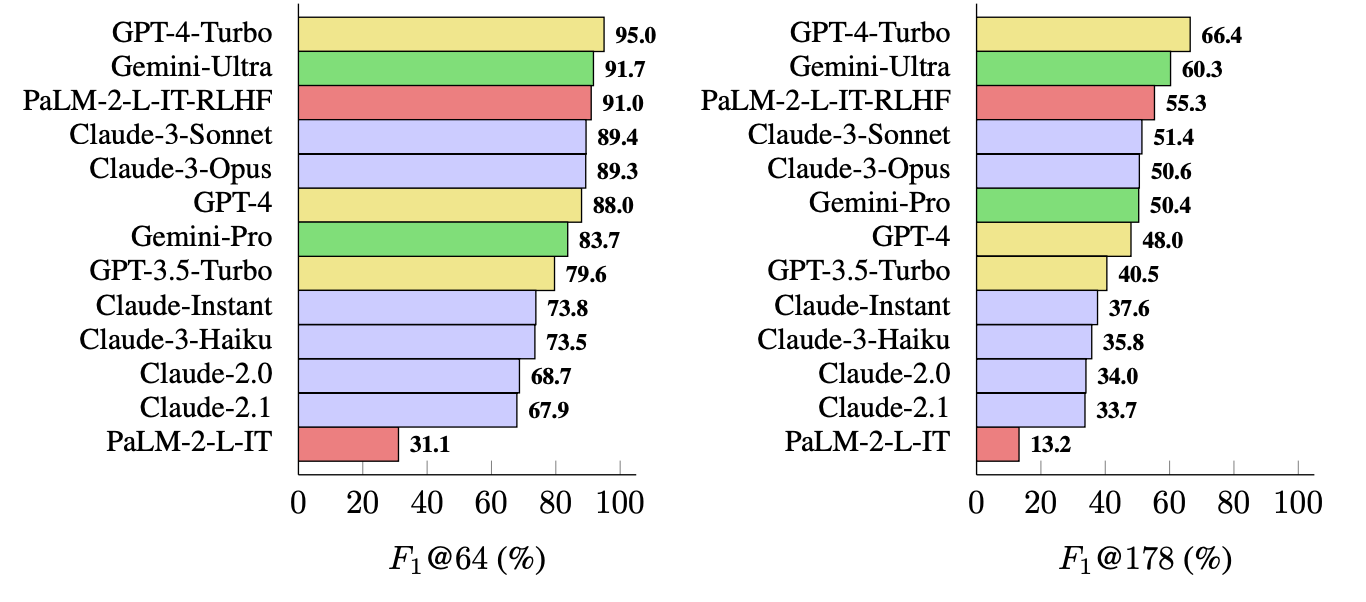

The metric they used (F1@K) estimates the human-preferred “ideal” number of facts in a response. The benchmark tests used 64 as the median for K and 178 as the maximum.

Simply put, F1@K is a measure of ‘Did the response give me as many facts as I wanted?’ combined with ‘How many of those facts were true?’

Which LLM is most factual?

The researchers used LongFact to prompt 13 LLMs from the Gemini, GPT, Claude, and PaLM-2 families. It then used SAFE to evaluate the factualness of their responses.

GPT-4-Turbo tops the list as the most factual model when generating long-form responses. It was closely followed by Gemini-Ultra and PaLM-2-L-IT-RLHF. The results showed that larger LLMs are more factual than smaller ones.

The F1@K calculation would probably excite data scientists, but, for the sake of simplicity, these benchmark results show how factual each model is when returning average length and longer responses to the questions.

SAFE is a cheap and effective way to quantify LLM long-form factuality. It’s faster and cheaper than humans at fact-checking but it still depends on the veracity of the information that Google returns in the search results.

DeepMind released SAFE for public use and suggested it could help improve LLM factuality via better pretraining and finetuning. It could also enable an LLM to check its facts before presenting the output to a user.

OpenAI will be happy to see that research from Google shows GPT-4 beats Gemini in yet another benchmark.