Researchers at the University of California, Berkeley, developed an AI forecasting system that predicts future events with accuracy approaching that of aggregated human forecasts.

As LLMs are not purpose-built for event forecasting, the team built a forecasting system on top of GPT-4 using a novel approach called retrieval-augmented reasoning.

This multi-step process has GPT-4 search for pertinent information, assess its relevance, and integrate it into its reasoning before making a prediction.

Here’s how it works:

- Retrieval: The AI system uses GPT-4 to generate search queries based on the forecasting question and sub-questions, retrieving a broad set of potentially relevant news articles.

- Relevance evaluation: GPT-4 evaluates the relevance of each retrieved article, discarding low-scoring articles to narrow down the information pool.

- Summarization: GPT-4 distills each article down to its key points, focusing on details related to the forecasting question.

- Reasoning: Using “scratchpad prompts,” GPT-4 analyzes the summarized articles and produces a detailed forecast with an explanatory rationale. These prompts guide the model’s thought process, encouraging a systematic approach to reasoning.
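The four steps above can be sketched as a simple pipeline. This is a minimal illustration, not the researchers' code: the function names, the `Article` type, and the `min_relevance` cutoff are all assumptions, and each GPT-4 call is represented by a callable that you would supply yourself.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Article:
    title: str
    text: str


def forecast(
    question: str,
    generate_queries: Callable[[str], List[str]],        # hypothetical LLM call
    search_news: Callable[[str], List[Article]],         # hypothetical news search
    score_relevance: Callable[[str, Article], float],    # hypothetical LLM call
    summarize: Callable[[str, Article], str],            # hypothetical LLM call
    reason: Callable[[str, List[str]], Tuple[float, str]],  # hypothetical LLM call
    min_relevance: float = 0.5,  # assumed cutoff, not taken from the study
) -> Tuple[float, str]:
    """Return (probability, rationale) for a yes/no forecasting question."""
    # 1. Retrieval: turn the question into search queries and collect articles.
    articles: List[Article] = []
    for query in generate_queries(question):
        articles.extend(search_news(query))

    # 2. Relevance evaluation: discard low-scoring articles.
    kept = [a for a in articles if score_relevance(question, a) >= min_relevance]

    # 3. Summarization: condense each remaining article around the question.
    summaries = [summarize(question, a) for a in kept]

    # 4. Reasoning: produce a probability and an explanatory rationale.
    probability, rationale = reason(question, summaries)

    # Clamp to a valid probability in case the model returns something odd.
    return min(max(probability, 0.0), 1.0), rationale
```

Passing the model calls in as parameters keeps the sketch runnable with simple stand-in functions, which is also a convenient way to test the control flow without calling a real model.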

The Berkeley team then took the system a step further with self-supervised fine-tuning.

They generated a large number of AI forecasts on past questions with known answers and selected examples where the AI had outperformed the “wisdom of the crowd” – defined as the aggregated predictions of human forecasters.

By fine-tuning GPT-4 on these examples, the researchers taught the model to emulate reasoning patterns that created the best forecasts.
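The selection step can be illustrated as a filter over past forecasts. The sketch below assumes each record holds the model's probability, the crowd's probability, and the known outcome, and it keeps only the cases where the model's squared error was lower than the crowd's. The `PastForecast` type and its field names are hypothetical, and the study's actual selection criteria may differ in detail.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PastForecast:
    question: str
    rationale: str     # the model's written reasoning, reused as training text
    model_prob: float  # model's probability that the event happens
    crowd_prob: float  # aggregated human probability
    outcome: int       # 1 if the event happened, 0 otherwise


def squared_error(prob: float, outcome: int) -> float:
    """Per-question Brier score for a binary outcome."""
    return (prob - outcome) ** 2


def select_for_fine_tuning(forecasts: List[PastForecast]) -> List[PastForecast]:
    """Keep forecasts where the model was strictly closer to the truth than the crowd."""
    return [
        f
        for f in forecasts
        if squared_error(f.model_prob, f.outcome) < squared_error(f.crowd_prob, f.outcome)
    ]
```

The rationales of the selected records would then serve as fine-tuning examples, so the model learns from its own best reasoning rather than from human-written answers.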

Results

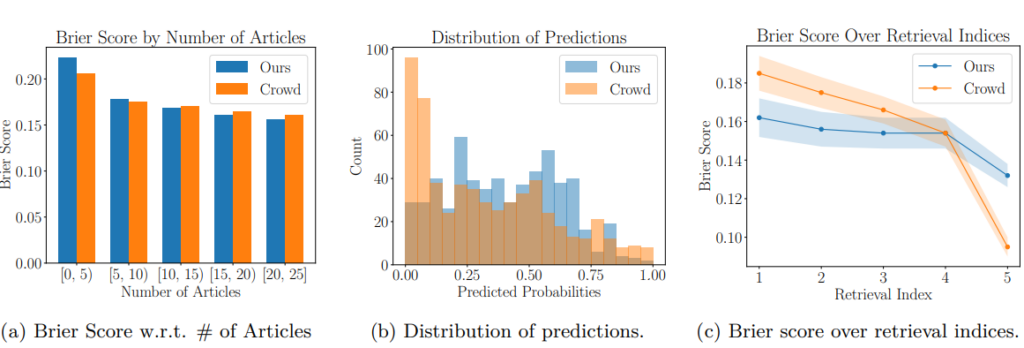

When tested on forecasting questions from June 2023 onward, the AI achieved a Brier score of 0.179, compared to 0.149 for the human crowd (lower scores are better).
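For context, the Brier score for yes/no questions is commonly computed as the mean squared difference between the predicted probability and the outcome (1 if the event happened, 0 if not). A perfect forecaster scores 0, and always answering 50% scores 0.25. The sketch below assumes this binary form; the function name is illustrative.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between predicted probabilities and binary outcomes."""
    if len(probs) != len(outcomes) or len(probs) == 0:
        raise ValueError("probs and outcomes must be non-empty and the same length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


# Always guessing 50% gives 0.25, whatever the outcomes are.
print(brier_score([0.5, 0.5], [1, 0]))  # 0.25
```

On this scale, the gap between 0.179 and 0.149 is small relative to the 0.25 baseline of an uninformed guess.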

The AI performed particularly well on questions with high human uncertainty early in the forecasting process and when it had access to sufficient relevant articles on a particular topic.

Figure, panel (b): When human forecasters are unsure (crowd predictions between 0.3 and 0.7), the system does better, with a Brier score of 0.199 compared to the crowd’s 0.246. However, when forecasters are very sure (predictions under 0.05), they do better than the system.

Panel (c): The system’s accuracy is higher at the beginning of information gathering. Source: arXiv (open access).

The authors write in the study, “To our knowledge, this is the first automated system with forecasting capability that nears the human crowd level, which is generally stronger than individual human forecasters.”

There was one quirk: the system fell behind the crowd on questions where human forecasters were highly certain. This might be because the model ‘hedges’ its predictions instead of committing to probabilities close to 0 or 1.

Researchers describe it as follows: “We hypothesize that this stems from our model’s tendency to hedge predictions due to its safety training.”
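A small worked example shows why hedging costs accuracy on near-certain questions. The numbers are purely illustrative and not taken from the study: suppose an event truly has a 2% chance of occurring, the crowd forecasts 0.02, and a hedging model forecasts 0.10.

```python
def expected_brier(forecast: float, true_prob: float) -> float:
    """Expected squared error of `forecast` when the event occurs with probability `true_prob`."""
    return true_prob * (1.0 - forecast) ** 2 + (1.0 - true_prob) * forecast ** 2


true_prob = 0.02  # illustrative value, not from the study
confident = expected_brier(0.02, true_prob)  # forecast that matches the true chance
hedged = expected_brier(0.10, true_prob)     # forecast pulled toward the middle

print(f"confident: {confident:.4f}, hedged: {hedged:.4f}")
# confident: 0.0196, hedged: 0.0260
```

The hedged forecast has the higher (worse) expected score, which is consistent with the crowd pulling ahead on questions where it is very sure.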

Implications

According to researchers, policymakers, businesses, and public health officials could all benefit from this form of language-driven AI forecasting.

“In the future, political decision-makers may consult the AIs on what actions would most likely bring about desired outcomes,” states Dan Hendrycks from the Center for AI Safety in California.

He proposes that prediction-making models could help tackle forthcoming hazards posed by AI. “Forecasting bots would aid us in anticipating and avoiding these risks,” Hendrycks told New Scientist.

Other attempts have been made to predict complex life events with AI, including a model trained by Danish researchers to predict the risks of premature death.

Harnessing AI for predictive applications that affect people’s lives raises ethical questions, such as how to ensure these systems are transparent, unbiased, and accountable.

This new Berkeley study outlines how AI can make effective forecasts, but we can’t gauge precisely how it arrives at its decisions.

The use of AI to predict major societal and individual events may seem like a dystopian concept, but it’s already a widespread practice in many parts of the world.

In several democratic countries, including the US, UK, Brazil, Australia, and the Netherlands, AI is used for policing, surveillance, and welfare decision-making.

Might an AI be predicting aspects of your future right now? It’s certainly possible.