Training AI models like GPT-4 has relied mostly on datasets consisting of text and images. Meta’s Ego-Exo4D multimodal perception dataset presents data scientists with a rich new set of training data.

You can learn a new skill by reading a book, but it's much easier when someone shows you how to do it while explaining each step. That is the idea behind Ego-Exo4D, from Meta's FAIR (Fundamental Artificial Intelligence Research) team.

The dataset consists of first-person (Ego) and third-person (Exo) perspective videos of people performing a variety of skilled activities, ranging from cooking and dancing to playing music and repairing a bicycle. The data was collected across 13 cities worldwide by 839 camera wearers, capturing 1,422 hours of video.

The Ego and Exo videos are filmed simultaneously and then augmented with additional data modalities captured by Meta's Project Aria glasses.

Project Aria glasses are wearable computers in the form of glasses. They capture the wearer's video and audio, along with eye-tracking and location data. The glasses also sense head pose and record 3D point clouds of the surrounding environment.

The result is a dataset of simultaneous videos of a task being performed, with first-person narrations by the camera wearers describing their actions, and head and eye tracking of the person performing the task.

> Introducing Ego-Exo4D: a foundational dataset and benchmark suite focused on skilled human activities to support research on video learning and multimodal perception. It's the largest ever public dataset of its kind.
>
> More details: https://t.co/82OR4msehv
>
> AI at Meta (@AIatMeta), December 4, 2023

Meta then added third-person, play-by-play descriptions of each camera wearer's actions. It also hired experts in multiple fields to record spoken commentary critiquing how the person in the video performed the task.

By collecting both egocentric and exocentric views, the Ego-Exo4D dataset can show researchers what activities look like from different perspectives. This could help them eventually develop computer vision algorithms that can recognize what a person is doing from any perspective.

Ego-Exo4D opens new learning opportunities

One of the key obstacles to achieving artificial general intelligence (AGI), or to training robots more efficiently, is that computers lack sensory perception. Humans draw on a wealth of sensory input from their environment when learning new skills, much of which we take for granted.

Ego-Exo4D could be an extremely useful resource for bridging this gap.

Dr. Gedas Bertasius, Assistant Professor in the Department of Computer Science at the University of North Carolina, said, "Ego-Exo4D isn't just about collecting data, it's about changing how AI understands, perceives, and learns. With human-centric learning and perspective, AI can become more helpful in our daily lives, assisting us in ways we've only imagined."

Meta says it hopes Ego-Exo4D will “enable robots of the future that gain insight about complex dexterous manipulations by watching skilled human experts in action.”

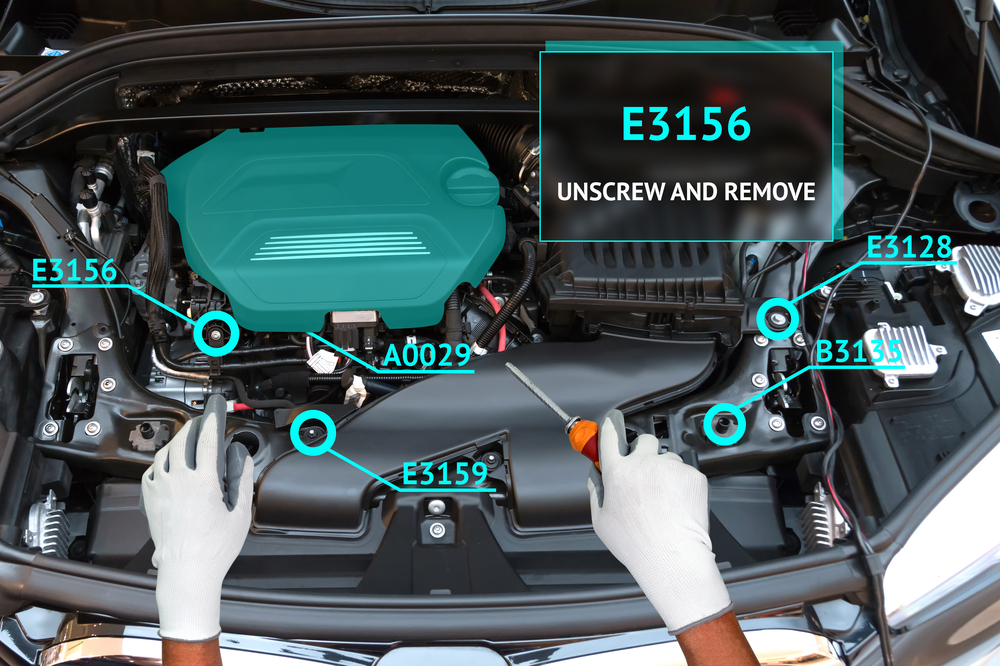

Combined with Project Aria glasses, this dataset could soon enable truly immersive learning experiences for humans as well. Imagine performing a task while your glasses use augmented reality (AR) to overlay a tutorial video or talk you through each step.

You could be learning to play the piano, with a visual overlay showing where your hands should move and real-time audio advice as you play. Or you could pop the hood of your car and be guided through troubleshooting and fixing an engine problem.

It will be interesting to see whether Meta's Ego How-To learning concept drives better adoption of Project Aria glasses than the ill-fated Google Glass achieved. There's no word yet on when the glasses will be available to purchase, though.

Meta will make the Ego-Exo4D dataset available for download before the end of December.