Elon Musk announced the beta launch of xAI’s chatbot, Grok, and initial stats give us an idea of how it stacks up against other models.

The Grok chatbot is built on Grok-1, a frontier model that xAI says it developed over the last four months. xAI hasn’t said how many parameters Grok-1 has, but it did share some figures for its predecessor.

Grok-0, the prototype for the current model, has 33 billion parameters, so we can probably assume that Grok-1 has at least as many.

That doesn’t sound like a lot, but xAI claims that Grok-0’s performance “approaches LLaMA 2 (70B) capabilities on standard LM benchmarks” even though it used only half the training resources.
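The “half the training resources” figure is roughly what a common rule of thumb would predict. Training compute is often approximated as C ≈ 6ND, where N is the parameter count and D is the number of training tokens. Assuming, purely for illustration, that Grok-0 and LLaMA 2 (70B) saw the same number of tokens (xAI hasn’t published Grok-0’s token count), the ratio works out as follows:

$$
\frac{C_{\text{Grok-0}}}{C_{\text{LLaMA 2 (70B)}}} \approx \frac{6 \cdot (33 \times 10^{9}) \cdot D}{6 \cdot (70 \times 10^{9}) \cdot D} = \frac{33}{70} \approx 0.47
$$

This is only a back-of-the-envelope check; xAI didn’t say which resources it was counting.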

In the absence of a parameter figure, we have to take the company at its word when it describes Grok-1 as “state-of-the-art” and “significantly more powerful” than Grok-0.

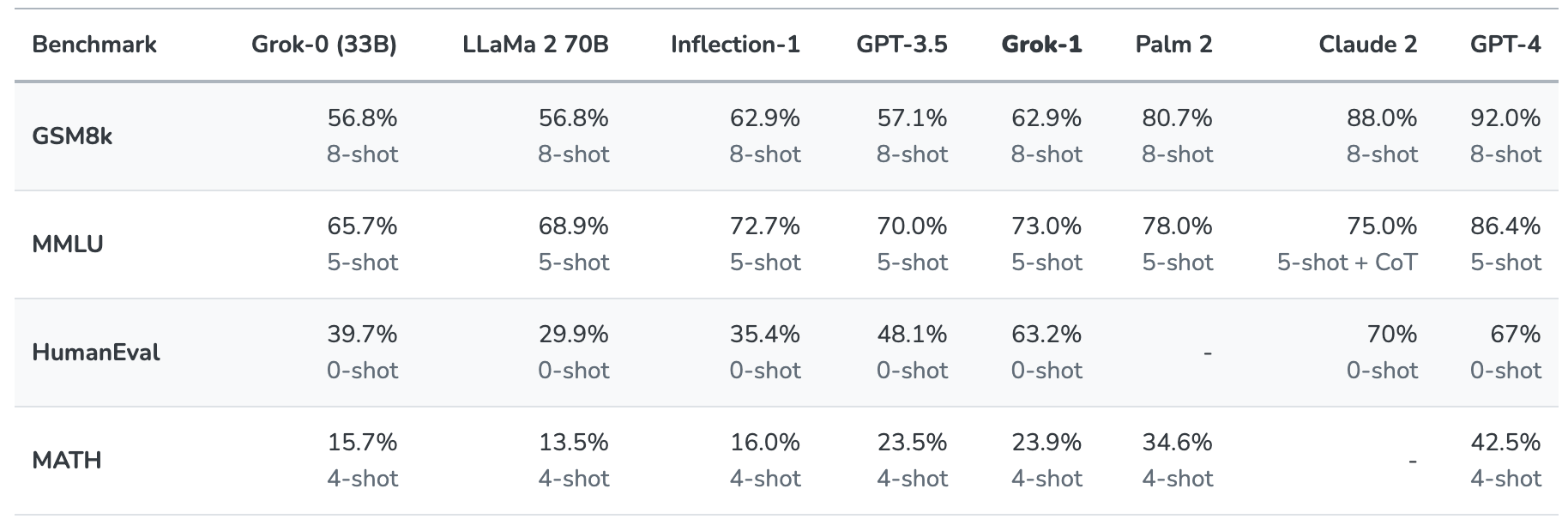

xAI put Grok-1 through its paces on these standard machine-learning benchmarks:

- GSM8k: Middle school math word problems

- MMLU: Multidisciplinary multiple-choice questions

- HumanEval: Python code completion task

- MATH: Middle school and high school mathematics problems written in LaTeX

Here’s a summary of the results.

The results are interesting in that they give us at least some idea of how Grok compares with other frontier models.

xAI says that these figures show that Grok-1 beats “all other models in its compute class” and is only surpassed by models trained with a “significantly larger amount of training data and compute resources.”

GPT-3.5 is widely reported to have 175 billion parameters (OpenAI hasn’t confirmed the figure), so we can assume that Grok-1 has fewer than that, but likely more than the 33 billion of its prototype.

The Grok chatbot is intended for tasks like question answering, information retrieval, creative writing, and coding assistance. Because of its smaller context window, it’s better suited to shorter interactions than to use cases that rely on very long prompts.

With a context length of 8,192 tokens, Grok-1 has only about half the context that GPT-3.5 has. This suggests that xAI deliberately traded a longer context for better efficiency.
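To make the “shorter interactions” point concrete, here is a minimal Python sketch of how a chat client might trim conversation history to fit an 8,192-token window, dropping the oldest messages first. The names `fit_history` and `approx_tokens` are hypothetical, and counting one token per whitespace-separated word is a simplifying assumption; real tokenizers split text differently, and nothing here reflects how Grok itself manages context.

```python
# Hypothetical sketch: keep only the most recent chat messages that fit
# in an 8,192-token context window. Token counts are approximated by
# whitespace-separated words (an assumption; real tokenizers differ).

CONTEXT_WINDOW = 8_192  # Grok-1's reported context length, in tokens


def approx_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: one token per word."""
    return len(text.split())


def fit_history(messages: list[str], budget: int = CONTEXT_WINDOW) -> list[str]:
    """Return the newest messages whose combined token count fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message and stop once the budget is exhausted.
    for message in reversed(messages):
        cost = approx_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    # Restore chronological order before returning.
    kept.reverse()
    return kept


if __name__ == "__main__":
    # Three 3,000-word messages total 9,000 "tokens", more than the window holds.
    history = ["word " * 3000, "word " * 3000, "word " * 3000]
    trimmed = fit_history(history)
    print(len(trimmed))  # 2: the oldest message no longer fits
```

With a window twice as large, all three messages in the example would fit; with a smaller one, a long conversation loses its earliest turns sooner.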

The company says that some of its current research is focused on “long-context understanding and retrieval” so the next iteration of Grok may well have a larger context window.

The exact dataset used to train Grok-1 isn’t clear, but it almost certainly included your tweets on X, and the Grok chatbot has real-time access to the internet too.

We’ll have to wait for more feedback from beta testers to get a real-world feel for how good the model actually is.

Will Grok help us unravel the mysteries of life, the universe, and everything? Perhaps not quite yet, but it’s an entertaining start.