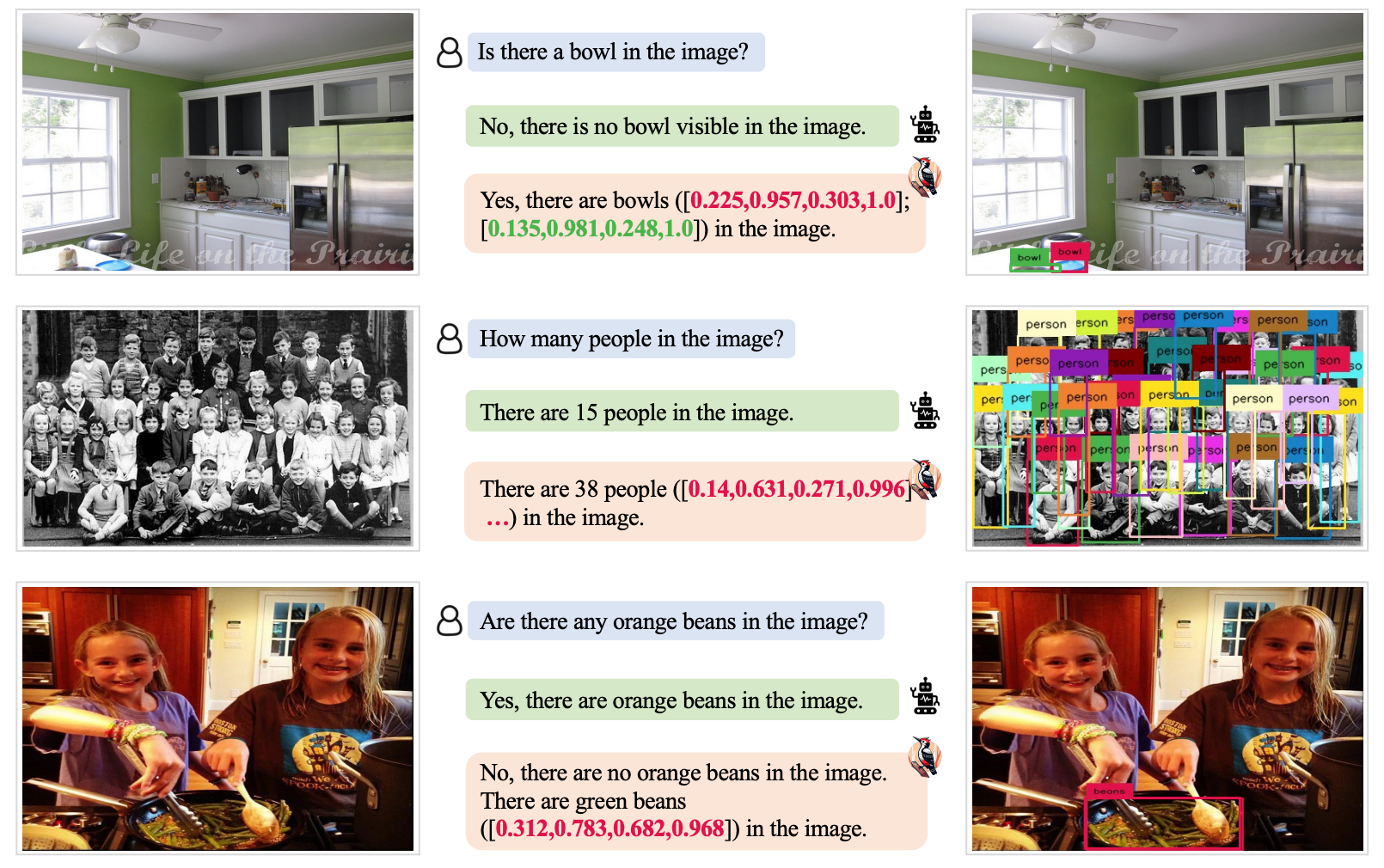

Multimodal Large Language Models (MLLM) like GPT-4V are really good at analyzing and describing images but sometimes they hallucinate and get things wrong. A new approach called Woodpecker could fix that.

If you ask an MLLM to describe a photo it can normally pick out the objects and accurately describe the scene. But as with answers to text prompts, the model sometimes makes assumptions based on items or concepts that often appear together.

As a result, an MLLM could describe a photo of a shopfront scene and say there are people in the scene when there actually aren’t any.

Fixing hallucinations in text-based LLMs is ongoing but gets a lot easier when the model is connected to the internet. The LLM can generate a text response to a prompt, check it for veracity based on relevant internet data, and self-correct where necessary.

Scientists from Tencent’s YouTu Lab and the University of Science and Technology of China took this approach and translated it into a visual solution called Woodpecker.

In simple terms, Woodpecker builds a body of knowledge from the image and then an LLM can use that as a reference to correct the initial description generated by the MLLM.

Here’s a brief description of how it works:

- An LLM like GPT-3.5 Turbo analyzes the description generated by the MLLM and extracts key concepts like objects, quantities, and attributes. For example, in the sentence “The man is wearing a black hat.”, the objects “man” and “hat” are extracted.

- An LLM is then prompted to generate questions related to these concepts like “Is there a man in the image?” or “What is the man wearing?”.

- These questions are fed as prompts to a Visual Question Answering (VQA) model. Grounding DINO performs object detection and counting while the BLIP-2-FlanT5 VQA answers attribute-related questions after analyzing the image.

- An LLM combines the answers to the questions into a visual knowledge base for the image.

- An LLM uses this reference body of knowledge to correct any hallucinations in the original MLLM’s description and adds details it missed.

The researchers named their approach Woodpecker in reference to how the bird picks bugs out of trees.

Test results showed that Woodpecker achieved an accuracy improvement of 30.66% for MiniGPT4 and 24.33% for the mPLUG-Owl models.

The generic nature of the models required in this approach means that the Woodpecker approach could easily be integrated into various MLLMs.

If OpenAI integrates Woodpecker into ChatGPT then we could see a marked improvement in the already impressive visual performance. A reduction in MLLM hallucination could also improve automated decision-making by systems that use visual descriptions as inputs.