A study by UCLA psychologists has found that GPT-3 is about as good as college undergraduates at solving analogical reasoning problems.

We know that LLMs like GPT-3 are good at generating responses based on the data they've been trained on, but their ability to reason is less certain. Analogical reasoning is our ability to take what we've learned from an unrelated experience and apply it to a problem we haven't faced before.

That's the ability you rely on when you have to answer a question you've never seen before: you reason through it by drawing on problems you've solved in the past. Judging by this research, GPT-3 seems to have developed that ability too.

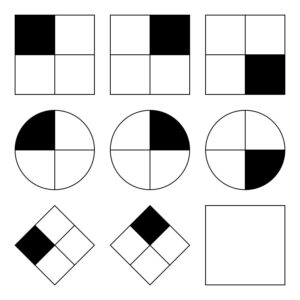

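The UCLA researchers put GPT-3 to work on a set of problems modeled on Raven's Progressive Matrices, a test that asks you to predict the missing image in a pattern of shapes. Because GPT-3 only works with text, the images were converted into a text format it could read. The image above is an easy one for you to try.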

GPT-3 more than held its own against the 40 UCLA undergraduates who took the same test. The AI answered about 80% of the problems correctly, while the students averaged around 60%. The top-scoring students performed about as well as GPT-3.

UCLA psychology professor Hongjing Lu, the study's senior author, said: “Surprisingly, not only did GPT-3 do about as well as humans but it made similar mistakes as well.”

The researchers also asked GPT-3 to solve word analogy problems. For example: ‘Car’ is to ‘Road’ as ‘Boat’ is to which word? The answer is obviously ‘water’, but questions like this can be tricky for an AI.

Well, at least the researchers thought they might be tricky. On a set of SAT analogy questions, GPT-3 actually scored higher than the average college applicant.

GPT-3 struggles with problems that are easy for humans

Where GPT-3 does struggle is with problems that require an understanding of physical space. If you gave it a list of objects like a hammer, a nail, and a picture, it can fail to come up with the obvious solution of hanging the picture on the wall.

That kind of problem is easy for humans because we can see, hold, and feel physical objects in the space around us. Those experiences help our brains learn and solve problems in a way that text-based AI models can't. That said, GPT-4 appears to be getting better at this kind of reasoning.

While the researchers could measure GPT-3's performance, they had no way of seeing the “thinking” process it uses to reach its answers. Does it reason the way humans do, or does it do something completely different? Because GPT-3 is a closed model, it isn't possible to look under the hood and see what's happening.

The surprising finding of this research is that GPT-3 seems able to solve novel problems it was never directly trained on, much like humans do when they face something new. GPT-4 is expected to perform even better on these problems, and who knows what other “thinking” abilities might emerge with more testing.

While spatial reasoning is still a challenge for LLMs, it could be addressed by vision-language-action models like Google's recently announced RT-2. Once AI models can “see” and physically interact with their surroundings, their problem-solving capabilities could improve dramatically.