The exponential growth of AI systems is outpacing both research and regulation, leaving governments in the awkward position of balancing the technology's benefits against its risks.

Laws take years to develop and become legally binding. AI evolves on a weekly basis.

That’s the dichotomy facing AI leaders and politicians, with the first piece of meaningful AI regulation in the West, the EU AI Act, forthcoming in 2026. Even one year ago, ChatGPT was just a whisper.

Top US and EU officials met at the US-EU Trade and Technology Council (TTC) on the 31st of May in Luleå, Sweden. Margrethe Vestager, Europe’s digital commissioner, who had met with Google CEO Sundar Pichai the week before to discuss a potential ‘AI Pact,’ said, “Democracy needs to show we are as fast as the technology.”

Officials acknowledge the yawning gap between the pace of technology and the pace of lawmaking. Referring to generative AI like ChatGPT, Gina Raimondo, the U.S. commerce secretary, said, “It’s coming at a pace like no other technology.”

So what did the TTC meeting achieve?

> Watermarking, external audits, feedback loops – just some of the ideas discussed with @AnthropicAI and @sama @OpenAI for the #AI #CodeOfConduct launched today at the #TTC in #Luleå @SecRaimondo Looking forward to discussing with international partners.
>
> — Margrethe Vestager (@vestager) May 31, 2023

Primarily, attendees discussed non-binding or voluntary frameworks around risk and transparency, which will be presented to the G7 in the fall.

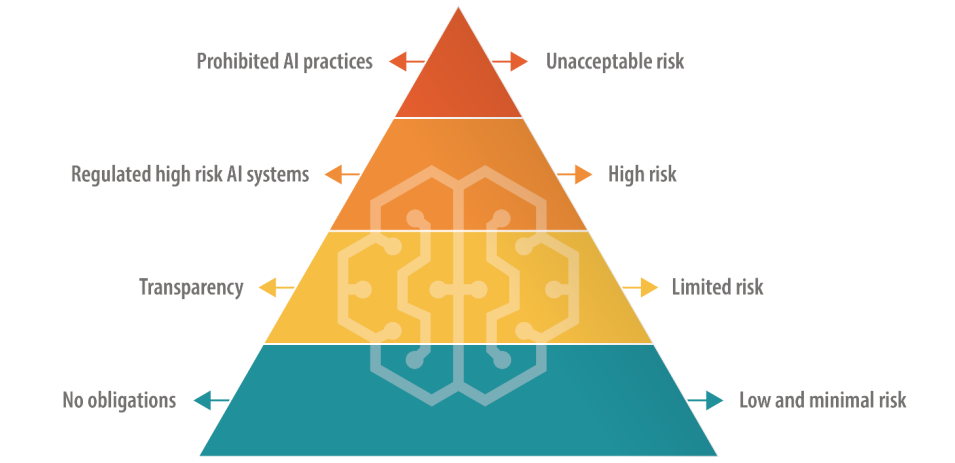

The EU, which takes a more prescriptive approach to digital legislation, has opted for a tiered approach to AI regulation, in which AI systems are sorted into categories based on risk.

These include an “unacceptable risk” tier, whose systems are banned outright, and a “high risk” tier, which tech bosses like OpenAI CEO Sam Altman fear will compromise their products’ functionality.

The US, by contrast, isn’t proposing such binding regulations and instead favors voluntary rules.

Many more meetings between the EU, US, and big tech will be required to align views with meaningful practical action.

Will voluntary AI rules work?

There are many examples of voluntary rules in other sectors and industries, such as voluntary data security frameworks and ESG disclosures, but none sits as close to the cutting edge as a voluntary AI governance framework would.

After all, we’re dealing with an extinction-level threat here, according to top tech leaders and academics who co-signed the Center for AI Safety’s statement on AI risk this week.

Big companies like OpenAI and Google already have central departments focused on governance and internal compliance, so aligning their products and services with voluntary frameworks could be a matter of rewriting internal policy documents.

Voluntary rules are better than nothing, but with numerous proposals on the table, politicians and AI leaders will have to settle on one sooner or later.