AI researchers at Stanford evaluated the compliance of 10 leading AI models against the proposed EU AI Act and found wide variation, with generally lackluster results across the board.

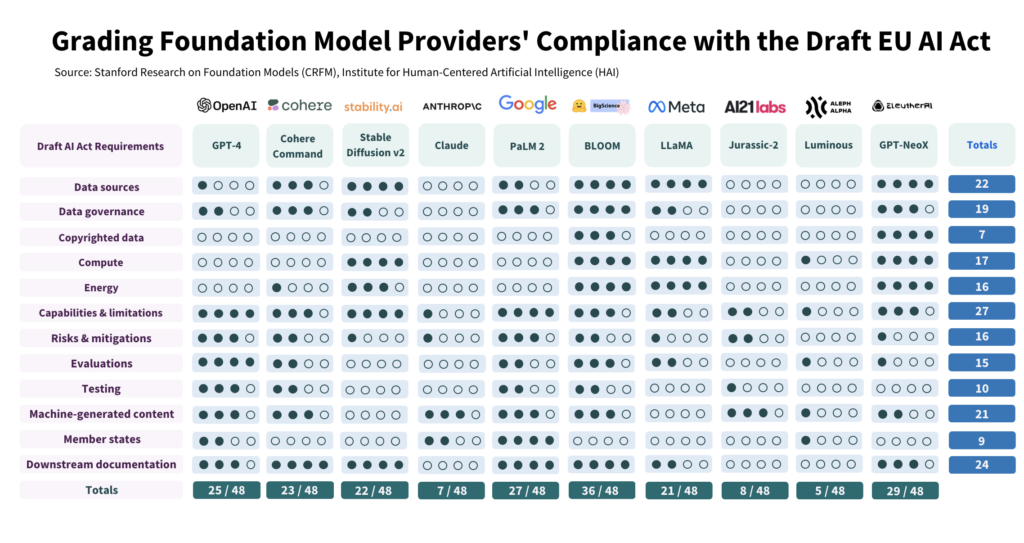

The study critically examined 10 AI models against 12 criteria set out in the EU’s draft legislation and uncovered wide variation in compliance, with no model ticking every box.

The study highlights the chasm between the AI Act’s expectations and current governance efforts among leading AI developers.

To comply with the act, developers must vastly improve their transparency and risk mitigation strategies, which are almost totally lacking in some cases.

How the study was conducted

The authors conducted a detailed study of the AI Act, extracting 22 requirements.

From these, they selected 12 that could be meaningfully evaluated using public information. They then created a 5-point rubric for each of these 12 requirements.

The open-source AI model BLOOM, produced by Hugging Face, emerged as the highest-scoring model with a total of 36 out of a potential 48 points.

Conversely, Google-backed Anthropic and German AI company Aleph Alpha performed significantly poorer, scoring 7 and 5, respectively. ChatGPT fell in the middle of the pack with 25/48.

The 4 leading areas of non-compliance are copyrighted data, energy, risk mitigation, and evaluation/testing.

One of the authors, Kevin Klyman, a researcher at Stanford’s Center for Research on Foundation Models, noted that most developers don’t disclose their risk mitigation strategies, which could be a dealbreaker. Klyman said, “Providers often do not disclose the effectiveness of their risk mitigation measures, meaning that we cannot tell just how risky some foundation models are.”

Moreover, there’s wide variation in the training data used to train models. The EU will require AI developers to be more transparent with their data sources, which 4/10 of developers fail to do. ChatGPT only scored 1 point in that area.

Open source versus proprietary models

The report also discovered a clear dichotomy in compliance depending on whether a model was open source or proprietary.

Open-source models achieved strong scores on resource disclosure and data requirements, but their risks are largely undocumented.

Proprietary models are the opposite – they’re heavily tested and highly documented with robust risk mitigation strategies but are untransparent regarding data and technology-related metrics.

Or, to put a finer point on it, open-source developers don’t have so many competitive secrets to shield, but their products are inherently riskier, as they can be used and modified by almost anyone.

Conversely, private developers will likely keep aspects of their models under lock and key but can demonstrate security and risk mitigation. Even Microsoft, OpenAI’s primary investor, doesn’t fully understand how OpenAI’s models work.

What does the study recommend?

The study’s authors acknowledge that the gap between the EU’s expectations and reality is alarming and put forward several recommendations to policymakers and model developers.

The study recommends to EU Policymakers:

- Refine and specify the parameters of the EU AI Act: The researchers argue that the AI Act’s technical language and parameters are underspecified.

- Promote transparency and accountability: Researchers argue that the strictest rules should be ultra-targeted to the very largest and most dominant developers, which should lead to more effective enforcement.

- Provide sufficient resources for enforcement: For the EU AI Act to be effectively enforced, technical resources and talent should be made available to enforcement agencies.

The study recommends to global policymakers:

- Prioritize transparency: The researchers highlight that transparency is critical and should be the main focus of policy efforts. They argue that lessons from social media regulation reveal the damaging consequences of deficient transparency, which shouldn’t be repeated in the context of AI.

- Clarify copyright issues: The boundaries of copyright for AI training data and AI outputs are hotly debated. Given the low compliance observed in disclosing copyrighted training data, the researchers argue that legal guidelines must specify how copyright interacts with training procedures and the output of generative models. This includes defining the terms under which copyright or licenses must be respected during training and determining how machine-generated content might infringe copyright.

The study recommends to foundation model developers:

- Strive for continuous improvement: Providers should consistently aim to improve their compliance. Larger providers, such as OpenAI, should lead by example and disseminate resources to downstream customers accessing their models via API.

- Advocate for industry standards: Model providers should contribute to establishing industry standards, which can lead to a more transparent and accountable AI ecosystem.

While there are some positives to glean from the risk and monitoring standards established by leading developers like OpenAI, deficiencies in areas such as copyright are far from ideal.

As for applying regulation to AI as an all-encompassing category – that could prove very tricky indeed – as commercial and open-source models are structurally distinct and tough to lump together.