Adobe launches Generative Fill for Photoshop, a generative AI feature powered by Adobe Firefly.

Back in March, Adobe unveiled Firefly, its family of generative AI models, in what the company called one of its most successful public beta launches ever.

Adobe has now brought one of Firefly’s features, Generative Fill, to Photoshop as a beta.

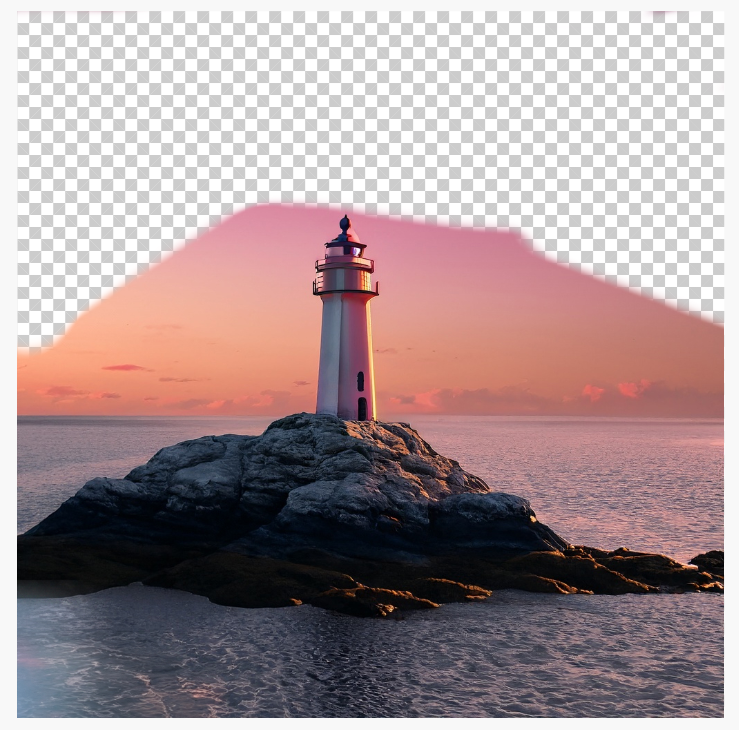

Generative Fill lets Photoshop users remove content from an image, add new elements, and extend the image beyond its original borders, a technique known as outpainting.

Adobe is well-positioned to steer AI’s role within the creative industries, with millions of dedicated users across art, graphic design, photography, video production, animation, and other digital arts.

Generative Fill is the first entry in a catalog of powerful tools coming to the Creative Cloud ecosystem, and it sets a new benchmark for how AI can integrate into existing creative workflows.

How Generative Fill works

Generative Fill works in Photoshop layers and offers three main functions:

- Adding new content to an image

- Removing content from an image

- Extending the borders of the image with new content

To add or remove elements, select the part of the image you want to modify and type a text prompt describing what you want to generate.

For example, you could highlight the sky of your image and type “Northern Lights” in the prompt box.

Within moments, Firefly generates the new content and blends it seamlessly into the existing image.

Generative Fill can realistically match color, shadows, perspective, and reflections, though it takes some experimentation to get the most out of it. You can also try the tool in the Firefly web beta.

While Generative Fill is already impressive, Adobe is preparing to launch several other tools under the Firefly family of AI services.

Fancy changing the weather or lighting of a video clip with one prompt? Or creating photo-realistic images from simple pencil sketches?

That’s exactly what Adobe is promising this year and in early 2024.

Adobe’s stance on copyright and plagiarism

Generative Fill is not just technically innovative – it also reflects Adobe’s stance on copyright and plagiarism.

Unlike DALL-E, Stable Diffusion, and Midjourney, Firefly is trained on Adobe Stock images, openly licensed content, and public domain content whose copyright has expired.

Adobe’s VP of generative AI, Alexandru Costin, said Firefly couldn’t generate branded or trademarked content because it “has never seen that brand content or trademark.”

In other words, Firefly isn’t designed to copy visual content from other artists – a point Adobe’s marketing and PR teams are driving home.

Adobe describes Firefly as “the most differentiated generative AI service on the market, and the only one to generate commercially viable, professional quality content.”

Content Credentials support

Generative Fill also supports Content Credentials, described as a “nutrition label” for AI-generated image content.

Content Credentials attach attribution data to images so viewers can identify an image’s origin and any edits made to it over time. You can use the Content Authenticity Initiative’s site to trace images that carry this metadata.

Adobe’s approach to AI has been technically and ethically robust, which is excellent news for the artists and creators who depend on its tools.

However, the impacts of sophisticated image and video generation are hard to predict, especially as realistic generative AI ventures into the realm of video production.

Generative Fill is just the beginning – soon, we might be able to generate entire video clips with a text prompt.