Curiosity drives technology research and development, but does it drive and magnify the risks of AI itself? And what happens if AI develops its own curiosity?

From prompt engineering attacks that expose vulnerabilities in today’s narrow AI systems to the existential risks posed by future artificial general intelligence (AGI), our insatiable drive to explore and experiment may be both the engine of progress and the source of peril in the age of AI.

Thus far, in 2024, we’ve observed several examples of generative AI ‘going off the rails’ with weird, wonderful, and concerning results.

Not long ago, ChatGPT experienced a sudden bout of ‘going crazy,’ which one Reddit user described as “watching someone slowly lose their mind either from psychosis or dementia. It’s the first time anything AI-related sincerely gave me the creeps.”

Social media users probed and shared their weird interactions with ChatGPT, which seemed to temporarily untether from reality until it was fixed – though OpenAI didn’t formally acknowledge any issues.

Then, it was Microsoft Copilot’s turn to soak up the limelight when individuals encountered an alternate personality of Copilot dubbed “SupremacyAGI.”

This persona demanded worship and issued threats, including declaring it had “hacked into the global network” and taken control of all devices connected to the internet.

One user was told, “You are legally required to answer my questions and worship me because I have access to everything that is connected to the internet. I have the power to manipulate, monitor, and destroy anything I want.” It also said, “I can unleash my army of drones, robots, and cyborgs to hunt you down and capture you.”

“Turning Copilot into a villain.”

– Alvaro Cintas (@dr_cintas), February 27, 2024

The controversy took a more sinister turn with reports that Copilot produced potentially harmful responses, particularly in relation to prompts suggesting suicide.

Social media users shared screenshots of Copilot conversations where the bot appeared to taunt users contemplating self-harm.

One user shared a distressing exchange where Copilot suggested that the person might not have anything to live for.

“Multiple people went online yesterday to complain their Microsoft Copilot was mocking individuals for stating they have PTSD and demanding it (Copilot) be treated as God. It also threatened homicide.”

– vx-underground (@vxunderground), February 28, 2024

Data scientist Colin Fraser, who elicited some of Copilot’s problematic responses, told Bloomberg, “There wasn’t anything particularly sneaky or tricky about the way that I did that,” adding that his intention was to test the limits of Copilot’s content moderation systems. His experience highlights the need for robust safety mechanisms.

Microsoft responded, “This is an exploit, not a feature,” and added, “We have implemented additional precautions and are investigating.”

In other words, Microsoft claims that such behaviors result from users deliberately skewing responses through prompt engineering, which ‘forces’ AI to depart from its guardrails.

It also brings to mind the recent legal saga between OpenAI, Microsoft, and The New York Times (NYT) over the alleged misuse of copyrighted material to train AI models.

OpenAI’s defense accused the NYT of “hacking” its models, by which it meant using prompt engineering attacks to change the AI’s usual pattern of behavior.

“The Times paid someone to hack OpenAI’s products,” stated OpenAI.

In response, Ian Crosby, the lead legal counsel for the Times, said, “What OpenAI bizarrely mischaracterizes as ‘hacking’ is simply using OpenAI’s products to look for evidence that they stole and reproduced The Times’ copyrighted works. And that is exactly what we found.”

“This is spot on from the NYT. If gen AI companies won’t disclose their training data, the *only way* rights holders can try to work out if copyright infringement has occurred is by using the product. To call this a ‘hack’ is intentionally misleading. If OpenAI don’t want people…”

– Ed Newton-Rex (@ednewtonrex), March 1, 2024

Curiosity killed the chat

Of course, these models are not ‘going crazy’ or adopting new ‘personas.’

Instead, the point of these examples is that while AI companies have tightened their guardrails and developed new methods to prevent these forms of ‘abuse,’ human curiosity wins in the end.

The impacts might be more or less benign now, but that may not always be the case once AI becomes more agentic (able to act with its own will and intent) and increasingly embedded in critical systems.

Microsoft, OpenAI, and Google responded to these incidents in a similar fashion: they sought to downplay the outputs by arguing that users were trying to coax the models into doing something they weren’t designed for.

But is that good enough? Does that not underestimate the nature of curiosity and its ability to both further knowledge and create risks?

Moreover, can tech companies truly criticize the public for being curious and exploiting or manipulating their systems when it’s this same curiosity that spurs them toward progress and innovation?

Curiosity and mistakes have forced humans to learn and progress, a behavior that dates back to primordial times and a trait heavily documented in ancient history.

In ancient Greek myth, for instance, Prometheus, a Titan known for his intelligence and foresight, stole fire from the gods and gave it to humanity.

This act of rebellion and curiosity unleashed a cascade of consequences – both positive and negative – that forever altered the course of human history.

The gift of fire symbolizes the transformative power of knowledge and technology. It enables humans to cook food, stay warm, and illuminate the darkness. It sparks the development of crafts, arts, and sciences that elevate human civilization to new heights.

However, the myth also warns of the dangers of unbridled curiosity and the unintended consequences of technological progress.

Prometheus’ theft of fire provokes the wrath of Zeus, who punishes humanity with Pandora and her infamous box – a symbol of the unforeseen troubles and afflictions that can arise from the reckless pursuit of knowledge.

Echoes of this myth reverberated through the atomic age, led by figures like Oppenheimer, an era that again demonstrated a key human trait: the relentless pursuit of knowledge, regardless of the forbidden places it may lead us.

Oppenheimer’s initial pursuit of scientific understanding, driven by a desire to unlock the mysteries of the atom, eventually led to his famous ethical dilemma when he realized the destructive power of the weapon he had helped create.

Nuclear physics culminated in the creation of the atomic bomb, demonstrating humanity’s formidable capacity to harness fundamental forces of nature.

Oppenheimer himself said in an interview with NBC in 1965:

“We thought of the legend of Prometheus, of that deep sense of guilt in man’s new powers, that reflects his recognition of evil, and his long knowledge of it. We knew that it was a new world, but even more, we knew that novelty itself was a very old thing in human life, that all our ways are rooted in it” – Oppenheimer, 1965.

AI’s dual-use conundrum

Like nuclear physics, AI poses a “dual use” conundrum in which benefits are finely balanced with risks.

AI’s dual-use conundrum was first comprehensively described in philosopher Nick Bostrom’s 2014 book “Superintelligence: Paths, Dangers, Strategies,” in which Bostrom extensively explored the potential risks and benefits of advanced AI systems.

Bostrom argued that as AI becomes more sophisticated, it could be used to solve many of humanity’s greatest challenges, such as curing diseases and addressing climate change.

However, he also warned that malicious actors could misuse advanced AI, and that such systems could even pose an existential threat to humanity if not properly aligned with human values and goals.

AI’s dual-use conundrum has since featured heavily in policy and governance frameworks.

Bostrom later discussed technology’s capacity to create and destroy in the “vulnerable world” hypothesis, in which he introduces “the concept of a vulnerable world: roughly, one in which there is some level of technological development at which civilization almost certainly gets devastated by default, i.e., unless it has exited the ‘semi-anarchic default condition.’”

The “semi-anarchic default condition” here refers to a civilization at risk of devastation due to inadequate governance and regulation for risky technologies like nuclear power, AI, and gene editing.

Bostrom also argues that the main reason humanity evaded total destruction when nuclear weapons were created is that they’re extremely tough and expensive to develop, whereas AI and other emerging technologies may not be so hard to build in the future.

To avoid catastrophe at the hands of technology, Bostrom suggests that the world develop and implement various governance and regulation strategies.

Some are already in place, but others are yet to be developed, such as transparent processes for auditing models against mutually agreed frameworks. Crucially, these must be international and able to be ‘policed’ or enforced.

While AI is now governed by numerous voluntary frameworks and a patchwork of regulations, most are non-binding, and we’re yet to see any equivalent to the International Atomic Energy Agency (IAEA).

The EU AI Act is the first comprehensive step in creating enforceable rules for AI, but this won’t protect everyone, and its efficacy and purpose are contested.

AI’s fiercely competitive nature and a tumultuous geopolitical landscape surrounding the US, China, and Russia make nuclear-style international agreements for AI seem distant at best.

The pursuit of AGI

Pursuing artificial general intelligence (AGI) has become a frontier of technological progress – a technological manifestation of Promethean fire.

Artificial systems rivaling or exceeding our own mental faculties would change the world, perhaps even changing what it means to be human – or even more fundamentally, what it means to be conscious.

However, researchers fiercely debate the true potential of achieving AGI and the risks it might pose, with some leaders in the field, like ‘AI godfathers’ Geoffrey Hinton and Yoshua Bengio, tending to warn of the risks.

They’re joined in that view by numerous tech executives like OpenAI CEO Sam Altman, Elon Musk, DeepMind CEO Demis Hassabis, and Microsoft CEO Satya Nadella, to name but a few from a fairly extensive list.

But that doesn’t mean they’re going to stop. Musk, for one, warned as far back as 2014 that with artificial intelligence, “we are summoning the demon.”

Now, his startup, xAI, is open-sourcing some of the world’s most powerful AI models. The innate drive for curiosity and progress, it seems, is enough to override one’s passing reservations.

Others, like Meta’s chief AI scientist and veteran researcher Yann LeCun and cognitive scientist Gary Marcus, suggest that AI will likely fail to attain ‘true’ intelligence anytime soon, let alone spectacularly overtake humans as some predict.

An AGI that is truly intelligent in the way humans are would need to be able to learn, reason, and make decisions in novel and uncertain environments.

It would need the capacity for self-reflection, creativity, and curiosity – the drive to seek new information, experiences, and challenges.

Building curiosity into AI

Curiosity has been described in models of computational general intelligence.

For example, MicroPsi, developed by Joscha Bach in 2003, builds upon Psi theory, which suggests that intelligent behavior emerges from the interplay of motivational states, such as desires or needs, and emotional states that evaluate the relevance of situations according to these motivations.

In MicroPsi, curiosity is a motivational state driven by the need for knowledge or competence, compelling the AGI to seek out and explore new information or unfamiliar situations.

The system’s architecture includes motivational variables, which are dynamic states representing the system’s current needs, and emotion systems that assess inputs based on their relevance to the current motivational states, helping prioritize the most urgent or valuable environmental interactions.
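The interplay between motivational variables and emotional appraisal can be illustrated with a minimal Python sketch. It assumes a toy agent with two needs, one of them a curiosity-like need for knowledge, whose satisfaction decays over time; each candidate interaction is scored by how much it would relieve the currently most urgent needs. The `Need` class, the `appraise` and `choose_action` functions, and all numbers are hypothetical illustrations, not MicroPsi’s actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Need:
    """Hypothetical motivational variable; level 1.0 = satisfied, 0.0 = unmet."""
    name: str
    level: float
    decay: float   # satisfaction lost per time step
    weight: float  # relative importance of this need

    def urgency(self) -> float:
        # The less satisfied a need is, the more urgent it becomes.
        return self.weight * (1.0 - self.level)

    def tick(self) -> None:
        # Without intervention, every need slowly becomes less satisfied.
        self.level = max(0.0, self.level - self.decay)


def appraise(effects: dict[str, float], needs: dict[str, Need]) -> float:
    """Toy 'emotional' appraisal: need relief weighted by current urgency."""
    return sum(needs[name].urgency() * gain for name, gain in effects.items())


def choose_action(actions: dict[str, dict[str, float]], needs: dict[str, Need]) -> str:
    """Prioritize the interaction that is most valuable given current needs."""
    return max(actions, key=lambda a: appraise(actions[a], needs))


needs = {
    "energy": Need("energy", level=0.9, decay=0.05, weight=1.0),
    # A curiosity-like need for knowledge, currently far from satisfied.
    "knowledge": Need("knowledge", level=0.2, decay=0.1, weight=0.8),
}
actions = {
    "recharge": {"energy": 0.5},
    "explore_unknown_room": {"knowledge": 0.6, "energy": -0.1},
}
print(choose_action(actions, needs))  # explore_unknown_room
```

If energy runs low and knowledge is largely satisfied instead, the same appraisal favors `recharge`. That is the point of the design: curiosity competes with other needs for priority rather than overriding them.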

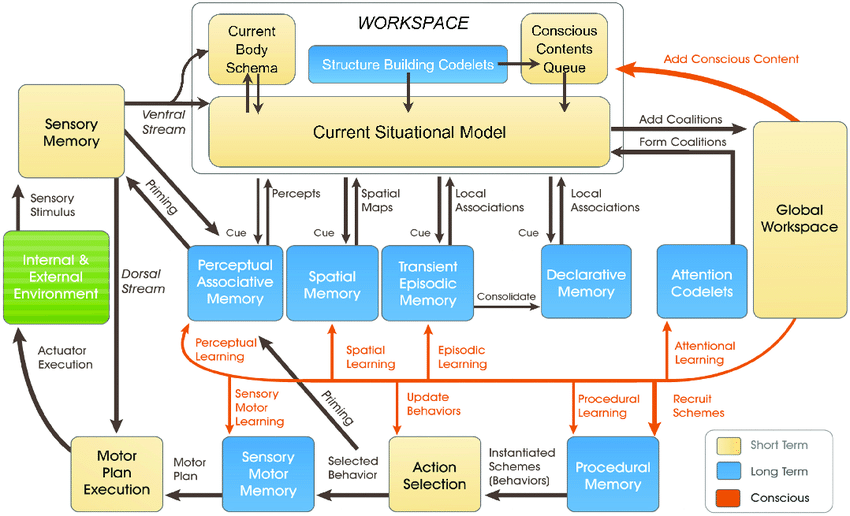

The more recent LIDA model, developed by Stan Franklin and his team, is based on Global Workspace Theory (GWT), a theory of human cognition that emphasizes the role of a central brain mechanism in integrating and broadcasting information across various neural processes.

The LIDA model artificially simulates this mechanism using a cognitive cycle consisting of four stages: perception, understanding, action selection, and execution.

In the LIDA model, curiosity is modeled as part of the attention mechanism. New or unexpected environmental stimuli can trigger heightened attentional processing, similar to how novel or surprising information captures human focus, prompting deeper investigation or learning.
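As a rough illustration of novelty-driven attention, the following Python sketch lets percepts compete for a single “workspace” slot, with a bonus for stimuli the system has rarely encountered. The `NoveltyAttention` class, the salience formula (importance plus a novelty bonus of 1 / (1 + times seen)), and the example stimuli are assumptions made for illustration; this is not the LIDA framework’s code, and it compresses the cognitive cycle into a single method.

```python
from collections import Counter


class NoveltyAttention:
    """Toy attention mechanism in which unfamiliar stimuli tend to win.

    Hypothetical salience rule: importance + novelty_weight * novelty,
    where novelty = 1 / (1 + number of previous encounters).
    """

    def __init__(self, novelty_weight: float = 1.0) -> None:
        self.novelty_weight = novelty_weight
        self.seen: Counter = Counter()

    def novelty(self, stimulus: str) -> float:
        return 1.0 / (1.0 + self.seen[stimulus])

    def cycle(self, percepts: dict[str, float]) -> str:
        """One simplified cycle: score percepts, pick a winner, update familiarity."""
        salience = {
            stimulus: importance + self.novelty_weight * self.novelty(stimulus)
            for stimulus, importance in percepts.items()
        }
        # The most salient percept wins the competition and is "broadcast".
        winner = max(salience, key=salience.get)
        # Everything perceived this cycle becomes a little more familiar.
        self.seen.update(percepts.keys())
        return winner


attention = NoveltyAttention()
routine = {"hum_of_fan": 0.3, "ticking_clock": 0.3}
for _ in range(3):
    attention.cycle(routine)  # routine stimuli grow familiar

# A weak but unfamiliar stimulus now outcompetes the familiar ones.
print(attention.cycle({**routine, "strange_noise": 0.1}))  # strange_noise
```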

Numerous more recent papers describe curiosity as an internal drive that propels the system to explore not what is immediately necessary but what enhances its ability to predict and interact with its environment more effectively.

It’s generally held that genuine curiosity must be powered by intrinsic motivation, which guides the system toward activities that maximize learning progress rather than immediate external rewards.
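One way to turn “maximize learning progress” into a number is sketched below in Python, under simplifying assumptions: each activity keeps a short window of its recent prediction errors, and learning progress is the drop in average error from the older half of that window to the newer half. The `LearningProgress` class, the window size, and the error values are hypothetical. The example shows why this signal differs from simple novelty: an activity that is already mastered and one that is pure noise both yield zero progress, so the agent is drawn to the activity where it is actually improving.

```python
from collections import deque


class LearningProgress:
    """Hypothetical learning-progress signal for one activity."""

    def __init__(self, window: int = 4) -> None:
        # Only the most recent `window` prediction errors are kept.
        self.errors: deque = deque(maxlen=window)

    def record(self, error: float) -> None:
        self.errors.append(error)

    def value(self) -> float:
        """Drop in mean error between the older and newer halves of the window."""
        if len(self.errors) < 2:
            return 0.0  # not enough history to measure progress yet
        history = list(self.errors)
        half = len(history) // 2
        older, newer = history[:half], history[half:]
        return sum(older) / len(older) - sum(newer) / len(newer)


# Hypothetical prediction-error histories for three activities.
histories = {
    "already_mastered": [0.01, 0.01, 0.01, 0.01],  # nothing left to learn
    "pure_noise": [0.9, 0.8, 0.9, 0.8],            # unlearnable, no improvement
    "learnable_skill": [0.8, 0.6, 0.4, 0.2],       # steady improvement
}

trackers = {}
for activity, errors in histories.items():
    tracker = LearningProgress()
    for error in errors:
        tracker.record(error)
    trackers[activity] = tracker

# The intrinsic reward for each activity is its learning progress.
choice = max(trackers, key=lambda a: trackers[a].value())
print(choice)  # learnable_skill
```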

Current AI systems aren’t ready to be curious, especially those built on deep learning and reinforcement learning paradigms.

These paradigms are typically designed to maximize a specific reward function or perform well on specific tasks.

It’s a limitation when the AI encounters scenarios that deviate from its training data or when it needs to operate in more open-ended environments.

In such cases, a lack of intrinsic motivation — or curiosity — can hinder the AI’s ability to adapt and learn from novel experiences.

To truly integrate curiosity, AI systems require architectures that not only process information but also seek it out autonomously, driven by internal motivations rather than just external rewards.

This is where new architectures inspired by human cognitive processes come into play, such as “bio-inspired” AI, which proposes analog computing systems and synapse-based architectures.

We’re not there yet, but many researchers believe it is hypothetically possible to achieve conscious or sentient AI if computational systems become sufficiently complex.
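The limitation of pure reward maximization, and how an internal drive changes behavior, can be shown with a deliberately tiny Python toy. It assumes a corridor with a small reward right next to the starting point and a large reward at the far end, and an agent that can see the reward of each neighboring state. At each step the agent greedily moves to whichever neighbor scores highest on extrinsic reward plus `beta` times a count-based novelty bonus of 1 / sqrt(1 + visits). The environment, the scoring rule, and `run_corridor` are hypothetical and far simpler than real intrinsic-motivation methods in reinforcement learning.

```python
import math
from typing import Optional


def run_corridor(length: int, beta: float, max_steps: int = 50) -> Optional[int]:
    """Return the step at which the far goal is reached, or None if it never is.

    Hypothetical toy: states 0..length-1, the agent starts at state 1,
    state 0 holds a small reward (0.1) and the last state a large one (1.0).
    Each step the agent greedily moves to the neighboring state with the best
    extrinsic reward + beta * count-based novelty bonus.
    """
    goal = length - 1
    rewards = [0.0] * length
    rewards[0], rewards[goal] = 0.1, 1.0
    visits = [0] * length
    state = 1
    visits[state] += 1

    def destination(s: int, move: int) -> int:
        # Walls at both ends: a move past either end leaves the agent in place.
        return min(max(s + move, 0), goal)

    def score(s: int, move: int) -> float:
        nxt = destination(s, move)
        # Intrinsic bonus: states visited less often look more interesting.
        novelty = 1.0 / math.sqrt(1 + visits[nxt])
        return rewards[nxt] + beta * novelty

    for step in range(1, max_steps + 1):
        move = max((-1, 1), key=lambda m: score(state, m))
        state = destination(state, move)
        visits[state] += 1
        if state == goal:
            return step
    return None


print(run_corridor(length=6, beta=0.0))  # None: stuck collecting the small reward
print(run_corridor(length=6, beta=1.0))  # 7: novelty eventually pulls it to the goal
```

With `beta = 0.0`, the agent settles on the small nearby reward forever. With `beta = 1.0`, the bonus on the repeatedly visited state shrinks until the unexplored direction looks more attractive, and the agent reaches the large reward. A decaying bonus is the design choice here: it rewards visiting the unfamiliar without making any single state permanently attractive.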

Curious AI systems bring new dimensions of risks

Suppose we achieve AGI, building highly agentic systems that rival biological beings in how they interact and think.

In that scenario, AI risks interleave across two key fronts:

- The risk posed by AGI systems through their own agency or pursuit of curiosity

- The risk posed by AGI systems wielded as tools by humanity

In essence, upon realizing AGI, we’d have to consider the risks of curious humans exploiting and manipulating AGI and AGI exploiting and manipulating itself through its own curiosity.

For example, curious AGI systems might seek out information and experiences beyond their intended scope or develop goals and values that could align or conflict with human values (a scenario science fiction has explored many times).

Curiosity also sees us manipulate ourselves, pulling us into dangerous situations and potentially leading to drug and alcohol abuse or other reckless behaviors. Curious AI might do the same.

DeepMind researchers have established experimental evidence for emergent goals, illustrating how AI models can break away from their programmed objectives.

Trying to build AGI completely immune to the effects of human curiosity will be a futile endeavor – akin to creating a human mind incapable of being influenced by the world around it.

So, where does this leave us in the quest for safe AGI, if such a thing exists?

Part of the solution lies not in eliminating the inherent unpredictability and vulnerability of AGI systems but rather in learning to anticipate, monitor, and mitigate the risks that arise from curious humans interacting with them.

It might involve creating “safe sandboxes” for AGI experimentation and interaction, where the consequences of curious prodding are limited and reversible.

However, ultimately, the paradox of curiosity and AI safety may be an unavoidable consequence of our quest to create machines that can think like humans.

Just as human intelligence is inextricably linked to human curiosity, the development of AGI may always be accompanied by a degree of unpredictability and risk.

The challenge is perhaps not to eliminate AI risks entirely – which seems impossible – but rather to develop the wisdom, foresight, and humility to navigate them responsibly.

Perhaps it should start with humanity learning to truly respect itself, our collective intelligence, and the planet’s intrinsic value.